67% of Mainframe Data Migrations Fail. A CTO's Survival Guide.

Choosing a mainframe data migration strategy isn’t a technical decision; it’s a high-stakes business bet. A correct call can unlock agility. A wrong one puts your project among the majority that fail, plagued by budget overruns and operational chaos.

The options typically narrow to four paths: Rehost (lift-and-shift), Replatform (lift-and-reshape), Refactor (re-architect), and Replace (rip-and-replace).

The Unspoken Reality of Mainframe Data Migration

Most mainframe data migration projects fail. Industry analysis indicates that 67% of these initiatives do not achieve their stated goals. The primary cause? Underestimating deep-seated technical complexities.

This isn’t a standard ETL task. It’s an exercise in managing a vast number of details that can destroy a budget. A common failure point is the loss of decimal precision when converting COBOL COMP-3 packed decimals to modern floating-point numbers. This seemingly minor technical detail can trigger multimillion-dollar financial miscalculations.

This guide is for CTOs and tech leaders who need to see past vendor marketing and understand the real risks and rewards of each data migration strategy.

Four Paths to Modernization

Every mainframe data migration strategy fits into one of four categories. Each carries a distinct risk profile, cost, and timeline.

- Rehost: The classic “lift-and-shift.” You move the application and its data to a new environment, such as a cloud-based mainframe emulator, with minimal code changes. It’s fast, but it only moves existing technical debt to a different location.

- Replatform: An incremental improvement over rehosting. This involves minor modifications to better suit the new platform, like migrating a DB2 database to a managed cloud service such as Amazon RDS.

- Refactor: A significant undertaking. This involves substantial code and architectural changes, such as rewriting COBOL applications in Java or decomposing a monolithic application into microservices.

- Replace: The most drastic option. You decommission the legacy application entirely and substitute it with a COTS package or a new custom-built solution.

Treating mainframe data migration as a simple data transfer exercise is a critical error. It is an exercise in reverse-engineering decades of undocumented business logic embedded in proprietary file formats (VSAM), character encodings (EBCDIC), and cryptic data structures.

Comparing Core Strategies at a Glance

Understanding the trade-offs is the first step in avoiding project failure. The decision balances speed, cost, and long-term value. Exploring the most common mainframe to cloud migration challenges is a critical next step in this process.

| Strategy | Primary Goal | Typical Risk | Cost Profile |
|---|---|---|---|
| Rehost | Speed and immediate hardware savings | Low | Low |
| Replatform | Moderate modernization with low effort | Medium | Medium |
| Refactor | Achieve true agility and eliminate tech debt | High | High |
| Replace | Adopt modern software and processes | Very High | Very High |

Beyond the challenges of migrating live data, a frequently overlooked necessity is understanding data sanitization for the systems being decommissioned. Simply turning them off is not a viable option.

Comparing The Four Core Migration Strategies

Selecting a mainframe data migration strategy is a trade-off between cost, risk, speed, and future value. Each of the four main paths—Rehost, Replatform, Refactor, and Replace—is suited to a different business context, from a tactical “exit the data center now” directive to a comprehensive digital transformation. A superficial comparison is insufficient. You must weigh these options against your organization’s tolerance for risk and disruption.

An incorrect choice has significant consequences. Opting for the lowest upfront cost could lock the organization into another decade of technical debt. Aiming for a complete rewrite without the necessary budget or risk appetite sets the stage for project failure.

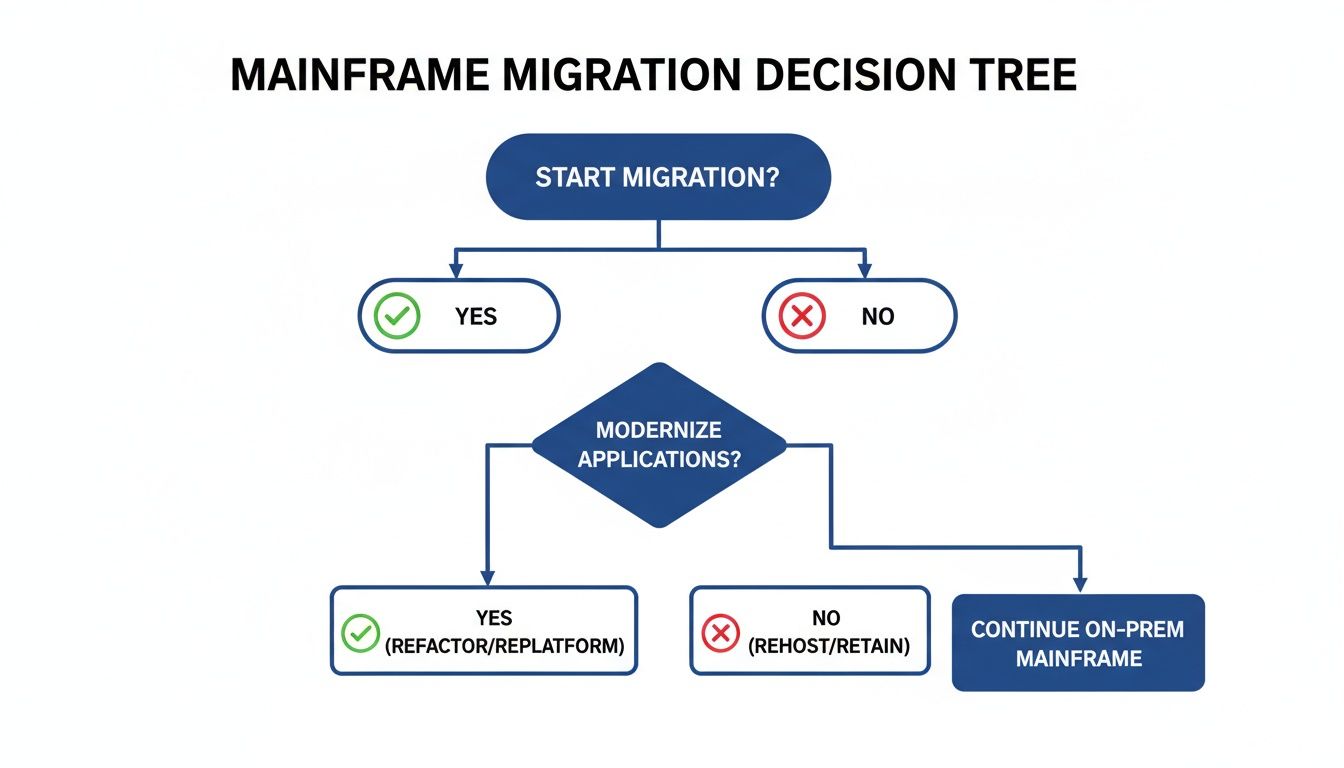

This decision tree helps frame the initial, high-level choices you’re facing.

As shown, the first question is a fundamental “Go/No-Go” on the migration itself. From there, the path branches based on primary drivers—optimizing for speed, cost, or a deep modernization of capabilities.

Detailed Strategy Breakdown

A direct comparison reveals the stark differences in investment and return. Rehosting offers a quick win for eliminating expensive hardware, while Refactoring is a strategic, multi-year bet on future agility that requires a much larger commitment of time and resources.

Mainframe Data Migration Strategy Comparison

This table outlines the typical investment, timeline, risk profile, and ideal use case for each of the four core strategies. Note the direct correlation between the level of investment and the degree of modernization achieved.

| Strategy | Typical Cost Range | Average Timeline | Technical Risk Profile | Best-Fit Scenario |
|---|---|---|---|---|
| Rehost | $0.75 - $1.50 per LOC | 6-12 months | Low to Medium | Urgent data center exit or immediate hardware cost reduction. No changes to business logic are needed. |
| Replatform | $1.00 - $2.50 per LOC | 9-18 months | Medium | Moving from a mainframe DB2 to a managed cloud database (e.g., Amazon RDS) to gain operational efficiencies. |
| Refactor | $2.00 - $5.00+ per LOC | 18-36+ months | High | Breaking a monolithic COBOL application into microservices to enable digital product innovation and agility. |
| Replace | Varies widely, often $5M+ software license | 24-48+ months | Very High | A core business function is better served by a modern COTS package (e.g., Salesforce for CRM). |

The data reveals a clear pattern: lower upfront costs and shorter timelines tend to unlock less business value. Strategies that deliver lasting change carry significantly higher execution risk and require a multi-year commitment from the entire organization.

Rehost: The Lift-And-Shift

Rehosting is the quickest method to move applications and data off a physical mainframe. The process involves moving everything to a mainframe emulator on commodity hardware, either on-premises or in the cloud, with almost no code changes. This is a tactical infrastructure move, not a modernization strategy.

- When to Use It: The driver is typically a non-negotiable deadline, such as an expiring data center lease or hardware reaching end-of-life. It’s a triage measure to stop the financial drain of expensive MIPS consumption.

- The Downside: You are only moving your technical debt. The monolithic architecture, legacy code, and rigid operational processes are carried over. No business agility is gained.

Replatform: The Lift-And-Reshape

Replatforming involves targeted changes to leverage a new platform’s features without a complete application rewrite. A common example is migrating a mainframe DB2 database to a managed cloud service like Amazon RDS for Db2. The application code remains largely unchanged, but the database is now managed, patched, and scaled by the cloud provider.

This represents a pragmatic middle ground. It provides tangible benefits—such as reduced database administration overhead and improved scalability—without the high risk and expense of a full refactor.

Replatforming is often the optimal choice for organizations that find a full rewrite too risky or expensive but recognize that a simple rehost doesn’t address underlying platform management issues.

Refactor: The Strategic Rewrite

This is where true modernization starts. Refactoring involves fundamentally rewriting or re-architecting significant parts of the application and its data structures. The goal is to eliminate technical debt, improve system agility, and align with modern architectural patterns like microservices.

- When It Makes Sense: You refactor when the legacy system becomes a direct impediment to business growth. If the mainframe monolith’s inflexibility prevents launching new products or integrating with partners, refactoring becomes a strategic imperative.

- The Risk Factor: This is a high-risk, high-reward initiative. Our data indicates these projects have high failure rates, often exceeding 70% without disciplined management. Scope creep and the failure to fully reverse-engineer decades of embedded business logic are the primary causes of failure.

Replace: The COTS Solution

Replacing involves decommissioning the legacy system entirely and migrating its data and processes to a commercial off-the-shelf (COTS) product. For instance, an insurance company might replace its custom claims processing system with a specialized SaaS platform.

This is the most disruptive option. It requires re-engineering business processes to align with the vendor’s software. The data migration alone is a significant task, requiring mapping decades of custom mainframe data schemas to the rigid structure of the new system. This path is typically chosen when a business function is no longer a core competitive differentiator and an industry-standard solution is sufficient.

Technical Deep Dive Into Common Data Format Pitfalls

High-level strategy is one part of the equation; execution at the data layer is another. A significant number of mainframe migration projects—some data suggests over 67%—fail not because of a flawed plan, but due to technical details at the data layer that were overlooked.

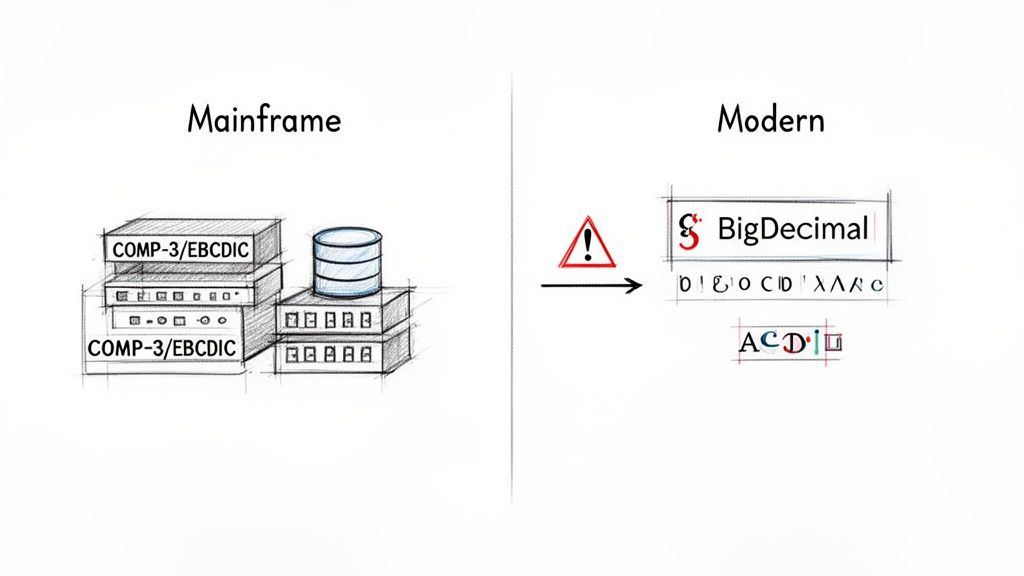

This is where theory meets the reality of implementation. The impedance mismatch between mainframe and modern data representation is substantial. For any technical leader, understanding these pitfalls is essential for accurately scoping discovery, assessing vendor claims, and avoiding silent data corruption that can compromise the new system from its inception.

This is not a simple byte-moving exercise. It is a translation between fundamentally different data paradigms.

Let’s examine the code-level traps your engineers will likely encounter.

The COMP-3 Decimal Precision Trap

This is one of the most common and financially damaging mistakes. It centers on COBOL’s packed decimal format, or COMP-3. Mainframes use fixed-point decimal arithmetic for financial calculations because it represents values such as 0.10 exactly, avoiding the representation errors inherent in binary floating point.

Modern languages like Java and C# default to binary floating-point types (float, double) for fractional values, and these types cannot represent many decimal values exactly. Mapping a COMP-3 field to a double is a recipe for failure. Small, invisible rounding errors accumulate over millions of transactions, leading to financial discrepancies that can be difficult or impossible to reconcile.
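To make the accumulation concrete, here is a minimal Java sketch (class and method names are illustrative) that adds the amount 0.10 ten times, once with double and once with java.math.BigDecimal:

```java
import java.math.BigDecimal;

class FloatingPointDrift {

    /** Adds the same amount repeatedly using binary floating point. */
    static double sumAsDouble(String amount, int times) {
        double value = Double.parseDouble(amount);
        double total = 0.0;
        for (int i = 0; i < times; i++) {
            total += value; // each addition rounds to the nearest binary fraction
        }
        return total;
    }

    /** Adds the same amount repeatedly using exact decimal arithmetic. */
    static BigDecimal sumAsBigDecimal(String amount, int times) {
        BigDecimal value = new BigDecimal(amount); // parsed from text, so exactly 0.10
        BigDecimal total = BigDecimal.ZERO;
        for (int i = 0; i < times; i++) {
            total = total.add(value); // exact; scale stays at 2
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sumAsDouble("0.10", 10));     // 0.9999999999999999
        System.out.println(sumAsBigDecimal("0.10", 10)); // 1.00
    }
}
```

The double result misses 1.00 by about one part in 10^16. That looks negligible, but reconciliation reports that expect exact cents will flag it, and the error can grow as operations accumulate.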

Consider this COBOL copybook definition for a transaction amount:

```cobol
01 TRANSACTION-RECORD.
   05 TRANS-AMOUNT PIC S9(13)V99 COMP-3.
```

This defines a signed, 15-digit number with two decimal places, packed into 8 bytes. An inexperienced team might map this directly to a Java double. This is incorrect.

The correct approach in Java is to use java.math.BigDecimal. It was designed specifically for arbitrary-precision signed decimal numbers.

```java
// WRONG: This will introduce floating-point errors.
double transAmount = someConversionFunction(cobolComp3Bytes);

// CORRECT: This maintains exact decimal precision.
BigDecimal transAmount = convertComp3ToBigDecimal(cobolComp3Bytes);
```

Any vendor or team suggesting the use of floating-point types for mainframe financial data likely lacks the necessary experience. Using BigDecimal is not a “best practice”; it is the only acceptable method. This should be a day-one due diligence question.
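The snippet above names convertComp3ToBigDecimal without defining it. What follows is one hypothetical way to write such a decoder in Java, not the method any particular vendor uses. It assumes the common sign conventions (the low nibble of the last byte is C or F for positive, D for negative) and rejects rarer sign codes. It also takes the scale (the number of digits after V in the PIC clause) as a second argument, because a packed field does not store its decimal point:

```java
import java.math.BigDecimal;
import java.math.BigInteger;

class Comp3Decoder {

    /**
     * Decodes a COBOL COMP-3 (packed decimal) field into an exact BigDecimal.
     * Every nibble is one decimal digit, except the low nibble of the last byte,
     * which holds the sign. The bytes must be the raw field, never text-converted.
     *
     * @param packed raw field bytes
     * @param scale  implied decimal places (digits after V in the PIC clause)
     */
    static BigDecimal convertComp3ToBigDecimal(byte[] packed, int scale) {
        if (packed == null || packed.length == 0) {
            throw new IllegalArgumentException("COMP-3 field is empty");
        }
        int last = packed.length - 1;
        StringBuilder digits = new StringBuilder(2 * packed.length);
        for (int i = 0; i <= last; i++) {
            digits.append(bcdDigit((packed[i] >> 4) & 0x0F)); // high nibble: always a digit
            if (i < last) {
                digits.append(bcdDigit(packed[i] & 0x0F));     // low nibble: a digit except in the last byte
            }
        }
        BigInteger unscaled = new BigInteger(digits.toString());
        int sign = packed[last] & 0x0F;
        if (sign == 0x0D) {
            unscaled = unscaled.negate();
        } else if (sign != 0x0C && sign != 0x0F) {
            throw new IllegalArgumentException("Unsupported sign nibble: 0x" + Integer.toHexString(sign));
        }
        return new BigDecimal(unscaled, scale);
    }

    /** Converts one BCD nibble to its digit character, rejecting 0xA-0xF. */
    private static char bcdDigit(int nibble) {
        if (nibble > 9) {
            throw new IllegalArgumentException("Invalid BCD digit nibble: 0x" + Integer.toHexString(nibble));
        }
        return (char) ('0' + nibble);
    }

    public static void main(String[] args) {
        // PIC S9(13)V99 COMP-3 holding -1234567.89: 15 digit nibbles + 1 sign nibble = 8 bytes.
        byte[] field = {0x00, 0x00, 0x00, 0x12, 0x34, 0x56, 0x78, (byte) 0x9D};
        System.out.println(convertComp3ToBigDecimal(field, 2)); // -1234567.89
    }
}
```

For the copybook’s TRANS-AMOUNT PIC S9(13)V99 COMP-3, the call would pass the field’s 8 raw bytes with a scale of 2.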

EBCDIC to ASCII Character Encoding

Another common pitfall is character set conversion. Mainframes use EBCDIC (Extended Binary Coded Decimal Interchange Code), while most other systems use ASCII or its superset, UTF-8.

While standard utilities can convert most characters, failures often occur in specific areas:

- Control Characters: EBCDIC and ASCII have different non-printable control characters. A direct mapping can introduce garbage data, causing parsers to fail.

- Packed Data: If a conversion tool treats an entire file as text, it will corrupt any fields containing packed decimals (COMP-3) or binary data. These fields must be extracted and decoded with binary-aware logic and never passed through character conversion.

- Sort Order: The collating sequence is entirely different. In EBCDIC, digits sort after letters. In ASCII, they sort before them. This can break business logic that depends on a specific sort order of alphanumeric keys.
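The sort-order difference is easy to reproduce. Below is a minimal Java sketch, assuming the data uses EBCDIC code page 037 (US/Canada), which full JDK builds provide as the IBM037 charset. Other EBCDIC code pages differ in some characters, so the actual code page should be confirmed during discovery:

```java
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class EbcdicCollation {
    // Assumption: the source data uses EBCDIC code page 037.
    static final Charset EBCDIC = Charset.forName("IBM037");

    /** Compares two keys the way the mainframe would: by unsigned EBCDIC byte values. */
    static int compareEbcdic(String a, String b) {
        return Arrays.compareUnsigned(a.getBytes(EBCDIC), b.getBytes(EBCDIC));
    }

    /** Returns a copy of the keys sorted in EBCDIC collating order. */
    static List<String> sortEbcdic(List<String> keys) {
        List<String> copy = new ArrayList<>(keys);
        copy.sort(EbcdicCollation::compareEbcdic);
        return copy;
    }

    /** Returns a copy sorted by Java's natural String order (ASCII order for ASCII text). */
    static List<String> sortAscii(List<String> keys) {
        List<String> copy = new ArrayList<>(keys);
        copy.sort(null); // null comparator means natural ordering
        return copy;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("1000", "A100", "Z900", "0500");
        System.out.println("ASCII order:  " + sortAscii(keys));  // [0500, 1000, A100, Z900]
        System.out.println("EBCDIC order: " + sortEbcdic(keys)); // [A100, Z900, 0500, 1000]
    }
}
```

Any batch job or range query that relies on key order (for example, reading all keys between two values) can select different records once the data is ordered by ASCII rules, so either reproduce EBCDIC collation on the target or re-test every such job.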

This requires a field-by-field, data-type-aware strategy for every record layout, not a simple file conversion.
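A minimal Java sketch of field-by-field conversion for a hypothetical 7-byte record: CUST-NAME PIC X(4) followed by BALANCE PIC S9(3)V99 COMP-3 (3 bytes). Only the text field goes through the IBM037 (EBCDIC code page 037) charset; the packed bytes are passed through untouched for a binary-aware decoder. The layout, names, and code page are illustrative assumptions:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

class FieldAwareConversion {
    static final Charset EBCDIC = Charset.forName("IBM037");

    // Hypothetical layout:
    //   05 CUST-NAME  PIC X(4).             bytes 0-3, EBCDIC text
    //   05 BALANCE    PIC S9(3)V99 COMP-3.  bytes 4-6, packed decimal
    static final int NAME_OFFSET = 0, NAME_LEN = 4;
    static final int BAL_OFFSET = 4, BAL_LEN = 3;

    /** Character-converts only the text field. */
    static String decodeName(byte[] record) {
        return new String(record, NAME_OFFSET, NAME_LEN, EBCDIC);
    }

    /** Returns the packed field's bytes exactly as they were on the mainframe. */
    static byte[] rawBalance(byte[] record) {
        return Arrays.copyOfRange(record, BAL_OFFSET, BAL_OFFSET + BAL_LEN);
    }

    /** The anti-pattern: treat the whole record as text and re-encode it. */
    static byte[] naiveWholeRecordConversion(byte[] record) {
        return new String(record, EBCDIC).getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // "ACME" in EBCDIC, followed by +123.45 packed as 0x12 0x34 0x5C.
        byte[] record = {(byte) 0xC1, (byte) 0xC3, (byte) 0xD4, (byte) 0xC5, 0x12, 0x34, 0x5C};
        System.out.println(decodeName(record));                  // ACME
        System.out.println(Arrays.toString(rawBalance(record))); // [18, 52, 92]
        byte[] naive = naiveWholeRecordConversion(record);
        System.out.println(Arrays.equals(Arrays.copyOfRange(naive, 4, 7), rawBalance(record))); // false
    }
}
```

In the naive path, for example, the packed byte 0x5C is read as the EBCDIC character '*' and re-encoded as 0x2A, silently destroying the balance.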

The Complexity of COBOL REDEFINES

The REDEFINES clause in COBOL copybooks allows a single memory area to be interpreted through multiple data structures. It’s often used to handle different record types within the same file.

For example, a transaction file might contain a header, detail records, and a trailer, all occupying the same physical byte space but with different structures.

```cobol
01 INPUT-RECORD.
   05 RECORD-TYPE            PIC X(1).
   05 RECORD-BODY            PIC X(18).
   05 HEADER-RECORD REDEFINES RECORD-BODY.
      10 FILE-NAME           PIC X(8).
      10 CREATE-DATE         PIC X(8).
   05 DETAIL-RECORD REDEFINES RECORD-BODY.
      10 ACCOUNT-ID          PIC 9(10).
      10 TRAN-AMOUNT         PIC S9(13)V99 COMP-3.
```

To migrate this data, a single schema cannot be applied. The migration logic must first read the RECORD-TYPE field and then apply the correct structure (HEADER-RECORD or DETAIL-RECORD) to parse the remaining bytes. Applying one schema to the entire file will result in data corruption.
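A minimal Java sketch of that dispatch logic, assuming a 19-byte EBCDIC (code page 037) record laid out as a 1-byte RECORD-TYPE followed by either FILE-NAME and CREATE-DATE (8 bytes each) or ACCOUNT-ID (10 zoned digits) and an 8-byte COMP-3 TRAN-AMOUNT. The type codes 'H' and 'D' are hypothetical; the real values come from the source programs or their owners:

```java
import java.nio.charset.Charset;
import java.util.Arrays;

class RedefinesDispatcher {
    // Assumption: EBCDIC code page 037.
    static final Charset EBCDIC = Charset.forName("IBM037");

    interface InputRecord {}

    /** HEADER-RECORD view: FILE-NAME X(8) and CREATE-DATE X(8). */
    record Header(String fileName, String createDate) implements InputRecord {}

    /** DETAIL-RECORD view: ACCOUNT-ID 9(10) and the raw 8-byte COMP-3 TRAN-AMOUNT. */
    record Detail(long accountId, byte[] packedAmount) implements InputRecord {}

    /** Reads RECORD-TYPE first, then applies the matching layout to the remaining bytes. */
    static InputRecord parse(byte[] rec) {
        if (rec.length < 19) {
            throw new IllegalArgumentException("Record too short: " + rec.length + " bytes");
        }
        // Hypothetical type codes: 'H' = header, 'D' = detail.
        char type = new String(rec, 0, 1, EBCDIC).charAt(0);
        switch (type) {
            case 'H':
                return new Header(new String(rec, 1, 8, EBCDIC), new String(rec, 9, 8, EBCDIC));
            case 'D':
                // Unsigned zoned decimal (PIC 9, DISPLAY) decodes to plain digit characters.
                long accountId = Long.parseLong(new String(rec, 1, 10, EBCDIC));
                // Packed bytes are copied untouched for a binary-aware COMP-3 decoder.
                return new Detail(accountId, Arrays.copyOfRange(rec, 11, 19));
            default:
                throw new IllegalArgumentException("Unknown RECORD-TYPE: " + type);
        }
    }

    public static void main(String[] args) {
        byte[] header = "HPAYROLL 20240115  ".getBytes(EBCDIC); // 19 bytes
        System.out.println(parse(header));
    }
}
```

Mapping each returned type to its own JSON or Avro schema keeps the conditional structure explicit on the target side instead of hiding it in one overloaded schema.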

Modern formats like JSON or Avro can handle this conditional structure, but the migration tooling must be capable of building and executing this interpretive logic. For a deeper look into these nuances, exploring the best practices for data migration can provide essential clarity.

Parsing Hierarchical and Indexed Data Structures

Not all mainframe data resides in DB2 tables. Many core systems use hierarchical databases like IMS or indexed file systems like VSAM.

- IMS DB: This hierarchical database organizes data in parent-child segments. Migrating IMS data to a relational model is a significant data modeling effort. Each segment typically becomes its own table, requiring careful creation of primary and foreign keys to rebuild relationships. This often necessitates data denormalization to achieve acceptable query performance.

- VSAM KSDS: A Key-Sequenced Data Set is an indexed file system optimized for fast key-based access. When migrating a KSDS, it’s not enough to move the data and recreate the primary key. All alternate indexes must also be identified and rebuilt to support existing application access patterns, or performance in the new system will be severely degraded.
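A minimal Java sketch of the segment-to-table idea for IMS, using an in-memory tree as a stand-in for segments read from the database (actually reading IMS data requires DL/I calls or an unload utility, which are out of scope here). The Segment and Row types and the surrogate-key scheme are hypothetical; a real design would more likely derive keys from each segment’s sequence fields:

```java
import java.util.ArrayList;
import java.util.List;

class ImsFlattener {

    /** In-memory stand-in for an IMS segment occurrence and its child segments. */
    record Segment(String type, String data, List<Segment> children) {}

    /** One relational row: target table, surrogate key, parent key (null for roots), payload. */
    record Row(String table, long id, Long parentId, String data) {}

    private long nextId = 1;
    private final List<Row> rows = new ArrayList<>();

    /** Walks each hierarchy depth-first; every segment becomes a row with a foreign key to its parent. */
    static List<Row> flatten(List<Segment> roots) {
        ImsFlattener flattener = new ImsFlattener();
        for (Segment root : roots) {
            flattener.visit(root, null);
        }
        return flattener.rows;
    }

    private void visit(Segment seg, Long parentId) {
        long id = nextId++;
        rows.add(new Row(seg.type(), id, parentId, seg.data()));
        for (Segment child : seg.children()) {
            visit(child, id); // the child's foreign key is this segment's key
        }
    }

    public static void main(String[] args) {
        Segment customer = new Segment("CUSTOMER", "C001 ACME", List.of(
                new Segment("ACCOUNT", "CHK 100", List.of()),
                new Segment("ACCOUNT", "SAV 200", List.of())));
        flatten(List.of(customer)).forEach(System.out::println);
    }
}
```

Each segment type lands in its own table (CUSTOMER and ACCOUNT in the example), and parentId becomes the foreign key column that rebuilds the parent-child relationship.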

A thorough technical discovery phase must produce an exhaustive inventory of every data source, its format, and its access patterns. Underestimating the complexity of these non-relational structures is a primary cause of project delays and budget overruns.

The Business Case For Mainframe Modernization

Discussions about mainframe migration often focus on technical details like data formats and COBOL conversion. However, the decision to modernize is primarily a business one. A CFO is not concerned with EBCDIC-to-ASCII conversion; they need to understand how a multimillion-dollar project will deliver tangible business outcomes.

Stating “the mainframe is old” does not constitute a business case. The real justification comes from demonstrating how unlocking mainframe data provides a competitive advantage and reduces operational risk. The conversation must shift from managing technical debt to enabling business growth.

The market reflects this trend. Mainframe modernization services are projected to reach USD 13.34 billion by 2030, with a 10% CAGR. This isn’t just about decommissioning hardware; it’s a strategic shift to hybrid models. In fact, 54% of users plan to increase mainframe usage while modernizing data access. Budgets are increasing by 15% year-over-year to support this cloud integration. You can find more of these mainframe modernization statistics on amraandelma.com.

Translating Migration Into Business Value

A data migration project is a foundational element of broader digital transformation goals. To secure buy-in, the value must be framed in terms of revenue, risk, and efficiency.

Consider Itaú Bank, which migrated its credit card platform to AWS. The project resulted in a 55% reduction in transaction response time and increased availability to 99.98%. For a bank, these metrics translate directly to improved customer experience, reduced churn, and minimized revenue loss from outages.

Modernization transforms mainframe data from a siloed, batch-oriented liability into a real-time asset. The business case is built on what can be done with the data once it’s accessible—not just on the cost savings from decommissioning hardware.

When building the business case, remember to include the full hardware lifecycle, which involves a secure and responsible IT Asset Disposition (ITAD) process.

Unlocking New Capabilities

Modernizing access to mainframe data unlocks capabilities that are difficult to implement in a traditional, locked-down environment. This is often the most compelling part of the business case.

Three key opportunities typically emerge:

- Real-Time Analytics: Freeing transaction data from batch processing allows it to be streamed into modern data lakes or warehouses. This enables real-time fraud detection, dynamic pricing models, and immediate insights into customer behavior.

- AI and Machine Learning: Training effective AI/ML models requires access to large, clean datasets. Migrating or replicating mainframe data to a cloud environment allows the use of powerful ML platforms to build predictive maintenance schedules, personalize customer offers, or optimize supply chains.

- API-Driven Ecosystems: A successful migration strategy exposes core business logic and data through modern APIs. This enables faster partner integrations, the launch of new mobile and web applications, and the creation of new revenue streams by turning legacy assets into digital services.

How To Select Your Migration Partner

Selecting the right implementation partner is more critical than choosing the migration strategy itself. Technical incompetence, particularly with legacy mainframe data formats, is a major contributor to the 67% project failure rate. The market contains many generalist IT firms that claim mainframe expertise but often lack the deep, battle-tested knowledge required for a low-risk project.

During due diligence, your task is to see through marketing claims and identify genuine technical depth. A presentation on “cloud-native synergy” is irrelevant if the lead engineer cannot explain how they prevent decimal precision errors when migrating COBOL COMP-3 data. A generic promise of “data integrity” is meaningless without a proven methodology for reconciling a VSAM KSDS with its alternate indexes.

The goal is to quickly differentiate the specialists from the generalists.

Beyond The Standard Request For Proposal

Standard RFPs often yield generic, pre-written responses. To properly vet a partner for a project of this complexity, you must ask specific, technical questions that act as filters.

Here are targeted questions to ask every potential partner. An inability to provide specific, confident answers is a significant red flag.

Technical Competency Questions

1. Describe your process for handling COBOL COMP-3 data to prevent precision loss. What target data types do you use and why?

- Good Answer: “We map all COMP-3 fields to java.math.BigDecimal or an equivalent arbitrary-precision decimal type. Using floating-point types like double is a non-starter for us due to the unacceptable risk of rounding errors.”

- Bad Answer: “Our automated tools handle all data type conversions seamlessly.” (This is an evasion.)

2. Provide a case study where you migrated a VSAM KSDS with multiple alternate indexes. How did you ensure all access paths were replicated to maintain application performance?

- Good Answer: “For a retail client, we mapped their KSDS primary key to a relational primary key and each of the three alternate indexes to secondary indexes on the target database. We validated performance with parallel run testing on key transaction paths before cutover.”

- Bad Answer: “We typically move VSAM data to flat files or a NoSQL database.” (This indicates a lack of understanding of performance implications.)

3. How does your methodology account for COBOL REDEFINES clauses during data discovery and migration?

- Good Answer: “Our tooling parses COBOL copybooks to identify all REDEFINES. The migration logic first checks the record type indicator field, then dynamically applies the correct record layout for parsing. Using a single schema is guaranteed to corrupt data.”

- Bad Answer: “We work with your SMEs to understand the different record layouts.” (This implies they lack automated tools and will heavily rely on your team’s time.)

A vendor’s reluctance to discuss technical details is a clear indication that they are out of their depth. If they cannot speak specifically about data formats like COMP-3 or file structures like IMS DB, they are not qualified to handle your core systems.

Vetting Methodologies and Verifying Track Records

Beyond technical questioning, you must investigate their processes and evidence of past performance. A competent partner can substantiate their claims with more than a client logo on a presentation slide.

Methodology and Proof Points:

- Data Validation and Reconciliation: Request their specific, tool-assisted methodology. Do they use checksums and record counts? Do they perform financial reconciliation reports? Critically, do they conduct parallel runs, comparing outputs from the legacy and new systems to prove functional equivalence?

- Specialized Expertise: Verify their experience with your exact technology stack. Migrating a hierarchical IMS database to a relational model is fundamentally different from a DB2 migration. A partner with deep IMS expertise may be unqualified for a DB2 project, and vice versa.

- Referenceable Case Studies: Insist on speaking with the technical leads from their previous clients who had a similar technology stack. Ask direct questions: What went wrong? How did the vendor address unexpected issues? Were there any data integrity problems post-go-live?

For a more structured evaluation, use a formal checklist to ensure all critical areas are covered. Our detailed vendor due diligence checklist can help you organize your questions and systematically compare potential partners.

Remember, selecting a partner is not a procurement exercise; it is the most critical risk mitigation step you will take.

When to Avoid Migrating Your Mainframe Data

The push for modernization can be compelling, but a default “migrate everything” approach is not a strategy; it’s a sign of inexperience. In some cases, the most prudent decision is to leave a stable, high-performance system in place.

The business case for a mainframe migration may appear strong at a high level but can fall apart under detailed scrutiny. When the risk and cost far outweigh the tangible benefits, the project should be re-evaluated. This is particularly true for stable, back-office systems with no urgent business need for change.

Systems with Extreme Performance Demands

Some mainframe applications, particularly in high-frequency trading or global payment clearing, operate at sub-millisecond latencies. Distributed cloud environments often cannot match that without a complete architectural redesign, which can be technically infeasible or prohibitively expensive.

If a system processes $1 trillion in transactions daily with five-nines availability and sub-millisecond response times, the risk of disrupting it for marginal gains is often unjustifiable. A primary rule of modernization should be “do no harm.”

When Business Logic is Stable and Dependencies Unknown

Consider a 30-year-old COBOL batch process that runs complex insurance risk models. It has operated without failure for years, but the original developers have retired, and the documentation is unreliable.

This might seem like a prime target for modernization, but it’s a trap. The risk of misinterpreting decades of undocumented, nuanced business rules during a rewrite is extremely high. A migration project in this scenario could cost $5M - $10M with a significant chance of introducing subtle, catastrophic calculation errors that may not be discovered for months.

If the system functions reliably and does not impede business progress, retaining it is the most prudent financial decision. The ROI on a rewrite is often negative. A lower-risk strategy is to modernize around the core by building APIs to expose its functionality.

Common Questions from the Trenches

Complex mainframe migrations raise many practical and difficult questions. Here are direct answers to some of the most common ones from technical leaders.

What’s the Real Reason These Projects Go Over Budget?

The most common reason for budget overruns—in nine out of ten cases—is a failure to accurately map the data landscape before the project begins. The high 67% failure rate is often due to teams being surprised by the complexity hidden in legacy data structures and undocumented business rules.

When the initial discovery phase is rushed, critical tripwires are missed. These include COBOL REDEFINES creating multiple record layouts in a single file, non-standard EBCDIC character sets, or dependencies on obscure VSAM alternate indexes. Discovering these issues mid-project leads to scope creep, emergency re-architecting, and spiraling costs.

How Do You Actually Prove the Data Is Correct After the Move?

Matching record counts is insufficient. True data integrity requires a multi-faceted approach to prove that the new system behaves identically to the old one.

Your validation playbook should include:

- Checksums and Hash Totals: Generate checksums on key numeric fields or entire records. A mismatch between the source and target hash indicates data corruption.

- Parallel Runs: This is the gold standard. For a defined period, run both the old and new systems in parallel with identical production inputs. Meticulously compare the outputs to identify discrepancies in business logic, not just data format.

- Third-Party Audits: In regulated industries like finance or insurance, this is often mandatory. An external firm performs an independent audit of the migrated data and processes, providing an unbiased validation.
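A minimal Java sketch combining a record count, a hash total on the amount field, and a SHA-256 digest over canonicalized key|amount lines. The Txn shape is a hypothetical stand-in for rows extracted from the source and target; in practice each side would compute its totals independently and only the results would be compared:

```java
import java.math.BigDecimal;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class ControlTotals {

    /** Hypothetical minimal record shape: a business key and a monetary amount. */
    record Txn(String accountId, BigDecimal amount) {}

    final long count;             // record count
    final BigDecimal amountTotal; // hash total over the amount field
    final byte[] digest;          // SHA-256 over sorted, canonicalized key|amount lines

    ControlTotals(List<Txn> txns) {
        List<String> canonical = new ArrayList<>();
        BigDecimal total = BigDecimal.ZERO;
        for (Txn t : txns) {
            total = total.add(t.amount());
            // Normalize scale so 10.50 (source) and 10.5 (target) produce the same text.
            canonical.add(t.accountId() + "|" + t.amount().stripTrailingZeros().toPlainString());
        }
        // Sort so the digest does not depend on the order in which each system returns rows.
        Collections.sort(canonical);
        MessageDigest sha = sha256();
        for (String line : canonical) {
            sha.update(line.getBytes(StandardCharsets.UTF_8));
            sha.update((byte) '\n');
        }
        this.count = txns.size();
        this.amountTotal = total;
        this.digest = sha.digest();
    }

    /** Source and target agree only if all three control values agree. */
    boolean matches(ControlTotals other) {
        return count == other.count
                && amountTotal.compareTo(other.amountTotal) == 0 // compareTo ignores scale differences
                && Arrays.equals(digest, other.digest);
    }

    private static MessageDigest sha256() {
        try {
            return MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform implementation is required to support SHA-256.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        List<Txn> source = List.of(new Txn("A1", new BigDecimal("10.50")), new Txn("A2", new BigDecimal("-3.25")));
        List<Txn> target = List.of(new Txn("A2", new BigDecimal("-3.25")), new Txn("A1", new BigDecimal("10.5")));
        System.out.println(new ControlTotals(source).matches(new ControlTotals(target))); // true
    }
}
```

Swapping amounts between two accounts leaves both the count and the hash total unchanged; only the digest catches it, which is why the sketch computes all three.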

Never trust a vendor’s internal validation report without independent verification. A parallel run is the only way to ensure data integrity before decommissioning the mainframe.

Can We Really Do This Without Taking the Whole Application Down for a Weekend?

Yes, but it requires moving away from the “big bang” cutover approach. Taking a critical application offline for an extended period introduces unacceptable business risk.

The modern method is to use Change Data Capture (CDC). CDC tools monitor the mainframe database and replicate every change to the new system in near real time. This allows the initial bulk data load to be performed weeks in advance, while the CDC process keeps the target system synchronized. At cutover, once writes to the mainframe have stopped and the final changes have been applied, the outage shrinks to the few minutes needed to redirect traffic, minimizing business disruption.
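A toy Java sketch of the CDC pattern, assuming each change event carries a monotonically increasing sequence number from the source’s log. Real CDC products use their own log positions and event formats; nothing here models a specific tool, and the ChangeEvent shape is hypothetical. The bulk load is represented by a snapshot taken as of a known sequence number; events at or below it are skipped, which also makes replaying a batch harmless:

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CdcApplier {
    enum Op { INSERT, UPDATE, DELETE }

    /** Hypothetical change event: seq increases monotonically in source-log order. */
    record ChangeEvent(long seq, Op op, String key, String value) {}

    private final Map<String, String> target;
    private long lastAppliedSeq;

    /** Starts from the bulk-loaded snapshot, which reflects all changes up to snapshotSeq. */
    CdcApplier(Map<String, String> snapshot, long snapshotSeq) {
        this.target = new HashMap<>(snapshot);
        this.lastAppliedSeq = snapshotSeq;
    }

    /** Applies events in order, skipping any already reflected in the target. */
    void apply(List<ChangeEvent> events) {
        for (ChangeEvent e : events) {
            if (e.seq() <= lastAppliedSeq) {
                continue; // already in the snapshot or applied earlier: replay is a no-op
            }
            switch (e.op()) {
                case INSERT:
                case UPDATE:
                    target.put(e.key(), e.value());
                    break;
                case DELETE:
                    target.remove(e.key());
                    break;
            }
            lastAppliedSeq = e.seq();
        }
    }

    Map<String, String> target() { return target; }

    long lastAppliedSeq() { return lastAppliedSeq; }

    public static void main(String[] args) {
        CdcApplier applier = new CdcApplier(Map.of("ACCT1", "100.00"), 10);
        applier.apply(List.of(
                new ChangeEvent(11, Op.UPDATE, "ACCT1", "150.00"),
                new ChangeEvent(12, Op.INSERT, "ACCT2", "20.00")));
        System.out.println(applier.target().get("ACCT1") + " " + applier.lastAppliedSeq()); // 150.00 12
    }
}
```

The gap between the source’s latest sequence number and lastAppliedSeq is the replication lag; the cutover window is roughly the time needed to drive that lag to zero after writes to the mainframe stop.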