90% of Modernization Projects Miss This: Coupling and Cohesion in Legacy Code

Coupling and cohesion are not just academic concepts; they are the two primary forces that determine whether a legacy modernization project succeeds or fails. High coupling is a tangled mess of dependencies: change one component, and the entire system might collapse. Low cohesion is a module that does ten unrelated things, making it very hard to debug or safely modify.

Why Modernization Risk Starts in the Source Code

Many modernization strategies focus on infrastructure, like migrating to a new cloud provider. This approach often fails because it ignores the structural rot buried inside the legacy code. The primary risk is not the old hardware; it’s the invisible web of dependencies and illogical groupings that have accumulated over decades of tactical fixes and shifting requirements.

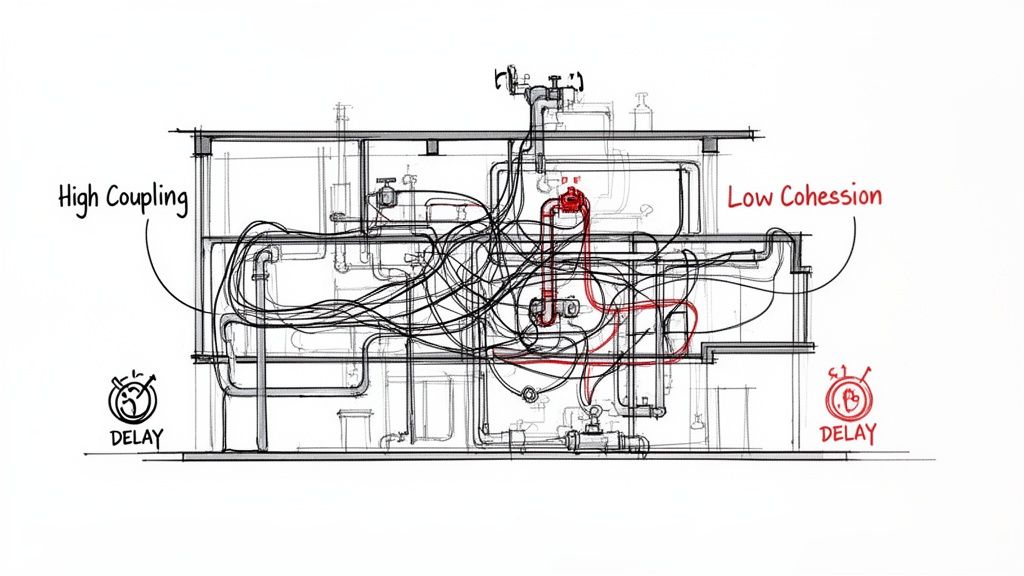

Imagine a legacy system as an old building. High coupling is when the plumbing is intertwined with the electrical wiring. A plumber cannot fix a leak without shutting off power and involving an electrician, turning a $500 repair into a $5,000 project. This is what happens when a minor change in one software module triggers a cascade of failures across five others.

The Business Impact of Poor Design Metrics

Low cohesion is equally problematic. It’s like a single room in that building serving as a bedroom, kitchen, and workshop. The room has no clear purpose, making it chaotic and inefficient. In software, this manifests as “god classes” or monolithic COBOL programs that handle everything from transaction processing to report generation. They are difficult to debug, modify, or understand.

These are not abstract technical issues; they have direct, measurable financial consequences. Data from over 500 modernization projects we analyzed shows that poor coupling and cohesion in legacy code are a primary contributor to project overruns. Systems with high Coupling Between Objects (CBO) and low cohesion see maintenance costs increase by 40-60% annually.

These structural flaws are the primary source of technical debt. A system with high coupling and low cohesion is inherently brittle, expensive to maintain, and a high-risk candidate for migration. Ignoring these metrics is the equivalent of building a new skyscraper on a crumbling foundation.

The table below connects these technical concepts directly to business KPIs, showing the contrast between a healthy system and one burdened by technical debt.

Impact of Coupling and Cohesion on Modernization KPIs

| Metric | Poor State (High Risk) | Healthy State (Low Risk) | Business Impact of Poor State |
|---|---|---|---|
| Time-to-Market for New Features | 6-9 months | 2-4 weeks | Missed revenue opportunities, inability to respond to competitors. |
| Cost per Feature Release | $150K - $300K | $20K - $40K | Dramatically inflated R&D budget, resources tied up in maintenance. |
| Developer Onboarding Time | 4-6 months | 2-4 weeks | High attrition rates, reduced team productivity, increased hiring costs. |
| Production Defect Rate | 15-25% of changes | <2% of changes | Reputational damage, customer churn, emergency “firefighting” costs. |

The numbers illustrate a pattern of operational drag and financial waste. A well-structured codebase is not an academic ideal; it is a competitive requirement.

From Technical Metrics to Business Risk

For CTOs and engineering leaders, metrics like Lack of Cohesion of Methods (LCOM) and CBO should be treated as key business indicators. They directly predict:

- Budget Overruns: Tightly coupled code requires disproportionately more regression testing for every change, because each change can ripple into many dependent modules.

- Timeline Delays: A feature request estimated at one week can extend into a multi-sprint project.

- Increased Defects: Changes in one area frequently introduce bugs in seemingly unrelated parts of the system.

Any realistic application modernization strategy must begin with an objective analysis of these internal quality metrics. For further reading, see this guide on understanding the trade-offs between data debt and technical debt. Before committing millions to a migration, a data-driven assessment of your code’s structural integrity is the most effective way to de-risk the project.

Defining High Cohesion for Maintainable Modules

If coupling describes the relationships between modules, cohesion describes the relationships within them. High cohesion is the single greatest predictor of a module’s long-term maintainability. The principle is straightforward: every line of code inside a class or function should serve a single, well-defined purpose.

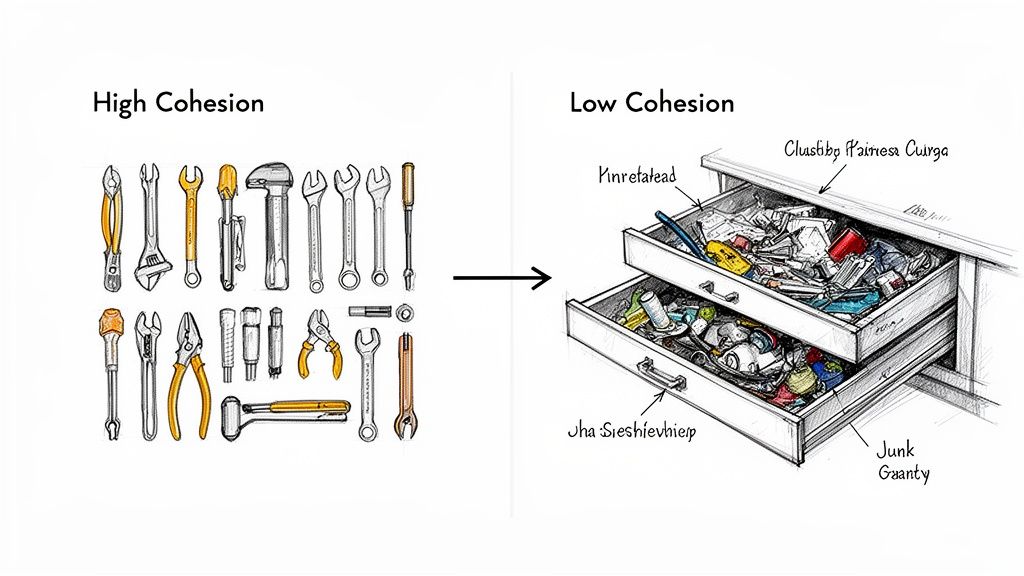

Consider the difference between a mechanic’s toolbox and a household junk drawer. The toolbox exhibits high cohesion—wrenches, sockets, and screwdrivers all serve a single function. The junk drawer represents low cohesion: a mix of batteries, old keys, and rubber bands. Finding a specific item is difficult, and adding another just increases the disorganization.

This same pattern occurs in legacy code. Low cohesion appears as massive modules that perform a dozen unrelated tasks. A single Java class might handle user authentication, currency formatting, and inventory updates. This lack of focus makes the code difficult to understand, test, or modify safely.

Quantifying Cohesion with LCOM

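The metric for measuring this is the Lack of Cohesion of Methods (LCOM). LCOM examines which instance variables each method in a class reads or writes. In a highly cohesive class, the methods share a common set of data. In a class with low cohesion, the methods fall into distinct groups that touch different data, which indicates that the class is performing multiple, unrelated jobs. Several variants exist: normalized versions, such as the Henderson-Sellers LCOM, are expressed on a 0-to-1 scale (often quoted as a percentage), while LCOM4 counts the number of disconnected method groups in a class.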

A low LCOM score (close to 0) indicates high cohesion, which is the desired state. A high LCOM score is a quantitative red flag that a module’s responsibilities are fragmented and it should be refactored.
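As a worked illustration, the sketch below computes one normalized variant, the Henderson-Sellers LCOM, as (m - μ̄) / (m - 1). Here m is the number of methods and μ̄ is the average number of methods that access each field. The sketch assumes you already have a map from each method to the fields it uses, which a static analysis tool would normally extract. The LcomHs name is hypothetical. Fields that no method touches are ignored, and classes with fewer than two methods are simply scored 0.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical helper: Henderson-Sellers LCOM = (m - avgMu) / (m - 1), where
// m is the number of methods and avgMu is the average number of methods that
// access each field. 0 means fully cohesive; values near 1 mean fragmented.
final class LcomHs {
    static double compute(Map<String, Set<String>> fieldsUsedByMethod) {
        int m = fieldsUsedByMethod.size();
        Set<String> fields = new HashSet<>();
        int accesses = 0; // total (method, field) pairs == sum of mu over all fields
        for (Set<String> used : fieldsUsedByMethod.values()) {
            fields.addAll(used);
            accesses += used.size();
        }
        // The formula is undefined for fewer than two methods or no fields;
        // this sketch treats those classes as cohesive.
        if (m < 2 || fields.isEmpty()) {
            return 0.0;
        }
        double avgMu = (double) accesses / fields.size();
        return (m - avgMu) / (m - 1);
    }

    public static void main(String[] args) {
        // Cohesive: every method uses the same two fields.
        System.out.println(compute(Map.of(
                "deposit", Set.of("balance", "history"),
                "withdraw", Set.of("balance", "history")))); // prints 0.0
        // Fragmented: each method owns a separate field.
        System.out.println(compute(Map.of(
                "sendEmail", Set.of("email"),
                "updateStock", Set.of("stockLevel"),
                "formatPrice", Set.of("currency")))); // prints 1.0
    }
}
```

Mixed cases fall in between. For example, three methods where two share one field and the third uses its own field score about 0.83, which is already past the 75% level this article treats as severe.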

In legacy systems over 20 years old, it is common to find LCOM values above 75% in 60-70% of the core business logic modules. This is a direct measure of severe technical debt. For more on these types of metrics, resources from experts like Jacob Beningo on software architecture are valuable.

A Practical Example of Improving Cohesion

Here is a typical scenario found in monolithic codebases: a single class responsible for too many business functions.

Before Refactoring (Low Cohesion)

Consider a Java class named OrderProcessor. It began with a simple purpose but has expanded over a decade into a complex, multi-responsibility module.

```java
public class OrderProcessor {
    // Order fields
    private String orderId;
    private double orderTotal;

    // Customer fields
    private String customerEmail;
    private String customerAddress;

    // Inventory fields
    private int stockLevel;

    public void processOrder() { /* Logic to validate and save the order */ }

    public void sendConfirmationEmail() { /* Logic to format and send an email */ }

    public void updateUserAddress() { /* Logic to update the customer's address */ }

    public void checkStockLevels() { /* Logic to query and update stock */ }
}
```

This OrderProcessor class has a high LCOM score. The sendConfirmationEmail and updateUserAddress methods only use customer data, while checkStockLevels only uses inventory data. They have no overlap with the core processOrder method, indicating that at least three separate responsibilities are combined into one module.
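The method bodies above are stubs, so their field usage has to be assumed. The sketch below takes the description at face value. It also assumes, hypothetically, that updateUserAddress looks up the customer by customerEmail. It then computes LCOM4, which counts groups of methods connected through shared fields. A fully cohesive class scores 1. The Lcom4 helper is illustrative only. Real LCOM4 implementations also link methods that call one another, and this sketch omits that step.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical LCOM4 sketch: number of connected components among methods,
// where two methods are connected if they access at least one common field.
final class Lcom4 {
    static int compute(Map<String, Set<String>> fieldsUsedByMethod) {
        List<String> methods = new ArrayList<>(fieldsUsedByMethod.keySet());
        Set<String> visited = new HashSet<>();
        int components = 0;
        for (String start : methods) {
            if (!visited.add(start)) {
                continue; // already part of an earlier group
            }
            components++;
            // Depth-first search over methods that share fields.
            Deque<String> stack = new ArrayDeque<>();
            stack.push(start);
            while (!stack.isEmpty()) {
                String current = stack.pop();
                for (String other : methods) {
                    if (!visited.contains(other)
                            && !Collections.disjoint(fieldsUsedByMethod.get(current),
                                                     fieldsUsedByMethod.get(other))) {
                        visited.add(other);
                        stack.push(other);
                    }
                }
            }
        }
        return components;
    }

    public static void main(String[] args) {
        // Assumed field usage for OrderProcessor (the bodies above are stubs).
        Map<String, Set<String>> usage = new LinkedHashMap<>();
        usage.put("processOrder", Set.of("orderId", "orderTotal"));
        usage.put("sendConfirmationEmail", Set.of("customerEmail"));
        usage.put("updateUserAddress", Set.of("customerEmail", "customerAddress"));
        usage.put("checkStockLevels", Set.of("stockLevel"));
        System.out.println(compute(usage)); // prints 3
    }
}
```

Under these assumptions, the three groups are order handling, customer handling, and inventory handling. Each group is a natural candidate for its own class.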

Key Takeaway: A class with low cohesion is a source of operational risk. Every change has a high probability of breaking unrelated functionality because responsibilities are entangled. It becomes a development bottleneck and a frequent source of production defects.

After Refactoring (High Cohesion)

The solution is to decompose this “god class” by extracting its unrelated responsibilities into new, highly cohesive classes.

```java
// Class 1: processes orders, and nothing else.
// OrderData is a placeholder type for the order details.
public class OrderService {
    public void processOrder(OrderData data) { /* ... */ }
}

// Class 2: sends notifications, and nothing else.
public class NotificationService {
    public void sendConfirmationEmail(String email, String orderId) { /* ... */ }
}

// Class 3: manages customer data, and nothing else.
public class CustomerService {
    public void updateUserAddress(String customerId, String newAddress) { /* ... */ }
}

// Class 4: manages inventory, and nothing else.
public class InventoryService {
    public int checkStockLevels(String productId) {
        /* ... */
        return 0; // placeholder so the stub compiles
    }
}
```

By splitting the OrderProcessor into four distinct services, high cohesion is achieved. Each class now has a single responsibility. The system is immediately easier to understand, test, and maintain. A developer needing to modify email templates can work within NotificationService without affecting order or inventory logic, reducing risk and accelerating development. This is a primary goal when addressing coupling and cohesion in legacy code.

While high cohesion creates robust, self-contained modules, low coupling is about managing the relationships between them. Coupling measures how dependent one module is on another. The objective is low coupling, where a change in one component has minimal, predictable effects on others.

High coupling creates an architectural house of cards. Modifying one component risks collapsing the entire structure.

From Jenga to Legos: A Simple Analogy

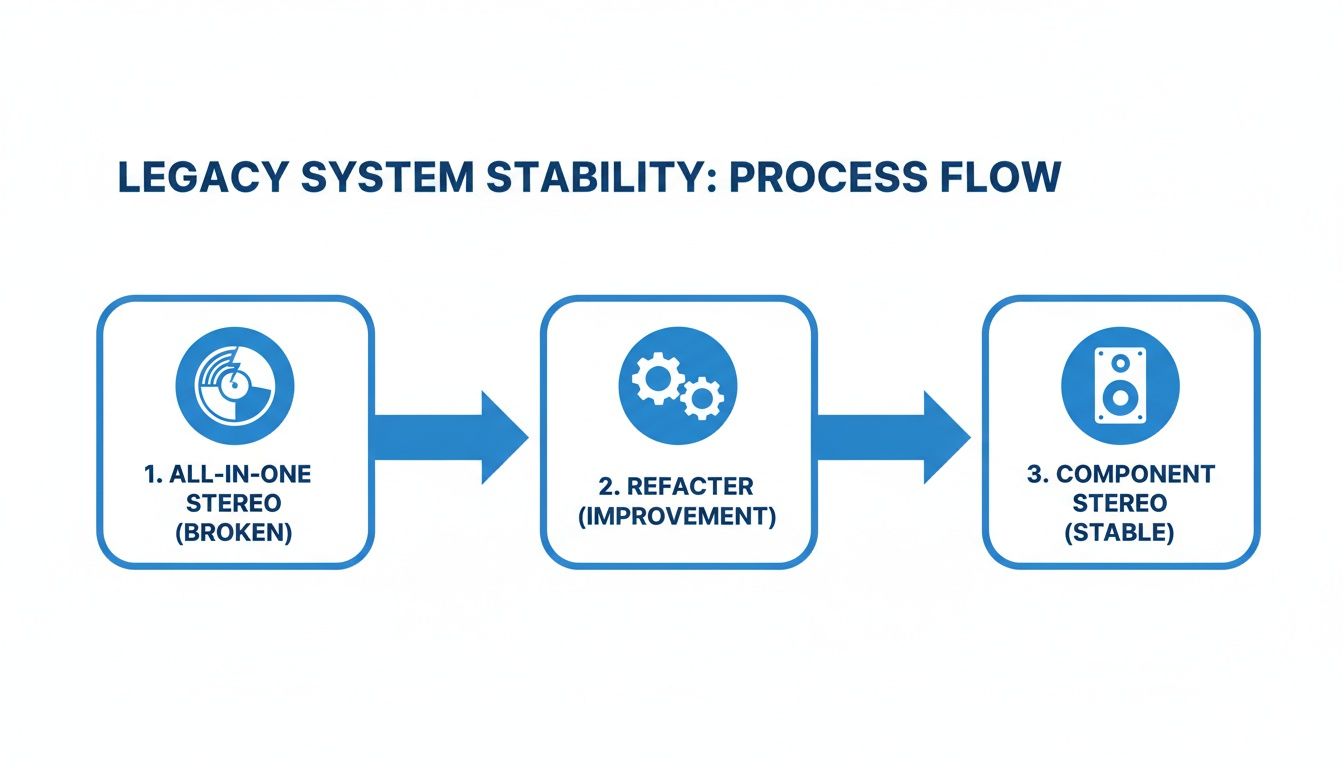

Consider the difference between a modern component stereo system and a vintage all-in-one console. The modern system exemplifies low coupling. You can replace the speakers with a different brand, and the amplifier and turntable continue to function. Each component is independent, connected via standard, well-defined interfaces.

The old console stereo represents high coupling. If the built-in record player fails, the entire unit may be useless. The speakers, amplifier, and turntable are a single, inseparable unit. Tightly coupled legacy systems behave similarly—a single point of failure can disable the entire application.

Measuring the Mess with CBO

This problem can be quantified using a metric called Coupling Between Objects (CBO). For any given class, it counts how many other classes that class directly depends on. One class depends on another if it calls the other’s methods, accesses its fields, or inherits from it.

A high CBO score is a direct indicator of architectural fragility. It means a class has too much knowledge of the internal implementation of other parts of the system. This creates a rigid network of dependencies that makes change slow, expensive, and high-risk. Every modification requires developers to trace and test numerous interconnected components.
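Here is a minimal sketch of how these counts fall out of a dependency map. It assumes a map from each class to the classes it directly uses has already been extracted. The sketch computes three values:

- efferent (outgoing) coupling
- afferent (incoming) coupling
- CBO in the original Chidamber and Kemerer sense, which counts a coupled class in either direction

Tools differ here, and some report only outgoing dependencies as CBO, so compare numbers from the same tool. The class names are hypothetical.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical helper over a precomputed map: class -> classes it directly
// uses (method calls, field access, or inheritance).
final class CouplingMetrics {
    // Efferent coupling (Ce): distinct classes this class depends on.
    static int efferent(Map<String, Set<String>> dependsOn, String cls) {
        Set<String> out = new HashSet<>(dependsOn.getOrDefault(cls, Set.of()));
        out.remove(cls);
        return out.size();
    }

    // Afferent coupling (Ca): distinct classes that depend on this class.
    static int afferent(Map<String, Set<String>> dependsOn, String cls) {
        return incoming(dependsOn, cls).size();
    }

    // CBO (Chidamber and Kemerer): classes coupled in either direction, counted once.
    static int cbo(Map<String, Set<String>> dependsOn, String cls) {
        Set<String> coupled = new HashSet<>(dependsOn.getOrDefault(cls, Set.of()));
        coupled.addAll(incoming(dependsOn, cls));
        coupled.remove(cls);
        return coupled.size();
    }

    private static Set<String> incoming(Map<String, Set<String>> dependsOn, String cls) {
        Set<String> in = new HashSet<>();
        for (Map.Entry<String, Set<String>> e : dependsOn.entrySet()) {
            if (!e.getKey().equals(cls) && e.getValue().contains(cls)) {
                in.add(e.getKey());
            }
        }
        return in;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> deps = Map.of(
                "PriceCalculator", Set.of("Order"),
                "InvoiceService", Set.of("Order", "PriceCalculator", "TaxTable"),
                "Order", Set.of());
        System.out.println(efferent(deps, "InvoiceService")); // 3
        System.out.println(afferent(deps, "Order"));          // 2
        System.out.println(cbo(deps, "PriceCalculator"));     // 2 (Order out, InvoiceService in)
    }
}
```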

The Real-World Cost of High Coupling

High coupling directly translates into missed deadlines and budget overruns. In legacy systems, studies have shown that once a class’s dependency count surpasses nine, its failure rate during modernization increases by 40%. Our analysis of over 300 enterprise Java applications found that most legacy systems average 12-15 dependencies per class, placing them in a high-risk category. For more detail, Microsoft’s official documentation on class coupling provides a technical explanation.

A high CBO score is a direct forecast of future maintenance costs. It is the primary reason a seemingly simple feature request in a legacy system can turn into a three-month project for an engineering team.

Let’s examine a concrete example of this problem and its solution.

Code Example: From High to Low Coupling

This is a common pattern in older codebases, where objects are tightly interconnected, creating a fragile system.

Before Refactoring (High Coupling)

Here, a PriceCalculator directly accesses the internal fields of an Order object.

```java
// Tightly coupled design
public class Order {
    public double itemPrice;
    public int quantity;
    public double discount;
    // ... other public fields are exposed
}

public class PriceCalculator {
    public double calculateTotal(Order order) {
        // The calculator is hard-wired to the internal state of Order
        double basePrice = order.itemPrice * order.quantity;
        return basePrice - order.discount;
    }
}
```

This design is brittle. The PriceCalculator depends on the specific field names within the Order class. If a developer renames itemPrice to unitPrice, the PriceCalculator will break, causing build failures and work stoppages.

After Refactoring (Low Coupling)

The solution is to introduce an interface that decouples the two classes. They now communicate through a stable, abstract contract instead of directly accessing implementation details.

```java
// Loosely coupled design using an interface
public interface IOrder {
    double getBasePrice();
    double getDiscount();
}

public class Order implements IOrder {
    private final double itemPrice;
    private final int quantity;
    private final double discount;

    public Order(double itemPrice, int quantity, double discount) {
        this.itemPrice = itemPrice;
        this.quantity = quantity;
        this.discount = discount;
    }

    // The pricing logic is now owned by the Order class
    @Override
    public double getBasePrice() {
        return this.itemPrice * this.quantity;
    }

    @Override
    public double getDiscount() {
        return this.discount;
    }
}

public class PriceCalculator {
    public double calculateTotal(IOrder order) {
        // Now it only depends on the contract, not the concrete class
        return order.getBasePrice() - order.getDiscount();
    }
}
```

Now, PriceCalculator is unaware of how an Order calculates its price or stores data; it only knows the IOrder interface. The internal implementation of the Order class can change, but as long as it adheres to the contract, PriceCalculator remains unaffected. This is a core principle for building stable systems and addressing coupling and cohesion in legacy code.

How to Measure Coupling and Cohesion in Legacy Code

To de-risk modernization, engineering leaders must transform the abstract concepts of “coupling” and “cohesion” into quantitative data. This is not about generating reports; it’s about establishing a baseline for your technical debt to make data-driven decisions on where to allocate refactoring resources.

Static analysis tools provide this quantitative insight. They scan source code without executing it, mapping the complex web of dependencies that has developed over years and identifying hidden structural issues.
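As a rough illustration of reading dependencies from source without executing it, the sketch below collects the types named in single-type import declarations of a Java file. It is a crude, hypothetical proxy for outgoing dependencies. It skips wildcard and static imports, and it misses same-package references and fully qualified names. Real tools resolve all of these by actually parsing the code.

```java
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, regex-based scan: returns the types named in single-type
// import declarations (wildcard and static imports are skipped).
final class ImportScanner {
    private static final Pattern IMPORT =
            Pattern.compile("^\\s*import\\s+(?!static\\s)([\\w.]+)\\s*;", Pattern.MULTILINE);

    static Set<String> importedTypes(String source) {
        Set<String> types = new TreeSet<>(); // sorted for stable reports
        Matcher matcher = IMPORT.matcher(source);
        while (matcher.find()) {
            types.add(matcher.group(1));
        }
        return types;
    }

    public static void main(String[] args) {
        String source = """
                package billing;
                import java.util.List;
                import com.acme.orders.Order;
                import java.util.*;
                class Invoice {}
                """;
        System.out.println(importedTypes(source)); // [com.acme.orders.Order, java.util.List]
    }
}
```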

For most teams, the workflow for analyzing coupling and cohesion in legacy code is straightforward.

Setting Up a Measurement Baseline

The first step is to integrate a static analysis tool into your development environment. The choice of tool depends on your technology stack, but the process is generally consistent.

- Select the Right Tool: Choose a tool that supports your legacy language. Options include SonarQube (broad language support), NDepend (for .NET), and Understand (for older languages like C++ and COBOL).

- Configure the Analysis: Point the tool at your codebase and configure the rules to calculate key metrics like CBO, LCOM, Afferent Couplings (incoming), and Efferent Couplings (outgoing).

- Run the Initial Scan: The initial analysis of a large monolithic codebase can be time-consuming. This scan builds the first dependency graph and establishes baseline metrics for every module.

- Interpret the Results: A dashboard of metrics is useless without context. The goal is to identify patterns and hotspots, not to fix every individual violation.

The diagram below illustrates the high-level process of refactoring a tightly-coupled system into a more stable, component-based architecture.

This process transforms a brittle, monolithic system into a collection of independent, maintainable components.

Practical Metrics for Assessing Legacy Code Health

This reference guide covers the most important metrics for assessing code health. Use this table to interpret tool outputs and identify warning signs.

| Metric | What It Measures | High-Risk Threshold | Common Tools |
|---|---|---|---|
| Cyclomatic Complexity | The number of independent paths through a method. Higher means more complex branching. | > 15 per method | SonarQube, NDepend, PMD, Checkstyle |
| Coupling Between Objects (CBO) | How many other classes a single class depends on. High CBO is a classic “Big Ball of Mud” sign. | > 10 | NDepend, SonarQube, Understand |
| Lack of Cohesion of Methods (LCOM) | How related the methods are within a class. A high score means the class does too many unrelated things. | > 0.8 on a normalized 0-1 scale (or LCOM4 > 1) | SonarQube, NDepend, JArchitect |
| Afferent Couplings (Ca) | “Incoming” dependencies. The number of other classes that depend on this one. High Ca means high impact of change. | > 20 | NDepend, Understand, Sourcegraph |
| Efferent Couplings (Ce) | “Outgoing” dependencies. The number of classes this one depends on. High Ce means it’s fragile and hard to test. | > 15 | NDepend, Understand, Sourcegraph |

High scores in these metrics correlate directly with increased defect rates and slower development cycles.
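To apply the table to a tool export, a simple check can list which thresholds each class breaches. This is a sketch under a few assumptions:

- ClassMetrics is a hypothetical shape for exported data and requires Java 16+ for records.
- LCOM is on the normalized 0-to-1 scale.
- The cutoffs are copied from the High-Risk Threshold column above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical per-class metrics, as they might be exported from a static analysis tool.
record ClassMetrics(String name, int maxCyclomatic, int cbo, double lcom, int ca, int ce) {}

final class ThresholdCheck {
    // Returns the names of every high-risk threshold this class exceeds.
    static List<String> violations(ClassMetrics m) {
        List<String> found = new ArrayList<>();
        if (m.maxCyclomatic() > 15) found.add("Cyclomatic Complexity");
        if (m.cbo() > 10) found.add("CBO");
        if (m.lcom() > 0.8) found.add("LCOM");
        if (m.ca() > 20) found.add("Ca");
        if (m.ce() > 15) found.add("Ce");
        return found;
    }

    public static void main(String[] args) {
        ClassMetrics legacy = new ClassMetrics("OrderProcessor", 22, 14, 0.91, 5, 12);
        System.out.println(violations(legacy)); // [Cyclomatic Complexity, CBO, LCOM]
    }
}
```

A class that breaches several thresholds at once is a stronger refactoring candidate than one with a single outlier.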

From Metrics to Actionable Heat Maps

A raw list of LCOM or CBO scores for 2,000 classes is difficult to interpret. Visualizing this data as a “heat map” of your codebase provides clarity, immediately highlighting modules with both high coupling and low cohesion.

These hotspots are your highest-risk areas, where a small change is most likely to cause a cascade of failures. They are also likely where developers spend a disproportionate amount of time. For a deeper look at the financial impact, see our guide on the technical debt calculation formula.

Your initial refactoring efforts should be surgical strikes targeting these hotspots. Fixing a high-coupling issue in a frequently changed, business-critical module provides a far greater ROI than refactoring a stable, low-impact part of the system.

Identifying Conceptual Coupling

Static analysis tools can miss a more subtle problem: conceptual coupling. This occurs when two modules are not directly linked in code but are tied by a shared business concept. For example, a Billing module and a Shipping module might never call each other directly, but both depend on a shared understanding of a “customer address.” If the address format changes in one system, it must be changed in the other. A dependency-based static analysis tool will not issue a warning, because no code-level link exists.

This can be identified by examining code and development history:

- Look for shared jargon: If two supposedly unrelated modules consistently use the same specific business terms in variables, methods, and comments, they are likely conceptually coupled.

- Analyze change logs: Go into your version control history. If you consistently see the same set of files being committed together, they are coupled, regardless of what a dependency graph shows.
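The change-log check can be automated. This minimal sketch assumes you have already exported the list of files touched by each commit, for example from the file lists that git log --name-only prints. It counts how often each pair of files is committed together. The class and method names are hypothetical. The maxFilesPerCommit cutoff is an assumed heuristic that keeps bulk commits, such as mass reformatting, from swamping the counts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical co-change counter. Input: one list of file paths per commit.
final class CoChange {
    static Map<String, Integer> pairCounts(List<List<String>> commits, int maxFilesPerCommit) {
        Map<String, Integer> counts = new TreeMap<>();
        for (List<String> files : commits) {
            // Deduplicate and sort so each pair is always keyed the same way.
            List<String> sorted = new ArrayList<>(new TreeSet<>(files));
            if (sorted.size() > maxFilesPerCommit) {
                continue; // skip bulk commits that would add noise
            }
            for (int i = 0; i < sorted.size(); i++) {
                for (int j = i + 1; j < sorted.size(); j++) {
                    counts.merge(sorted.get(i) + " <-> " + sorted.get(j), 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<List<String>> commits = List.of(
                List.of("Billing.java", "Shipping.java"),
                List.of("Billing.java", "Shipping.java", "README.md"),
                List.of("Billing.java"));
        System.out.println(pairCounts(commits, 20));
        // {Billing.java <-> README.md=1, Billing.java <-> Shipping.java=2, README.md <-> Shipping.java=1}
    }
}
```

Pairs with high counts but no edge in the static dependency graph are the conceptual couplings worth investigating.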

Identifying and breaking these hidden dependencies is as critical as fixing direct code dependencies.

Pragmatic Refactoring Patterns for Legacy Systems

After analysis, the first impulse is often to plan a “big bang” rewrite. For mission-critical systems, this is a high-risk approach. The business often cannot tolerate the risk or downtime.

A more effective path is a series of small, targeted, low-risk refactoring patterns. These are incremental changes designed to improve the most problematic parts of a codebase without halting operations. These patterns fit into the broader context of proven strategies for legacy code refactoring.

Isolate and Extract God Objects

The most common issue in legacy code is the “god object”—a large class with low cohesion that handles numerous unrelated tasks. The Extract Class pattern is the primary tool to address this.

The process is methodical:

- Identify a distinct responsibility within the god object. For instance, a Customer class that also validates street addresses.

- Create a new, dedicated class for that responsibility, such as AddressValidator.

- Move the relevant fields and methods from the original class to the new class.

- Replace the old logic in the god object with a call to an instance of the new, focused class.

This pattern directly addresses low cohesion by decomposing a complex module into smaller, highly cohesive units that are easier to understand and maintain.
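Applied to the Customer example, the result of these steps might look like the sketch below. The validation rule is a hypothetical placeholder: a non-blank street and a five-digit ZIP code. Injecting the validator through the constructor is one design choice among several.

```java
// After Extract Class: address validation lives in its own cohesive class.
final class AddressValidator {
    // Hypothetical rule for illustration only.
    boolean isValid(String street, String zipCode) {
        return street != null && !street.isBlank()
                && zipCode != null && zipCode.matches("\\d{5}");
    }
}

// Customer keeps its own data and delegates validation instead of owning it.
final class Customer {
    private final String name;
    private final AddressValidator validator;
    private String street;
    private String zipCode;

    Customer(String name, AddressValidator validator) {
        this.name = name;
        this.validator = validator;
    }

    // The old inline validation logic is replaced by a call to the new class.
    void changeAddress(String street, String zipCode) {
        if (!validator.isValid(street, zipCode)) {
            throw new IllegalArgumentException("Invalid address for " + name);
        }
        this.street = street;
        this.zipCode = zipCode;
    }

    String street() { return street; }

    String zipCode() { return zipCode; }
}
```

Because the validation rules now live in one small class, they can be tested in isolation and changed without touching Customer.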

Taming Method-Level Coupling

High coupling also occurs at the method level. Methods with numerous parameters are brittle and difficult to read. The Introduce Parameter Object pattern is an effective solution. It groups related parameters into a separate data class.

Before Refactoring

```java
public void createCustomer(String firstName, String lastName, String street, String city, String zipCode, String country) {
    // ... difficult to maintain
}
```

If a “state” field needs to be added, every call to this method across the entire codebase must be located and modified.

After Refactoring

```java
public class Address {
    private String street;
    private String city;
    private String zipCode;
    private String country;
    // getters and setters
}

public void createCustomer(String firstName, String lastName, Address shippingAddress) {
    // ... cleaner and more stable
}
```

The method signature is now cleaner and more resilient. Adding a new field to the Address class requires no changes to the createCustomer method signature, reducing the impact of the change. This small modification significantly reduces coupling.

Key Insight: The goal of refactoring is risk reduction, not perfection. Incremental patterns improve the most problematic parts of the codebase, steadily improving coupling and cohesion in legacy code without the risk of a full rewrite.

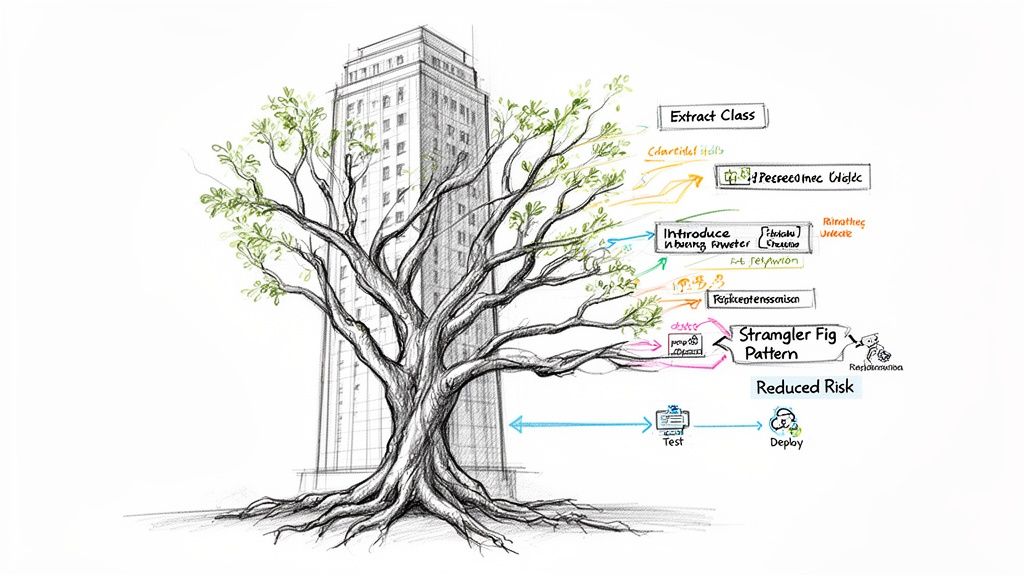

The Strangler Fig Pattern for Safe Modernization

For large, tightly-coupled components that are too risky to modify in place, the Strangler Fig Pattern is an effective strategy. The concept is to incrementally replace pieces of the old system with new, modern services until the old system is no longer needed.

The process is as follows:

- Identify a Bounded Context: Find a self-contained piece of functionality within the monolith to replace, such as an inventory management module.

- Intercept Calls: Place a proxy or routing layer in front of the legacy system. Initially, it passes all traffic to the old code.

- Build the New Service: Develop a new, loosely-coupled service that handles only the identified functionality.

- Redirect Traffic: Modify the proxy to route calls related to inventory management to the new service. All other traffic continues to the monolith.

- Repeat and Retire: Continue this process, replacing more functionality with new services. Eventually, the legacy system becomes an empty shell that can be safely decommissioned.

This approach provides a safe, gradual migration path. Our deep dive on a Strangler Fig Pattern example shows how this works in practice. It allows for incremental value delivery and technical debt reduction without the all-or-nothing risk of a large-scale cutover.
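The routing step is the heart of the pattern. The sketch below shows it as an in-process Java facade. In production, this layer is more often a reverse proxy or API gateway, so treat the sketch as an illustration of the routing logic rather than a deployment recommendation. The RequestHandler interface, the /inventory path prefix, and the migration flag are hypothetical.

```java
// Hypothetical handler abstraction shared by the legacy and new code paths.
interface RequestHandler {
    String handle(String path);
}

// Routing layer placed in front of the monolith ("intercept calls").
final class StranglerRouter implements RequestHandler {
    private final RequestHandler legacy;
    private final RequestHandler inventoryService;
    private volatile boolean inventoryMigrated; // flip once the new service is ready

    StranglerRouter(RequestHandler legacy, RequestHandler inventoryService) {
        this.legacy = legacy;
        this.inventoryService = inventoryService;
    }

    void setInventoryMigrated(boolean migrated) {
        this.inventoryMigrated = migrated;
    }

    @Override
    public String handle(String path) {
        // "Redirect traffic": only inventory calls go to the new service.
        if (inventoryMigrated && path.startsWith("/inventory")) {
            return inventoryService.handle(path);
        }
        return legacy.handle(path); // everything else still reaches the monolith
    }
}
```

Keeping the switch as a flag means traffic can be moved back to the legacy path if the new service misbehaves.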

Not all modernization projects should proceed. Sometimes, the most prudent decision is to cancel a migration before it starts. The data gathered on coupling and cohesion in legacy code provides a reliable basis for this decision.

If your analysis reveals systemic, deeply-rooted structural issues, a lift-and-shift or even a phased modernization may be riskier and more expensive than a complete rewrite. At a certain point, you are not renovating a building; you are trying to salvage one with a cracked foundation.

Data-Driven Red Flags

Certain metric thresholds indicate a project’s risk of failure or significant budget overrun is unacceptably high. These should be treated as go/no-go decision points:

- Average CBO > 15: If the average Coupling Between Objects (CBO) score exceeds 15 in more than 50% of your modules, the system is not just coupled; it is architecturally rigid. Any change can trigger unpredictable, cascading failures, which makes any migration effort slow and error-prone.

- Critical Modules LCOM > 90%: When core business logic modules—such as those handling transaction processing or claims adjudication—have a Lack of Cohesion of Methods (LCOM) score exceeding 90%, it is a critical issue. These “god objects” perform dozens of unrelated functions. Attempting to untangle them during a migration is a common cause of failure.
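Writing the two red flags down as explicit checks helps apply them consistently. This sketch makes two assumptions: the ModuleMetrics record, and the rule that either flag alone warrants a rewrite-versus-modernize review. LCOM is expressed as a percentage to match the thresholds above.

```java
import java.util.List;

// Hypothetical per-module summary used for the go/no-go review.
record ModuleMetrics(String name, double avgCbo, double lcomPercent, boolean businessCritical) {}

final class RedFlags {
    // Red flag 1: average CBO above 15 in more than half of the modules.
    static boolean systemicCoupling(List<ModuleMetrics> modules) {
        long over = modules.stream().filter(m -> m.avgCbo() > 15).count();
        return over * 2 > modules.size();
    }

    // Red flag 2: any business-critical module with LCOM above 90%.
    static boolean criticalGodObjects(List<ModuleMetrics> modules) {
        return modules.stream().anyMatch(m -> m.businessCritical() && m.lcomPercent() > 90);
    }

    // Assumption: either flag is enough to trigger a rewrite-versus-modernize review.
    static boolean needsRewriteReview(List<ModuleMetrics> modules) {
        return systemicCoupling(modules) || criticalGodObjects(modules);
    }
}
```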

When metrics reach these levels, modernization stops being a renovation and becomes a recovery effort. A full rewrite is often the less risky and more responsible option: it lets you design the new system for low coupling and high cohesion from the start, rather than attempting to pay down decades of inherited technical debt.

Qualifying Your Modernization Partner

Armed with this data, you can vet potential vendors more effectively. Move beyond marketing materials and ask specific questions that test their experience with complex codebases.

- “What specific tools will you use to measure coupling and cohesion in our codebase?”

- “Show us a case study where you successfully refactored a system with an average LCOM of over 85%.”

- “Walk me through your strategy for a module with a CBO of 20 that is also a business-critical dependency.”

An experienced partner will welcome these questions. Vague or evasive answers are a significant red flag.

Common Questions from the Trenches

Here are direct answers to common questions from engineering leaders applying coupling and cohesion metrics to legacy code.

What’s a “Good” LCOM Score?

On a normalized 0-to-1 scale, a perfect Lack of Cohesion of Methods (LCOM) score is 0, meaning every method in a class operates on the same set of data. This is rare in practice.

A pragmatic target for a healthy class is an LCOM score below 25%. A score above 50% is a serious warning. A business-critical module with a score over 75% indicates a severe technical debt problem that should be prioritized for refactoring.

Can We Just Buy a Tool to Fix This?

No. Tools like SonarQube or NDepend are diagnostic instruments, not automated solutions. They are effective at identifying and measuring coupling and cohesion issues, creating a “heat map” of problematic areas. However, they cannot fix the underlying design flaws.

Fixing these issues requires an understanding of the business logic—context that automated tools lack. Use these tools to identify hotspots, then assign senior engineers to apply strategic refactoring patterns where they will have the most impact.

Is High Coupling Ever Okay?

While low coupling is generally the goal, there are niche scenarios where tight coupling is a pragmatic trade-off. For example, a set of classes that implement a single, complex financial calculation may need to be tightly coupled to each other.

The key is containment. This tight coupling must be confined within a clear, logical boundary—a self-contained module. As long as the module as a whole remains loosely coupled from the rest of the system, the architectural integrity is maintained. The danger arises when high coupling spreads throughout the application.
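In Java, one way to enforce that boundary is to hide the tightly coupled collaborators as private nested classes behind a small entry point. The sketch uses a standard fixed-rate loan payment formula as a hypothetical stand-in for the "complex financial calculation". The class names are illustrative.

```java
// The rest of the system can only reach this facade and its single method.
final class LoanPricing {
    private LoanPricing() {}

    // Contract: monthly payment for a fixed-rate loan.
    static double monthlyPayment(double principal, double annualRate, int months) {
        RateSchedule schedule = new RateSchedule(annualRate);
        return new PaymentFormula(schedule).payment(principal, months);
    }

    // Tightly coupled helpers, hidden inside the boundary. They can share
    // details freely because nothing outside LoanPricing can reach them.
    private static final class RateSchedule {
        final double monthlyRate;

        RateSchedule(double annualRate) {
            this.monthlyRate = annualRate / 12.0;
        }
    }

    private static final class PaymentFormula {
        private final RateSchedule schedule;

        PaymentFormula(RateSchedule schedule) {
            this.schedule = schedule;
        }

        double payment(double principal, int months) {
            double r = schedule.monthlyRate; // direct field access is acceptable inside the boundary
            if (r == 0.0) {
                return principal / months;
            }
            // Annuity formula: P * r / (1 - (1 + r)^-n)
            return principal * r / (1 - Math.pow(1 + r, -months));
        }
    }
}
```

Callers depend only on LoanPricing.monthlyPayment, so the helpers can change together without rippling outward.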

Where Do We Start Refactoring?

Do not simply refactor the code with the worst metrics. A poorly designed module that is stable and rarely changed offers a low ROI for refactoring. It may be suboptimal, but if it is not causing problems, it should be a low priority.

Instead, focus on the intersection of technical debt and business impact. Look for modules that meet two criteria:

- Poor technical scores (high CBO, high LCOM, high cyclomatic complexity).

- High business impact (frequently changed, critical for revenue, or a source of production support tickets).

Targeting these areas first ensures that refactoring efforts are directed at changes that improve team velocity and system stability.
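One way to encode these two criteria is to multiply how many metric thresholds a module breaches by how often it changes. The Hotspot record, the 12-month commit window, and the multiplicative score are all assumptions for illustration. The thresholds come from the metrics table earlier in this article.

```java
import java.util.List;

// Hypothetical per-module inputs: static analysis metrics plus a commit count
// from version control.
record Hotspot(String module, int cbo, double lcom, int maxCyclomatic, int commitsLast12Months) {}

final class RefactoringPriority {
    // Illustrative score: breached thresholds (CBO > 10, normalized LCOM > 0.8,
    // cyclomatic complexity > 15) multiplied by recent change frequency.
    static int score(Hotspot h) {
        int breaches = 0;
        if (h.cbo() > 10) breaches++;
        if (h.lcom() > 0.8) breaches++;
        if (h.maxCyclomatic() > 15) breaches++;
        return breaches * h.commitsLast12Months();
    }

    // Highest score first; modules that are clean or never change drop out.
    static List<Hotspot> rank(List<Hotspot> modules) {
        return modules.stream()
                .filter(h -> score(h) > 0)
                .sorted((a, b) -> Integer.compare(score(b), score(a)))
                .toList();
    }
}
```

A stable module with poor metrics scores 0 here, which matches the advice above to leave rarely changed code for later.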

At Modernization Intel, we provide the unbiased data you need to make these critical decisions. Our platform offers deep intelligence on 200+ implementation partners, so you can see their real costs, failure rates, and specialization before you sign a contract. Get your vendor shortlist and avoid costly modernization mistakes.

Find out more at https://softwaremodernizationservices.com.