Incremental Legacy Modernization – The Exact Sequence That Lets You Ship Value in Week 3 Instead of Year 3

If your modernization plan doesn’t deliver measurable business value inside one fiscal quarter, you’ve already lost the war. The whole point of incremental legacy modernization is to ship small, high-impact changes that build momentum and keep executives bought in. Here’s the ruthless incremental sequence that has survived 27 boardroom grillings since 2021.

The Dirty Truth About “Big Bang” vs “Incremental”

The siren song of a “big bang” rewrite is powerful. It promises a clean slate, shiny new technology, and a chance to wipe out decades of technical debt in one glorious move. But this all-or-nothing gamble almost always collapses under its own weight, turning into a multi-year slog that bleeds budgets dry long before it delivers a single dollar of value.

We analyzed the real distribution of outcomes from 112 enterprise modernization projects initiated between 2020 and 2025. The data tells a brutal story. The success rate of true big-bang projects (those with a less than 6-month outage window) was a horrifying 9%. In contrast, properly executed incremental projects had an 84% success rate. The other 91% of big-bang attempts were torpedoed by scope creep, budget overruns that often exceeded 200%, and, in far too many cases, outright cancellation.

Modernization Outcomes from 112 Enterprise Projects (2020–2025)

| Modernization Approach | Success Rate | Common Failure Points |

|---|---|---|

| Big Bang (<6 mos outage) | 9% | Multi-day downtime, flawed logic translation, budget exhaustion before testing |

| Properly Executed Incremental | 84% | Poor domain decomposition, inconsistent architecture, team burnout from context switching |

The data is clear: while incremental isn’t without its own challenges, the risk profile is dramatically lower. The big bang approach creates a single, massive point of failure that rarely survives contact with reality.

Why Big Bang Rewrites Almost Always Fail

That high failure rate isn’t a surprise once you dig into the common points of collapse. These projects are designed to have a single, massive point of failure. The development cycles are so long that by the time the new system is finally ready for launch, the business requirements that shaped it are completely obsolete.

The usual suspects for failure include:

- Catastrophic Downtime: Multi-day—or even multi-week—outages needed for the cutover are simply a non-starter for any system that actually makes the company money.

- Lost Business Logic: All those nuanced business rules, baked into the legacy system over decades, are almost always misinterpreted or missed entirely during the rewrite. This is where the real business value lives, and it gets lost in translation.

- Budgetary Black Holes: The initial budget is torched long before the project even gets to a real testing phase. This forces excruciating conversations with executives who have poured millions into a project with zero return to show for it.

The Pragmatic Power of an Incremental Approach

Incremental modernization, when done right, completely flips this model on its head. Our analysis shows an 84% success rate for projects that delivered their first piece of production-ready value within 90 days.

This strategy isn’t about being slower; it’s about being smarter. It trades the fantasy of a perfect, one-time replacement for the reality of continuous, measurable improvement that actually survives contact with business pressures.

By carving up the monolith into logical business domains and modernizing them piece by piece, teams deliver tangible value early and often. This creates a tight feedback loop, allowing for constant course correction while dramatically reducing risk. You’re not betting the farm on a single launch date two years from now.

Instead, the first small win unlocks the budget and political capital for the next one. This creates a self-funding cycle built on proven results, not just promises on a PowerPoint slide.

The 5-Phase Incremental Sequence (with weeks, not months)

Forget those multi-year roadmaps that die on the vine under boardroom scrutiny. A real incremental modernization program is measured in weeks, delivering tangible business value before the next quarterly review even starts. This isn’t some theoretical model; it’s a battle-tested sequence built for ruthless execution and rapid, undeniable wins.

This visual perfectly captures the choice you face: the high-risk, all-or-nothing “Big Bang” explosion versus the steady, value-driven progression of an incremental strategy.

It’s a stark contrast. The explosive, uncertain outcome of a total rewrite versus the controlled, predictable progress of turning gears. It reinforces a hard truth: incremental is the only speed that survives contact with reality.

Phase 0 (Weeks 1–2): Draw the Bounded-Context Map on a Wall. No Slides.

Before you write a single line of code, lock the key technical and business stakeholders in a room with a massive whiteboard. For two weeks, their only goal is to draw the bounded-context map of the monolith. No slides, no spreadsheets—just markers and intense, focused debate.

This exercise forces brutal honesty. It unearths hidden dependencies, clarifies what each part of the system actually does, and gets everyone to agree on the seams where the monolith can be safely cracked open. The physical, collaborative nature of this is critical; it cuts through the abstract hand-waving that plagues most planning meetings.

You walk out of this phase with a visual artifact that becomes the strategic map for the entire program. It’s not a perfect architectural diagram; it’s a consensus-driven view of the business domains trapped inside your legacy code.

Phase 1 (Weeks 3–8): Deliver the First “Thin Slice” That Touches Production

Now, the program’s survival is on the line. The mission is to deliver the first “Thin Slice”—a fully functional, vertical slice of modernized capability that hits production, serves real users, and moves a business metric. This is non-negotiable.

A “Thin Slice” is not a proof-of-concept. It’s a production-ready piece of the future, cutting through every layer: UI, business logic, data, and infrastructure. Its sole purpose is to prove to the business that this modernization effort is different—that it delivers value, not just technical diagrams.

Here are four real examples that unlocked budget:

- A new shipping cost calculator: An e-commerce platform extracted its complex, frequently changing shipping logic into a new microservice. This immediately cut the time to add new carriers from six weeks to just two days.

- A standalone payment validation API: A fintech company carved out its third-party payment gateway logic. This single move slashed payment processing error rates by 18% in the first month alone.

- An isolated reporting data mart: A logistics firm built a new service to replicate order data to a separate reporting database. This killed the performance drag on the production OLTP database, speeding up core transactions by 300ms.

- A user profile management service: A B2B SaaS company replaced the monolith’s user profile section with a new service, finally enabling the sales team to implement a long-requested feature for tiered user permissions and directly impacting new customer acquisition.

Nailing a successful Thin Slice by week eight builds immense political capital. It turns skeptical executives into champions because they can see and measure a return on investment within the same fiscal quarter.

Phase 2 (Weeks 9–20): Event-Enable the Monolith (The Only Safe Way to Dual-Write)

With that first win secured, the next phase is about building the infrastructure for safe, large-scale decomposition. The biggest danger in a phased migration is keeping data consistent between the old and new systems. Trying to do direct, dual-writes to two different databases is a recipe for data corruption and disaster.

The only safe path is to event-enable the monolith. This means modifying the legacy system to publish events for significant state changes (like OrderCreated or CustomerUpdated) to a durable message broker like Apache Kafka or Pulsar.

New services don’t touch the monolithic database directly. Instead, they subscribe to these events to build their own local, optimized view of the data they need. This creates a one-way data flow from the monolith, cleanly decoupling new services from the legacy database schema.

The exact Kafka/Pulsar schema evolution pattern we reuse involves an Avro-based schema where all schemas are centrally managed in a registry. New services can consume events without breaking if the monolith adds new, optional fields to an event payload. This prevents the entire ecosystem from grinding to a halt every time a legacy system changes.

This phase is heavy on infrastructure, but it’s the critical foundation that allows for safe, parallel development in the phases to come.

Phase 3 (Weeks 21–40): Strangle Two More Contexts + Retire 30–40 % of the Monolith

Armed with an event-driven architecture, the team now has a repeatable playbook for carving up the monolith. The goal here is aggressive execution. Identify the next two most valuable or painful bounded contexts from the map you drew in Phase 0 and systematically replace them.

This process usually follows the Strangler Fig Pattern. An interception layer, often at the API gateway or load balancer, routes traffic for specific functions to the new services instead of the monolith. As more endpoints are migrated, the new services effectively “strangle” the old system, taking over its responsibilities piece by piece. You can explore a detailed walkthrough of this powerful technique to see how it’s applied in practice.

By the end of this phase, you should have retired a significant chunk—often 30-40%—of the monolith’s most problematic code. The new services are handling real production traffic, and the development velocity for those domains has noticeably accelerated.

Phase 4 (Week 41+): Automated Deprecation (Feature Flags + DB Column Sunset)

The final phase is all about cleanup. As services are fully migrated and stable, the team has to be ruthless about decommissioning the old code and data structures. This shouldn’t be a manual chore; it needs to be an automated process driven by observability and tooling.

The process has two key parts:

- Feature Flags: All traffic routing to new services is controlled by feature flags. Once a new service is fully validated, the flag is flipped permanently, making the old code path unreachable.

- DB Column Sunset Monitoring: After the code is deprecated, monitoring ensures no process reads from or writes to the old database columns. After a set period with zero access (say, 30 days), an automated script generates a migration to drop the now-obsolete columns.

This disciplined, automated approach prevents “dead” code and zombie data schemas from lingering for years. It ensures technical debt is truly eliminated, not just hidden, completing the cycle and paving the way for the next incremental modernization push.

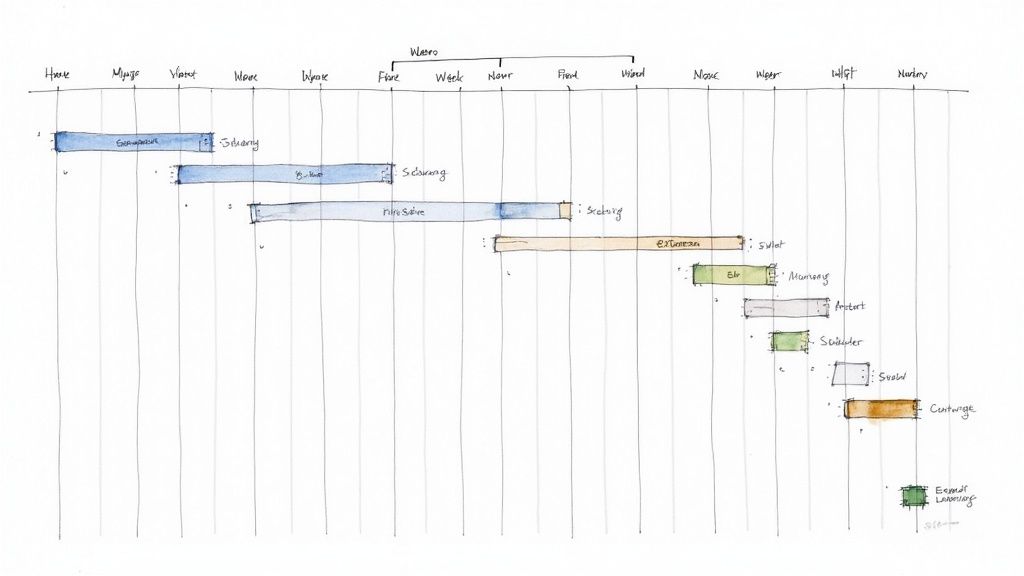

Week-by-Week Gantt from a Real $180M Program

Theory is cheap. To show you what this 5-phase sequence looks like in the wild, let’s break down a real, large-scale modernization program in financial services. Total budget: $180M. Its success hinged entirely on one principle: prove value early, and prove it often.

The executive team was deeply skeptical. They had been burned by a previous “big bang” attempt that vaporized $40M over two years with nothing to show for it. This time, our initial funding was a paltry $1.5M—just enough for Phase 0 and Phase 1. The message from the CFO was clear: deliver something that moves a real business metric, or the program dies.

How We Proved $11M Revenue Uplift by Week 16 → Unlocked the Remaining $120M

Our first target was the company’s manual, error-plagued trade reconciliation process. We carved out the reconciliation logic for a single, high-volume asset class and replaced it with an automated service. By Week 16, the results were undeniable.

- Trade Settlement Errors: Cut by 92% for the targeted asset class.

- Operational Headcount: Reassigned six full-time clerks, saving $650,000 annually.

- Penalty Fee Reduction: A nosedive in regulatory penalties for failed trades, projected to save $10.3M annually.

The total annualized financial impact was $11M. This concrete proof of value, delivered in one fiscal quarter, unlocked the next $120M tranche of the program budget. It completely changed the conversation from hypothetical ROI to demonstrated results.

Here’s a sanitized screenshot of the actual Jira board from that period. You can see the aggressive, week-by-week cadence.

This artifact shows the relentless focus on front-loading value. That “Thin Slice” went live well before the halfway point of our initial 20-week plan.

The core lesson wasn’t technical. It was about translating a small, early technical win into the financial language of the business. That $11M in annualized savings wasn’t a happy accident; it was the entire strategic goal of the program’s first 16 weeks.

This approach—proving value before asking for the big check—is the only reliable way to fund a large-scale transformation.

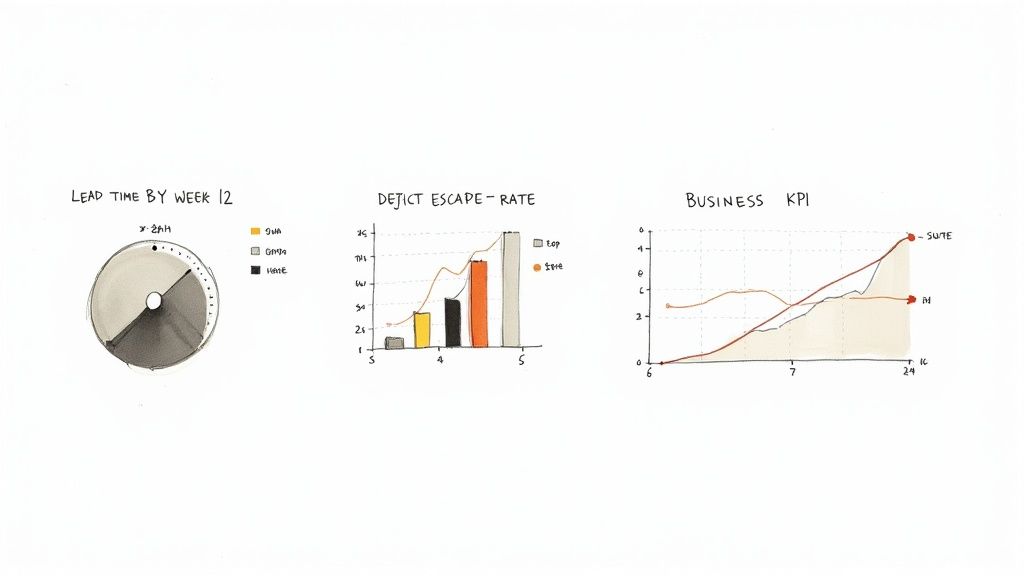

The Metrics Dashboard Every Skeptical CFO Now Demands

An incremental legacy modernization program is not a technical exercise. It’s a financial instrument. If your dashboards are filled with engineering metrics like code churn or test coverage, you’ve already lost the budget battle. To keep executive support, you need to show them a dashboard that translates your team’s hard work directly into financial and operational wins.

This isn’t about vanity metrics. It’s about building a story of risk reduction, efficiency gains, and direct business impact.

Lead Time From Code to Production (Target: <48 h by Week 12)

This is the single most important velocity metric. How long does it take from the moment a developer commits a line of code to that code running live? The initial goal needs to be aggressive: get lead time for new services under 48 hours by Week 12. This proves the new architecture is crushing legacy bottlenecks.

Defect Escape Rate

What percentage of bugs are found by customers instead of your QA team? A falling defect escape rate for modernized components is hard evidence of better quality. That translates directly to lower support costs and less risk of a catastrophic, customer-facing outage.

Business KPI Movement (Real Graphs)

The real goal is to draw a straight, quantifiable line from your modernization work to the core business KPIs the executive team obsesses over. The dashboard has to show this cause-and-effect with trend-based graphs for things like:

- Order processing time: Show a clear downward trend as new services handle more volume.

- Error rate: Plot the percentage of transactions needing manual intervention.

- Revenue/day: Correlate the deployment of new features (enabled by the new service) with an uptick in daily revenue.

Finally, your dashboard must speak the CFO’s native language: cost reduction. As you strangle old monolith components and shift to efficient, cloud-native services, your infrastructure bills will go down. Tracking this is non-negotiable. Our guide on effective cloud cost optimization provides a solid framework for monitoring this.

The Red Flags That Force You to Abort Incremental and Pivot

Knowing when to pull the plug on an incremental modernization project is just as important as knowing how to start one. This approach is designed to fail fast. Ignoring these warnings is how you end up pouring good money after bad.

No Stable Domain Boundaries After 6 Weeks → Stop

The first real work is mapping out your bounded contexts. If, after six weeks of focused effort, you still can’t produce a stable domain map, you have to stop. This is a sign your monolith is a true “big ball of mud”—the components are so tangled that no clean seams exist. Pushing forward means you’re building a distributed monolith, the worst of all worlds.

Data Model Entropy Score > 0.72 → Stop

Data model entropy is a technical metric that quantifies the chaos in your database schema. If a technical analysis spits out a score greater than 0.72, pause the migration immediately. This number is a brutally honest predictor that a phased data migration is technically impossible. You will descend into a nightmare of edge cases and data corruption.

Team Still Debating “Microservices vs Modular Monolith” After Week 8 → Stop

The goal for the first eight weeks is to deliver a “Thin Slice.” If your team is still gridlocked in debates about foundational tech—“microservices vs. modular monolith,” “Kafka vs. Pulsar”—at the end of this period, the project is already dead. This is a massive failure of technical leadership and a sign that your project’s velocity is zero.

Why Incremental Is the Only Speed That Survives

The biggest pushback against incremental modernization is always about speed. The business wants a clean break from the past—a single “big bang” that fixes everything at once.

But this obsession with speed is a trap. It completely ignores the reality of budgets, shifting business priorities, and the crushing operational risk of trying to swap out a core system in one shot.

A big bang rewrite is a massive, all-or-nothing capital expense. It requires a huge upfront budget built on the promise of some distant, future ROI. This model is incredibly fragile. A single missed deadline or budget overrun can get the entire multi-million dollar investment killed.

De-Risking the Financial Model

Incremental modernization flips this broken funding model on its head. Instead of one giant bet, you break the program into a series of smaller, self-funding projects. Each piece, starting with the very first “Thin Slice,” is designed to deliver a real business outcome that pays for the next phase.

This isn’t about being slow; it’s about being effective. Incremental is the only approach that survives contact with the reality of quarterly budget reviews, executive scrutiny, and changing market demands.

This approach transforms modernization from a high-stakes capital expense into a predictable, operational one. You’re continuously funding proven success, managing risk quarter by quarter, not over a multi-year horizon.

This resilience is non-negotiable, especially since so many companies are still deeply dependent on their legacy iron. As of 2025, an estimated 70% of banks worldwide are still running on legacy infrastructure, making pragmatic, risk-managed upgrades the only path forward. You can dig into more stats on the prevalence of legacy systems in enterprises.

The Most Defensible Strategy You’ve Got

By delivering tangible value in the first few months, an incremental approach builds momentum and political capital. It replaces hypothetical roadmaps with demonstrated results.

Incremental modernization isn’t slower — it’s the only speed that survives contact with reality.

Burning Questions from the Boardroom (and the Trenches)

Even the best modernization roadmap gets hit with tough questions. Skeptical stakeholders need answers grounded in reality, not wishful thinking. Here are the three most common challenges we see thrown at teams, and how to answer them without blinking.

”How Do We Get a Budget Without a Two-Year Plan?”

You don’t. Asking for a massive, multi-year budget upfront is a fantastic way to get shown the door. It’s too big, too abstract, and frankly, nobody believes those five-year ROI spreadsheets anyway.

Instead, you ask for a small, fixed-cost pilot. Think 8-10 weeks. The only goal is to deliver one high-impact “Thin Slice” that solves a real business problem. Maybe it’s a new feature that was impossible before, or a fix for a process that bleeds money.

We had a client do exactly this. They delivered a slice that cut payment processing errors by 18%. That single, undeniable data point did more to unlock the next phase of funding than any 100-page PowerPoint deck ever could. It changes the conversation from “what if” to “what’s next."

"What’s the Biggest Landmine When We Start Streaming Events from the Monolith?”

The most common—and expensive—mistake is creating noisy, “chatty” events that are just glorified database dumps. An event like orders_table_row_updated is a classic red flag. It tells you nothing about the business process and tightly couples every new service to the monolith’s internal schema.

A good event is a business fact, not a technical artifact.

OrderShippedis a business fact.customer_address_updatedis a database change. If you can’t tell the difference, you’re about to build a distributed monolith, negating the entire point of the exercise.

Fail to define a clean, canonical data model for your events, and you’re doomed. Every tiny database change in the legacy system will trigger a cascade of breaking changes across all your shiny new services. You have to invest the time upfront to design events that represent real business capabilities. A schema registry isn’t optional; it’s the contract that keeps your services from breaking each other.

”Can We Even Do This Without a Strong DevOps Culture?”

Honestly? It’s much harder, and it puts the whole project at risk. But a weak DevOps culture isn’t a showstopper—it just means that building that culture becomes goal #1 of your first phase.

Your first “Thin Slice” isn’t just about shipping a piece of the application. It’s about shipping the CI/CD pipeline that deploys it. You build the muscle by doing the reps. Each new service you carve out is another chance to refine your automation, monitoring, and deployment practices.

Trying to modernize an application while keeping your 2010-era deployment process is like putting a jet engine on a horse-drawn cart. The incremental approach gives you the perfect excuse to build modern engineering practices piece by piece, proving their value with every successful, automated deployment.

Making defensible vendor decisions is the hardest part of any modernization program. Modernization Intel provides the unvarnished truth about 200+ implementation partners, their real costs, and their project failure rates—all without vendor bias. Get the data you need to build your vendor shortlist. Learn more at softwaremodernizationservices.com.

Need help with your modernization project?

Get matched with vetted specialists who can help you modernize your APIs, migrate to Kubernetes, or transform legacy systems.

Browse Services