API-Led Connectivity for Legacy Systems: A Pragmatic Approach

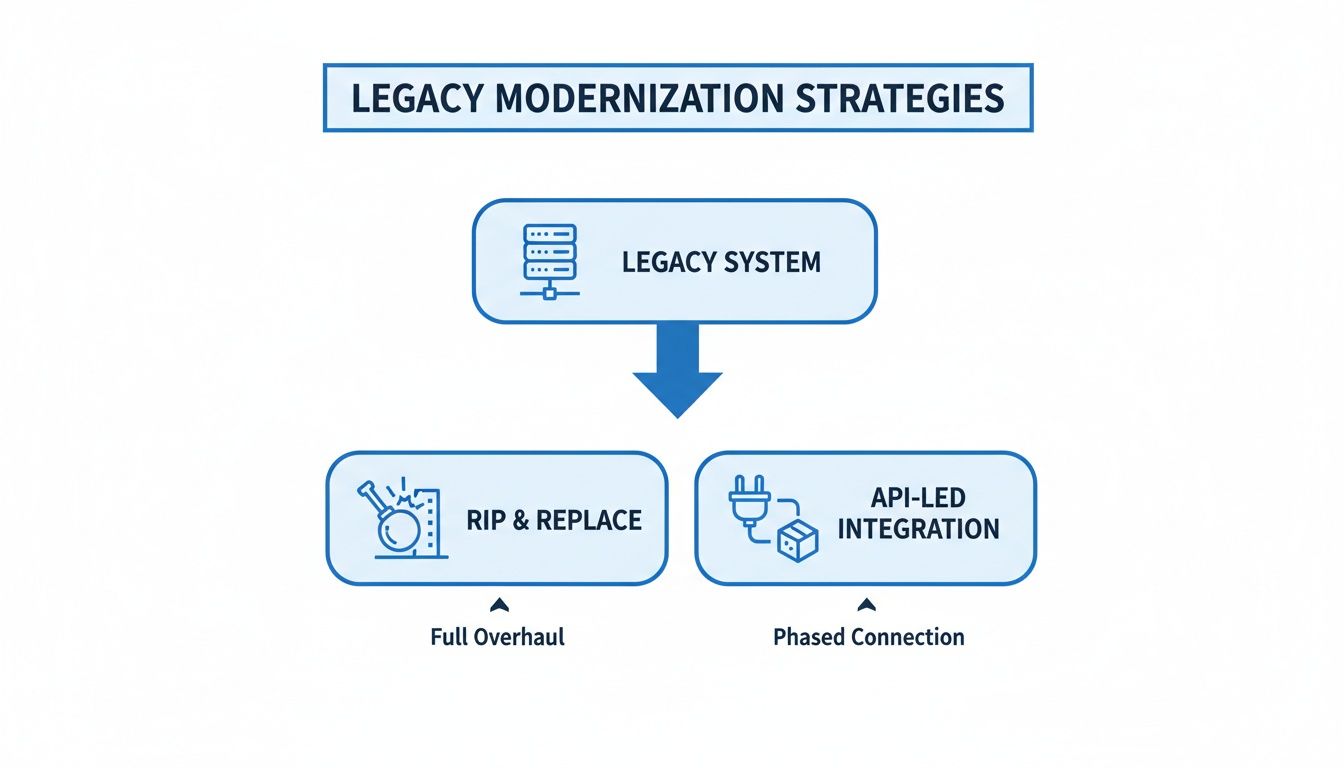

For decades, the standard playbook for legacy modernization was “rip-and-replace.” It’s a compelling narrative—out with the old, in with the new—but data shows it’s a flawed strategy for most organizations.

Industry analysis indicates that over 60% of large-scale modernization projects fail to meet their objectives. The primary reason is a consistent underestimation of the complexity embedded in legacy systems and the significant business disruption involved. API-led connectivity offers a pragmatic alternative, allowing organizations to unlock value from legacy systems without the high-stakes risk of a complete overhaul.

The Reality of Modernizing Legacy Systems

The push to replace legacy systems is understandable. Monolithic architectures, often running COBOL on a mainframe, can be perceived as an impediment to agility. However, these systems frequently contain decades of battle-tested business logic that is critical to operations.

A full rewrite attempts to replicate this institutional knowledge from scratch, a task that often leads to budget overruns exceeding 200%. The high failure rate necessitates a different approach. Instead of demolishing a structurally sound system, API-led connectivity installs modern, standardized interfaces—APIs—to make its most valuable assets accessible. This preserves the core while enabling new development.

To understand the practical implications, let’s compare the two approaches.

Modernization Approaches: A Reality Check

This table compares the typical outcomes of a full rewrite versus a strategic, API-led approach, based on documented project results.

| Metric | Full Rewrite / ‘Rip & Replace’ | API-Led Connectivity |
|---|---|---|
| Upfront Risk | High. A multi-year, multi-million dollar commitment with a single point of failure. | Low. Incremental investment, delivering value in focused sprints. |
| Business Disruption | High. Requires cutover weekends, extensive retraining, and parallel run costs. | Minimal. New services are built alongside existing systems, often with zero downtime. |
| Time to Value | 2-5 Years. ROI is typically not realized until project completion, if ever. | 3-6 Months. The first valuable API can be delivered and iterated upon quickly. |
| Budget Predictability | Poor. Prone to 200%+ overruns as unforeseen complexities are discovered. | High. Funded incrementally based on clear, achievable milestones. |
| Core Logic Preservation | Zero. Attempts to recreate decades of business rules from documentation and institutional memory. | 100%. Treats the legacy core as a stable, reliable system of record. |

The data suggests one path is a high-risk revolution, while the other is a controlled, phased evolution.

Preserving Value Through Strategic Integration

API-led connectivity is not about keeping old technology on life support indefinitely. It is about building a strategic bridge that decouples stable, slow-moving core systems from the fast-paced digital experiences that modern applications require.

This method, often part of incremental legacy modernization strategies, allows organizations to continue deriving value from previous technology investments. For example, modern ITSM platforms can be layered on top of older IT infrastructure, transforming service management without altering the core systems, as demonstrated when modernizing enterprise operations with ITSM Freshservice.

The approach treats legacy assets as reliable systems of record and wraps them in secure, reusable System APIs. These APIs function as translators, converting legacy protocols and data formats into standard REST/JSON that modern applications consume.

The Economic Shift Toward APIs

The financial case for this model is significant. The global network API market was valued at between USD 1.55 billion and USD 1.96 billion in 2024. Projections show this market expanding to over USD 47 billion by 2033, indicating a major enterprise shift toward API-driven integration. You can read the full research on the network API market on straitsresearch.com.

API-led connectivity has transitioned from a niche integration technique to a mainstream enterprise strategy. It acknowledges that effective modernization hinges on intelligent integration, not wholesale replacement. For any CTO managing critical, aging infrastructure, it represents a more realistic path forward.

This market growth reflects a mature understanding that the value is not in replacing the legacy core, but in making it securely and efficiently accessible. For leadership, this means modernization can be funded incrementally, delivering measurable business value at each stage rather than waiting years for a big-bang project to deliver on its promises.

Deconstructing The Three-Layer API Architecture

Effective API-led connectivity is not an ad-hoc creation of endpoints. It is a disciplined architectural approach built on a three-layer model.

Each layer has a distinct function. Together, they create a separation between slow-moving, fragile legacy systems and fast-moving, demanding applications. Correctly implementing this separation is critical to avoiding the creation of a brittle, point-to-point architecture similar to the one being replaced.

Instead of the high-risk ‘rip and replace’ gamble, this approach offers a structured, incremental path to modernization.

This is not a temporary fix. It is a deliberate architectural choice that protects the value locked in the legacy core while enabling innovation on top of it.

Layer 1: System APIs — The Foundation

System APIs form the base of this model. They are secure wrappers around core systems of record. Their sole function is to expose data and processes from legacy platforms—such as a CICS transaction on a mainframe or a query against an AS/400 database—in a modern, standard format.

A System API abstracts the complexity of a legacy system. It might take a proprietary request formatted as a COBOL copybook and translate it into a standard REST/JSON format. This abstraction shields all other developers from needing to understand the internal workings of the legacy system.

The only purpose of a System API is abstraction. It hides the implementation details of the legacy system behind a clean, consistent interface. This layer should contain zero business logic. It just unlocks the data.

For instance, a COBOL program that retrieves customer details can be wrapped by a System API. The API call is a simple HTTP GET request, but behind the scenes, it triggers the mainframe transaction and translates the output. Creating a library of reusable System APIs builds a stable foundation that can serve the business for years, regardless of how front-end technology evolves.
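As a rough illustration of the translation step, the sketch below slices a fixed-width record of the kind a COBOL copybook describes and returns it as JSON. The layout `CUSTOMER_LAYOUT`, the field names, and the sample record are all hypothetical; a real System API would derive the layout from the actual copybook and receive the record from the mainframe transaction.

```python
import json

# Hypothetical layout: (field name, start offset, length), as a copybook might define it.
CUSTOMER_LAYOUT = [
    ("customer_id", 0, 8),
    ("last_name", 8, 20),
    ("first_name", 28, 15),
    ("balance_cents", 43, 9),
]


def parse_record(record: str) -> dict:
    """Slice a fixed-width record into named fields and normalize types."""
    fields = {
        name: record[start:start + length].strip()
        for name, start, length in CUSTOMER_LAYOUT
    }
    # Zero-padded numeric text becomes an integer; no business rules are applied.
    fields["balance_cents"] = int(fields["balance_cents"])
    return fields


def to_json(record: str) -> str:
    """Return the JSON body a System API could send to its consumers."""
    return json.dumps(parse_record(record))


# Sample record, built here only for illustration.
sample = "00012345" + "DOE".ljust(20) + "JANE".ljust(15) + "000125050"
print(to_json(sample))
```

Note that the function only reshapes data. Anything resembling a rule, such as deciding whether the balance qualifies the customer for an offer, would belong in the Process layer.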

Layer 2: Process APIs — The Logic Engine

While System APIs expose raw data, Process APIs contain the business logic. This middle layer orchestrates and combines data from multiple System APIs to execute a specific business process.

A common example is an “Onboard New Customer” process. This business action requires coordinating several steps across different backend systems:

- Call the Customer System API to check if the user already exists in the CRM.

- Invoke the Credit Check System API to query an external credit bureau.

- Use the Address Verification System API to validate their shipping address.

- Call the Billing System API to create their new account.

The Process API manages this sequence, applies business rules, handles errors, and composes the final result. It is agnostic about the consumer, exposing a generic “Onboard Customer” capability that could be used by a web portal, a mobile app, or an internal Salesforce tool.
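The four steps above can be sketched as a single orchestration function. This is a minimal sketch, not a reference implementation: the `SystemApis` container, the function names, the stub clients, and the minimum credit score of 600 are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SystemApis:
    """Hypothetical System API clients, injected so the process logic stays testable."""
    customer_exists: Callable[[str], bool]
    credit_score: Callable[[str], int]
    address_is_valid: Callable[[str], bool]
    create_billing_account: Callable[[str], str]


class OnboardingError(Exception):
    """Raised when a business rule stops the onboarding process."""


def onboard_customer(apis: SystemApis, email: str, address: str,
                     min_score: int = 600) -> dict:
    """Run the onboarding steps in order and compose one result."""
    if apis.customer_exists(email):
        raise OnboardingError("customer already exists")
    if apis.credit_score(email) < min_score:  # assumed business rule
        raise OnboardingError("credit check failed")
    if not apis.address_is_valid(address):
        raise OnboardingError("address could not be verified")
    account_id = apis.create_billing_account(email)
    return {"email": email, "account_id": account_id, "status": "onboarded"}


# Stub clients standing in for real System API calls.
stubs = SystemApis(
    customer_exists=lambda email: False,
    credit_score=lambda email: 710,
    address_is_valid=lambda address: True,
    create_billing_account=lambda email: "ACC-1001",
)
print(onboard_customer(stubs, "jane@example.com", "1 Main St"))
```

Because the function knows nothing about who is calling it, the same capability could sit behind a web portal, a mobile app, or an internal tool.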

Layer 3: Experience APIs — The Consumer Interface

The top layer consists of Experience APIs. These are designed for the specific needs of a single end-user application or channel. They shape data into the exact format a front-end application needs to consume it efficiently.

Consider a mobile banking app. An Experience API for that app might combine data from a “Get Account Balance” Process API and a “Get Recent Transactions” Process API into a single, optimized response. This prevents the mobile app from making multiple round-trip calls over a cellular network, improving performance and user experience.
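A minimal sketch of that aggregation follows. The two Process API functions are stubs returning canned data, and every field name is hypothetical; the point is only that the Experience API fetches both results concurrently and returns the subset the mobile screen needs.

```python
from concurrent.futures import ThreadPoolExecutor


def get_account_balance(account_id: str) -> dict:
    """Stub for the 'Get Account Balance' Process API (canned data)."""
    return {"account_id": account_id, "available": "1520.75",
            "currency": "USD", "ledger_code": "GL-4410"}


def get_recent_transactions(account_id: str) -> list:
    """Stub for the 'Get Recent Transactions' Process API (canned data)."""
    return [
        {"id": "t3", "amount": "-42.10", "merchant": "Grocer", "batch_ref": "B77"},
        {"id": "t2", "amount": "-9.99", "merchant": "Streaming", "batch_ref": "B76"},
        {"id": "t1", "amount": "2500.00", "merchant": "Payroll", "batch_ref": "B70"},
    ]


def mobile_account_summary(account_id: str, max_items: int = 2) -> dict:
    """Call both Process APIs concurrently and trim the result for mobile."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        balance_future = pool.submit(get_account_balance, account_id)
        transactions_future = pool.submit(get_recent_transactions, account_id)
        balance = balance_future.result()
        transactions = transactions_future.result()
    return {
        "balance": f"{balance['available']} {balance['currency']}",
        "recent": [
            {"amount": t["amount"], "merchant": t["merchant"]}
            for t in transactions[:max_items]
        ],
    }


print(mobile_account_summary("ACC-1001"))
```

Internal fields such as `ledger_code` and `batch_ref` never reach the device, which keeps the payload small and the backend details private.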

This three-layer architecture provides true decoupling.

Front-end developers can work independently, served by their dedicated Experience API, without needing to understand the complexities of the mainframe or the orchestration logic in the Process layer. This separation enables the modernization of user experiences without destabilizing core systems.

Building a Defensible Business Case and ROI

Technical leaders cannot justify modernization based on architectural purity alone. A significant investment touching core legacy systems requires a business case grounded in financial reality.

The diagrams of API-led connectivity must translate into a clear Return on Investment (ROI) to secure funding and executive support.

The financial argument is based on three pillars: accelerated time-to-market, de-risking core operations, and incremental funding. Unlike a “big bang” rewrite that consumes capital for years before delivering value, an API-led approach can deliver measurable results in months.

Instead of requesting a $5M-$10M investment for a full system replacement with a multi-year payback period, a more strategic proposal can be made. A smaller initial investment can unlock a single, high-value piece of data. Successful delivery demonstrates impact and builds momentum for further funding.

Analyzing the Cost-Benefit Equation

A realistic analysis must consider both the investment required and the potential losses from inaction. The initial effort to wrap a legacy system is not free, but it is typically a fraction of the cost and risk of a full rewrite.

The financial model shifts from a large, monolithic project budget to a series of smaller, predictable investments.

Key cost factors to include in the model are:

- API Management Platform Licensing: Tools like MuleSoft or Apigee have annual subscriptions, often based on API volume. These platforms provide security, governance, and analytics capabilities that would be costly to build in-house.

- Specialized Developer Skills: Building secure, reusable, and scalable APIs requires different skills than traditional application development. The cost of specialized talent or a qualified partner should not be underestimated.

- The Initial Wrapping Effort: The complexity of the legacy system is a major variable. Wrapping a well-documented CICS transaction is different from wrapping an undocumented, 30-year-old database schema. A detailed legacy system risk assessment is essential for accurate forecasting.

These costs must be weighed against the benefits of increased agility and the protection of fragile core systems.

A Practical Cost Model Comparison

Consider a hypothetical insurance company needing to expose policy data from their mainframe to a new partner portal.

| Metric | Full System Rewrite | API-Led Connectivity |
|---|---|---|
| Initial Investment | $5M - $10M | $75K - $150K (for the first complex System API) |
| Time to First Value | 2 - 4 Years | 3 - 6 Months |
| Risk of Total Failure | High (>60%) | Low (contained to a single API project) |
| Funding Model | Large, Upfront Capital | Incremental, Operational |

The API-led approach allows for value demonstration at each step. Once the first System API for policy data is delivered, the ROI from that project justifies funding the next. This creates a self-funding modernization model aligned with agile business practices.
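To make the scale of the difference concrete, the arithmetic can be sketched using only the hypothetical ranges from the table above. The flat cost per API used in `apis_within_budget` is a simplifying assumption, not a claim from the table; in practice later APIs may cost more or less than the first.

```python
# Figures taken from the comparison table above, in USD (low, high).
REWRITE_COST = (5_000_000, 10_000_000)
FIRST_API_COST = (75_000, 150_000)


def upfront_ratio(rewrite_cost: int, api_cost: int) -> float:
    """How many times larger the rewrite commitment is than one API project."""
    return rewrite_cost / api_cost


def apis_within_budget(budget: int, cost_per_api: int) -> int:
    """Number of API projects a budget covers, assuming a flat cost per API."""
    return budget // cost_per_api


# Least favorable pairing for the API-led case: cheapest rewrite, priciest API.
low_ratio = upfront_ratio(REWRITE_COST[0], FIRST_API_COST[1])
# Most favorable pairing: priciest rewrite, cheapest API.
high_ratio = upfront_ratio(REWRITE_COST[1], FIRST_API_COST[0])

print(f"Rewrite commits {low_ratio:.0f}x to {high_ratio:.0f}x more capital up front")
print(apis_within_budget(REWRITE_COST[0], FIRST_API_COST[1]),
      "API projects fit in the smallest rewrite budget")
```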

This incremental model is more attractive to a CFO, transforming modernization from a high-stakes corporate gamble into a predictable, value-driven program.

Each new API becomes a reusable asset, compounding the ROI over time as more projects utilize it. The business case evolves from cost avoidance to building a foundation for future innovation.

Why Most API-Led Modernizations Underperform

Adopting API-led connectivity is not a guaranteed solution for legacy systems. While the architectural diagrams and vendor promises are compelling, many of these initiatives fail to deliver the expected ROI.

Analysis shows the problem is rarely the three-layer model itself. The technology is sound. Failure almost always stems from organizational missteps that undermine the architecture.

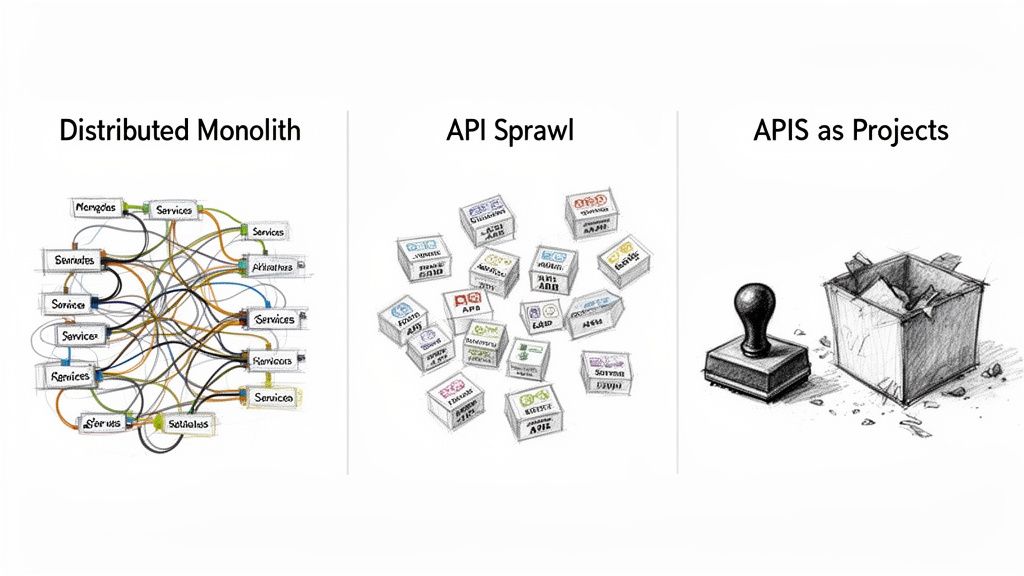

Teams often focus on the syntax—building System, Process, and Experience APIs—but miss the fundamental mindset shift required. The result is often a new set of services that are as rigid and siloed as the monolith they were intended to replace, leading to increased complexity instead of agility.

Here are three common failure modes and how to avoid them.

Creating a Distributed Monolith

The most common trap is the creation of a distributed monolith. This occurs when teams wrap old systems with System APIs but then create a web of direct dependencies between the new services. While it may appear to be a modern, distributed system, it is brittle and tightly coupled.

For example, suppose an “Order Status” Experience API calls a “Customer Details” System API directly, bypassing the Process layer. If the customer data format changes, that single change can break the Order Status API and any other service hard-coded with the same dependency.

This does not solve the original problem; it replaces one large monolith with multiple smaller ones spread across a network, which are often more difficult to debug.

The goal of API-led connectivity is decoupling, not just distribution. If changing one API requires redeploying five others, you have not achieved decoupling. You have only made your monolith more complicated.

The solution is to ensure that Process APIs are the only layer that orchestrates calls between System APIs. This enforces a clean separation of concerns and insulates core system logic from changes in other domains.
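One way to keep this rule from eroding is to check it automatically, for example in a CI step. The sketch below assumes a strict reading of the model in which each layer may call only the layer directly below it; the API names and the `calls` list are hypothetical, and in practice the dependency data would come from a catalog or from traffic analysis.

```python
# Assumed (strict) layering rule: each layer may call only the layer directly below it.
ALLOWED_TARGETS = {
    "experience": {"process"},
    "process": {"system"},
    "system": set(),
}


def find_violations(layer_of: dict, calls: list) -> list:
    """Return every (caller, callee) pair that skips or crosses layers."""
    return [
        (caller, callee)
        for caller, callee in calls
        if layer_of[callee] not in ALLOWED_TARGETS[layer_of[caller]]
    ]


layer_of = {
    "order-status-exp": "experience",
    "order-tracking-prc": "process",
    "customer-details-sys": "system",
    "orders-sys": "system",
}
calls = [
    ("order-status-exp", "customer-details-sys"),  # Experience calling System directly
    ("order-status-exp", "order-tracking-prc"),
    ("order-tracking-prc", "customer-details-sys"),
    ("order-tracking-prc", "orders-sys"),
]
print(find_violations(layer_of, calls))
# prints [('order-status-exp', 'customer-details-sys')]
```

Some teams allow Process APIs to call other Process APIs; relaxing the rule only requires adding `"process"` to the allowed targets for that layer.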

Lacking a Central API Governance Model

Without a strong governance model, an API-led connectivity initiative for legacy systems can lead to “API sprawl.” When individual teams build APIs without central oversight, the results are often inefficient and inconsistent.

This lack of governance manifests in several ways:

- Duplication of Effort: Multiple teams may build slightly different versions of the same API (e.g., “GetCustomer”) because they are unaware of each other’s work.

- Inconsistent Design: APIs may have different naming conventions, authentication methods, and error codes, making them difficult for developers to consume.

- Security Vulnerabilities: Without standardized security policies, some APIs might expose sensitive legacy data with inadequate protection, creating significant compliance risks.

The solution is to establish a Center for Enablement (C4E). A C4E is a cross-functional team that creates standards, provides reusable templates, and maintains a central catalog of all available APIs. Its role is not to build everything but to enable other teams to build correctly and consistently.
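Part of what a C4E standardizes can be checked mechanically against the central catalog. The following is a minimal sketch under stated assumptions: the naming convention in `NAME_PATTERN`, the `oauth2` requirement, and the catalog entry fields are all invented for illustration rather than taken from any particular platform.

```python
import re
from collections import defaultdict

# Hypothetical convention: lowercase words joined by hyphens, ending in a layer suffix.
NAME_PATTERN = re.compile(r"^[a-z]+(-[a-z]+)*-(sys|prc|exp)$")
REQUIRED_AUTH = "oauth2"  # assumed organization-wide standard


def lint_catalog(entries: list) -> list:
    """Report naming, authentication, and likely-duplicate problems."""
    problems = []
    teams_by_key = defaultdict(set)
    for entry in entries:
        name = entry["name"]
        if not NAME_PATTERN.match(name):
            problems.append(f"{name}: name does not follow the convention")
        if entry["auth"] != REQUIRED_AUTH:
            problems.append(f"{name}: auth is {entry['auth']}, expected {REQUIRED_AUTH}")
        # Ignore case and punctuation so near-identical names collide.
        key = re.sub(r"[^a-z0-9]", "", name.lower())
        teams_by_key[key].add(entry["team"])
    for key, teams in sorted(teams_by_key.items()):
        if len(teams) > 1:
            problems.append(f"{key}: possibly duplicated by teams {sorted(teams)}")
    return problems


catalog = [
    {"name": "get-customer-sys", "team": "crm", "auth": "oauth2"},
    {"name": "GetCustomer_sys", "team": "billing", "auth": "basic"},
    {"name": "orders-prc", "team": "sales", "auth": "oauth2"},
]
for problem in lint_catalog(catalog):
    print(problem)
```

A check like this flags all three sprawl symptoms listed above for the second entry, without the C4E having to review every API by hand.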

Treating APIs as Projects Not Products

A critical failure is treating APIs as one-off technical projects rather than long-term business products. A project has a defined start and end date. When an API is built for a single consumer and the project is considered “done,” the API is often abandoned without clear ownership.

This project-based mindset is the enemy of reusability. An abandoned API becomes technical debt. Its documentation becomes outdated, it may miss security patches, and no one is responsible for its lifecycle.

The “API-as-a-Product” mindset is essential. This means every API must have:

- A Designated Product Manager: An owner responsible for the API’s roadmap, documentation, and developer experience.

- A Clear Service Level Agreement (SLA): Published guarantees for uptime and performance that other teams can rely on.

- Full Lifecycle Management: A defined process for versioning, deprecating, and retiring the API.
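These three requirements can be captured as a small catalog record that refuses to exist without them. This is a sketch only: the `ApiProduct` fields, the lifecycle states, and the rule that a deprecated API must carry a sunset date are assumptions chosen to illustrate the idea.

```python
from dataclasses import dataclass, replace
from datetime import date
from enum import Enum
from typing import Optional


class Lifecycle(Enum):
    ACTIVE = "active"
    DEPRECATED = "deprecated"
    RETIRED = "retired"


@dataclass(frozen=True)
class ApiProduct:
    name: str
    version: str
    owner: str                # the designated product manager
    uptime_target_pct: float  # published SLA target
    state: Lifecycle = Lifecycle.ACTIVE
    sunset_on: Optional[date] = None

    def __post_init__(self):
        if not self.owner.strip():
            raise ValueError(f"{self.name}: an owner is required")
        if self.state is Lifecycle.DEPRECATED and self.sunset_on is None:
            raise ValueError(f"{self.name}: deprecated APIs need a sunset date")


def deprecate(product: ApiProduct, sunset_on: date) -> ApiProduct:
    """Return a copy marked as deprecated with a published retirement date."""
    return replace(product, state=Lifecycle.DEPRECATED, sunset_on=sunset_on)


v1 = ApiProduct("customer-sys", "1.4.0", "j.doe", 99.9)
v1_deprecated = deprecate(v1, date(2026, 6, 30))
print(v1_deprecated.state.value, v1_deprecated.sunset_on)
```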

When APIs are treated as products, they become strategic assets that deliver compounding value. When treated as projects, they become a collection of unmanaged code that hinders innovation.

Choosing Your API Deployment Architecture

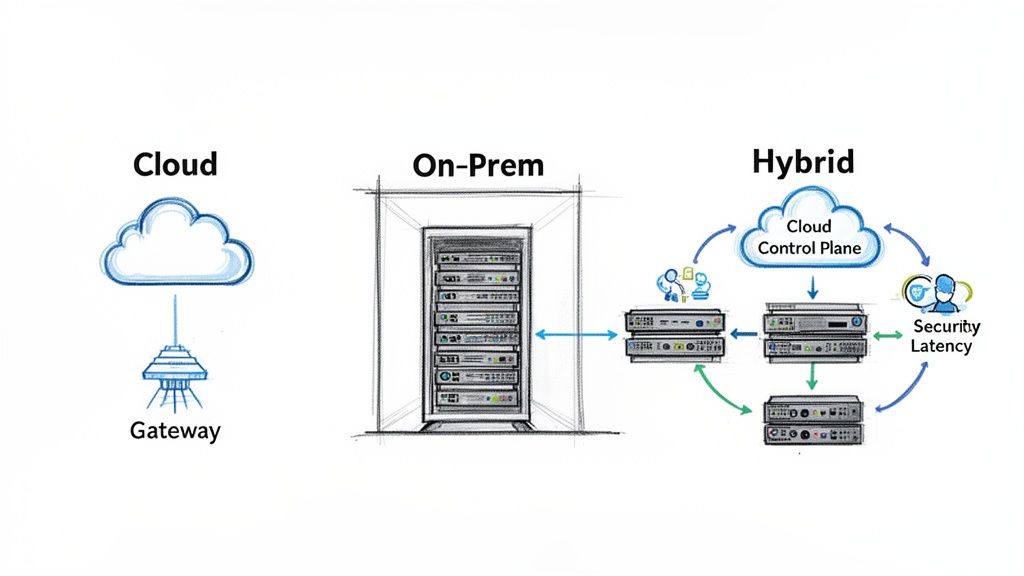

The deployment location of your APIs is as important as their design. For organizations with legacy systems, the decision about the deployment architecture for API gateways is a strategic one with significant implications for security, compliance, and budget.

While a “cloud-first” approach is often promoted, it can be an oversimplification for companies with stable, on-premise infrastructure. The choice is between three models: on-premises, cloud-native, and hybrid. The selection will impact data sovereignty, latency, and regulatory compliance.

On-Premises Deployment

An on-premises deployment means hosting everything in your own data centers, including the API gateway (runtime plane) and the management console (control plane). This approach is suitable for organizations with stringent security requirements or those subject to strict regulations, as it provides complete control over security and data location.

However, this control comes at a cost. The organization is responsible for all hardware procurement, maintenance, scaling, and software patching. The total cost of ownership is typically higher, and it places a significant operational burden on infrastructure teams.

When to choose On-Premises:

- FedRAMP or ITAR Compliance: Government regulations mandate that all data processing and management remain within specific physical or national borders.

- Extreme Low-Latency Requirements: APIs must communicate with legacy systems in the same physical location with sub-millisecond response times.

- Completely Air-Gapped Environments: Systems that cannot be connected to the public internet, such as in defense or industrial control environments.

Cloud-Native Deployment

The cloud-native approach involves deploying both API gateways and the control plane in a public cloud like AWS, Azure, or GCP. This model offers speed and scalability, as the cloud provider manages the underlying infrastructure, allowing teams to focus on building APIs.

The trade-off is a loss of some control, and data residency can become a challenge. Transferring sensitive data from a mainframe to a public cloud for processing is not feasible for many organizations, especially under regulations like GDPR that have strict rules about personal data location.

Hybrid Deployment: The Fastest-Growing Model

For most enterprises modernizing legacy systems, the hybrid model is the most practical path. In this model, the API gateways (runtime) are deployed on-premises, close to the legacy systems. The management and analytics console (control plane) is a cloud service.

This configuration offers a balance of benefits. All sensitive data and traffic remain within the corporate firewall, satisfying security and compliance requirements. At the same time, developers use a modern cloud interface for managing APIs, monitoring performance, and setting policies, without the organization having to maintain the management software.

The API management market is expected to reach USD 51.11 billion by 2033. While cloud deployments constitute 80.10% of the market today, the hybrid model is the fastest-growing segment with a 21.90% CAGR. This growth indicates that organizations recognize the risks of a “lift and shift” to the cloud for their most critical legacy systems. You can dig into these API management market trends on imarcgroup.com.

This trend shows that hybrid architecture is a deliberate strategy for achieving modern agility without sacrificing the stability of core business systems.

Selecting the best model requires a thorough analysis of security, compliance, performance, and operational capacity. The table below outlines the key decision criteria.

API Management Deployment Model Selection Criteria

| Criteria | On-Premises | Cloud-Native | Hybrid |
|---|---|---|---|
| Data Sovereignty & Compliance | Highest Control. All data and traffic remain within the private network. Suitable for GDPR, FedRAMP, HIPAA. | Lower Control. Data is processed in the cloud provider’s region. Requires careful configuration. | High Control. Sensitive data traffic remains on-premise; only metadata is sent to the cloud management plane. |
| Security Control | Maximum. The organization controls the entire stack, from physical hardware to network security policies. | Shared Responsibility. The organization secures its configurations; the cloud provider secures the underlying infrastructure. | Balanced. The organization controls gateway security on-premise, while the cloud provider secures the management plane. |
| Latency | Lowest. Gateways are co-located with backend legacy systems, enabling sub-millisecond latency. | Variable. Latency depends on the network path from the data center to the cloud provider. | Lowest. Gateways processing traffic are located next to core systems, same as on-premises. |
| Operational Overhead & TCO | Highest. The organization is responsible for all hardware, software, patching, and maintenance. High upfront CapEx. | Lowest. No infrastructure management. Pay-as-you-go model (OpEx) with minimal upfront cost. | Medium. No overhead for the management plane, but the on-premise gateway infrastructure must still be managed. |
| Scalability & Agility | Low. Scaling is slow and requires hardware procurement. Feature updates depend on vendor release cycles. | Highest. On-demand scalability. Fast access to new features and services. | High. A cloud-based control plane provides agility, while on-premise gateways can be scaled based on internal capacity. |
| Vendor Lock-In | Medium. Tied to the vendor’s software, but the infrastructure is owned and can be migrated. | High. Deep integration with a cloud provider’s ecosystem can make switching difficult and costly. | Medium. The control plane is vendor-specific, but runtime gateways can sometimes be changed with less effort. |

The right choice depends on practical needs rather than trends. The rapid adoption of the hybrid model shows that balancing on-premise security with cloud agility is often the most effective strategy for legacy modernization.

Practical Guidance on Vendor and Partner Selection

Selecting an API management platform like MuleSoft or Apigee is only the first step. The success of an API-led modernization project often depends on the implementation partner.

A platform is a set of tools. The partner provides the architectural discipline, governance, and experience to prevent common pitfalls.

Vetting Your Implementation Partner

Choosing a partner based solely on a sales presentation or a list of certifications can be a mistake.

A qualified partner should have experience integrating with systems similar to yours, not just modern SaaS platforms. Their answers to specific, technical questions are revealing.

- Legacy System Specificity: “Provide an example of a project where you integrated with a Unisys mainframe using its native communication protocols, not a generic database adapter.”

- Architectural Discipline: “How do you define API contracts and domain boundaries to prevent the creation of a distributed monolith? What is your methodology?”

- Governance Framework: “What is your specific governance model for preventing API sprawl? How do you enforce standards without impeding development velocity?”

Effective partners will discuss governance, the API-as-a-Product mindset, and managing domain boundaries. A partner who only focuses on the tech stack may lack the strategic perspective required. A vendor due diligence checklist provides a framework for these conversations.

When Not to Use API-Led Connectivity

This strategy is not universally applicable. Using it in the wrong context can lead to increased costs and technical debt.

There are scenarios where this approach is not the right tool for the job:

- The Legacy System is Unstable: If a core system suffers from frequent outages, data corruption, or performance issues, wrapping it with an API will only expose its instability to more consumers. The core system must be stabilized first.

- The Business Process is Obsolete: If the business process supported by the legacy system is fundamentally flawed, automating it with an API will not fix the underlying problem. The process should be modernized before the technology that supports it.

- No Appetite for an ‘API-as-a-Product’ Culture: If the organization is unwilling to treat APIs as strategic assets with product managers, lifecycle management, and governance, the initiative is likely to fail. This is a cultural shift, not just a technology project.

Unpacking the Fine Print: Your Questions Answered

Here are answers to common questions from teams evaluating an API-led approach to legacy modernization.

How Is This Different From Traditional SOA?

On the surface, Service-Oriented Architecture (SOA) and API-led connectivity appear similar, as both involve exposing services. The key difference lies in philosophy and execution.

Traditional SOA was often a top-down, centrally planned system that relied on heavy protocols like SOAP/WSDL, requiring specialized expertise. In contrast, API-led connectivity is more decentralized and product-focused, favoring lightweight, developer-friendly standards like REST/JSON.

The three-layer model (System, Process, Experience) also provides an architectural discipline often lacking in SOA projects. It offers a clear blueprint for separating system access from business logic and consumer-facing experiences, helping to prevent the tightly coupled architectures that many SOA initiatives became.

What Are The Most Critical Team Skills We Need?

A new platform like MuleSoft or Apigee is not a solution in itself. The team must have the right underlying skills.

The most important skills include:

- API Design Principles: The ability to create clean, intuitive, and secure RESTful APIs. This involves designing contracts that are easy for other developers to consume.

- Data Modeling: Expertise in defining clear JSON schemas is crucial to avoid data consistency issues between old and new systems.

- Security Protocols: Deep knowledge of modern standards like OAuth 2.0 and OpenID Connect is non-negotiable for protecting valuable legacy data.

- DevOps Practices: Experience with CI/CD pipelines for automated testing and deployment is essential for delivering APIs at the speed the business requires.

For a primer on these topics, this beginner’s guide to seamless API integration is a useful resource.

Can We Do This Without An Expensive API Management Platform?

Technically, it is possible to build a solution using open-source API gateways like Kong or Tyk. However, this approach often has hidden costs.

While you save on licensing fees, the Total Cost of Ownership (TCO) can quickly spiral. The internal effort required to build, maintain, and scale surrounding capabilities like a developer portal, analytics, and advanced policy enforcement is substantial. These are the features that commercial platforms provide out-of-the-box.

Opting for an open-source solution means the organization must build and support an internal API platform, which can divert resources from core business objectives.

What Is A Realistic First Project?

The initial project should be a “quick win” with low technical risk but high business visibility. This builds momentum and demonstrates the value of the approach to stakeholders.

A classic starting point is creating a “Customer 360” API. This project typically involves combining customer data from two or three legacy systems, such as a CRM, a billing system, and an order database. The goal is to expose a unified view of the customer to power a new mobile app or a sales dashboard. It delivers a tangible result that is easily understood and appreciated by the business.
