67% of Enterprise Software Projects Fail. Here's How to Choose a Partner Who Won't.

An enterprise software development company isn’t just a team of coders. It is a specialist firm contracted to build, overhaul, or maintain the systems that run an entire business, such as ERPs, CRMs, or core banking platforms. This is mission-critical work that demands verifiable technical depth, stringent security, and a credible plan for long-term scale.

The High Stakes of Choosing a Software Partner

Selecting an enterprise software partner is a multi-million-dollar strategic decision with board-level visibility. According to market analysis, global enterprise software spending is projected to grow from $316.69 billion in 2025 to $403.90 billion by 2030.

This growth is driven by a strategic shift to cloud-based solutions, which now account for over 55% of all enterprise systems. This proliferation of options creates significant noise, making it difficult to differentiate between competent partners and those with slick sales pitches. Relying on unverifiable case studies is a high-risk approach, especially when project failures are common.

Moving Beyond Generic Vendor Lists

The primary challenge isn’t finding a list of companies. It’s identifying the right one for a specific, high-stakes problem.

A firm effective at building a greenfield, cloud-native application may lack the specific expertise for a complex COBOL-to-Java mainframe migration. This critical distinction is often obscured in marketing materials.

A vendor’s stated capabilities are often aspirational. The real test is their documented track record of solving problems at a similar scale and complexity to yours, backed by client references and a transparent cost model.

This guide provides a structured evaluation process for engineering leaders who must make defensible, data-driven decisions. It examines technical capabilities, financial stability, and operational maturity.

Implementing a robust third-party risk management framework from the outset is a critical component of this process. For a detailed guide on structuring the evaluation, see these software procurement best practices.

Mapping Your Needs to Vendor Specialization

A frequent mistake is issuing an RFP without a clear internal definition of the required engagement type. Not all partners are structured the same. A firm that excels at staff augmentation is fundamentally different from one that delivers full-scope projects. Aligning internal needs with a vendor’s core business model is the critical first step.

A legacy system migration has different requirements than building a new cloud application. The process requires a structured approach: internal analysis, market evaluation, and then selection.

This methodical flow—from internal needs analysis to final partnership—helps prevent reactive decisions that often lead to failed projects.

Choosing the Right Engagement Model

“Outsourcing” is too generic a term. There are three primary engagement models, each solving a different problem.

- Staff Augmentation: Best for filling a specific, temporary skill gap in an existing, managed team, such as hiring a senior Java developer with Kafka experience for six months. The burden of project management, architecture, and quality rests entirely on the client. Attempting to build a full team this way without strong internal leadership often fails.

- Managed Teams: A middle-ground model where the vendor provides a dedicated, cohesive team that they co-manage with the client. This is suitable when the client owns the product roadmap but needs a partner to handle day-to-day execution. It requires tighter collaboration than project-based outsourcing.

- Project-Based Outsourcing: Best suited for well-defined projects with a fixed scope and clear deliverables. The client provides requirements, and the vendor delivers the finished product. The primary risk is rigidity; scope creep can lead to significant and costly change orders if requirements are not thoroughly defined upfront.

Aligning Specialization with Project Complexity

Beyond the engagement model, vendor specialization is a critical filter. A generalist firm claiming expertise in all areas is seldom the right choice for a high-stakes enterprise project.

To illustrate, here is a mapping of common projects to required partner specializations.

Vendor Specialization Mapping Matrix

| Project Type | Required Specialization | Common Pitfall to Avoid |
|---|---|---|
| Legacy Mainframe Modernization | FinTech / Core Systems Migration | Hiring a generalist “Java shop” that does not understand mainframe data types (COMP-3) or transactional integrity. |
| Greenfield Cloud-Native SaaS App | Cloud-Native Development / DevOps | Picking a firm whose “cloud” experience is limited to lift-and-shift migrations, not building scalable platforms. |
| Custom ERP Development | Business Process Automation (BPA) / Systems Integration | Choosing a partner focused on front-end UI/UX but lacking experience in complex backend logic and integrations. |
| AI/ML Model Integration | MLOps / Data Engineering | Selecting a data science consultancy that can build models but cannot deploy, monitor, and scale them in production. |
| Mobile Banking App | Secure Mobile Development / FinTech | Partnering with a consumer app agency that underestimates security, compliance (PCI DSS), and core banking integration requirements. |

This matrix highlights the need for deep, not broad, expertise.

Scenario 1: Core Banking System Modernization

The goal is to migrate a 30-year-old COBOL-based platform to a modern Java microservices architecture. This involves handling archaic data types like COMP-3 (packed decimals) and ensuring transactional integrity.

- The Wrong Partner: A large, generalist IT outsourcer with “Java” and “Cloud” listed as services but no verifiable case studies in mainframe modernization for financial institutions.

- The Right Partner: A specialized firm with a portfolio of FinTech legacy migrations. They will ask about decimal precision handling and the necessity of using `BigDecimal` in Java. They have prior experience with the specific technical challenges.
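To make the precision point concrete, here is a minimal Python sketch of decoding a COMP-3 field, using `decimal.Decimal` as a stand-in for Java’s `BigDecimal`. It assumes the preferred IBM sign nibbles (0xC or 0xF positive, 0xD negative) and that the scale (number of implied decimal places) comes from the field’s PIC clause; the function name `unpack_comp3` is hypothetical.

```python
from decimal import Decimal


def unpack_comp3(data: bytes, scale: int) -> Decimal:
    """Decode an IBM packed-decimal (COMP-3) field into an exact Decimal.

    Each byte holds two BCD digits; the low nibble of the last byte is the sign.
    `scale` is the number of implied decimal places, e.g. 2 for PIC S9(5)V99.
    """
    if not data:
        raise ValueError("empty COMP-3 field")
    digits = []
    for byte in data[:-1]:
        digits.extend((byte >> 4, byte & 0x0F))
    digits.append(data[-1] >> 4)
    sign_nibble = data[-1] & 0x0F

    if any(d > 9 for d in digits):
        raise ValueError("invalid BCD digit in COMP-3 field")
    if sign_nibble in (0xC, 0xF):
        sign = 0  # positive (0xF marks an unsigned field)
    elif sign_nibble == 0xD:
        sign = 1  # negative
    else:
        raise ValueError(f"invalid sign nibble: {sign_nibble:#x}")

    # Build the value from sign, digits, and exponent: exact, no binary float involved.
    return Decimal((sign, tuple(digits), -scale))


# PIC S9(5)V99 COMP-3 holding 12345.67 is stored as the 4 bytes 12 34 56 7C.
print(unpack_comp3(bytes([0x12, 0x34, 0x56, 0x7C]), scale=2))  # 12345.67

# Why the decimal type matters: binary floats cannot represent cents exactly.
print(0.10 + 0.20 == 0.30)                                   # False
print(Decimal("0.10") + Decimal("0.20") == Decimal("0.30"))  # True
```

IBM hardware also accepts 0xA and 0xE as positive and 0xB as negative signs, which this sketch rejects; a production decoder would need to match whatever conventions the legacy data actually uses.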

Scenario 2: Greenfield Cloud-Native ERP

Building a new, multi-tenant SaaS ERP from the ground up on AWS. Success depends on scalability, multi-region deployment, and a robust CI/CD pipeline.

- The Wrong Partner: A firm whose experience is primarily “lift-and-shift” cloud migrations or maintaining on-premise applications.

- The Right Partner: A partner with certified AWS architects and a proven history of building and managing scalable platforms. They will discuss infrastructure-as-code (Terraform), container orchestration (Kubernetes), and designing for failure.

The principle is to match the vendor’s demonstrated history, not their marketing deck, to your project’s specific risk profile. A partner’s specialization is their most valuable asset—and your most important selection criterion.

Executing a Rigorous Technical Due Diligence

A vendor’s tech stack—Java, Python, AWS, Kubernetes—is table stakes. Technical capability is not about what technologies they use, but how they use them. A disciplined due diligence process is required to differentiate between a genuine enterprise partner and a well-marketed team of contractors.

Initial meetings are often with sales engineers. Insist on speaking directly with the proposed technical architect and lead engineers who will be responsible for the project’s success. This is non-negotiable.

Probing Beyond Surface-Level Competence

Once the engineers are in the room, questions should be specific, open-ended, and grounded in real-world scenarios. Avoid generic questions like, “What’s your experience with microservices?”

Instead, probe their processes and problem-solving approaches to expose their engineering maturity:

- Code Quality & Standards: “Walk me through your code review process. How are standards enforced, and what is the protocol for a disagreement between a senior and junior developer on a pull request?”

- CI/CD & DevOps Maturity: “Describe your process for handling a critical dependency vulnerability, like a Log4j issue, in a multi-repository project. What is your SLA for identification, patching, testing, and deployment?”

- Architectural Philosophy: “What are the specific conditions under which it is appropriate to introduce a message queue like Kafka? What are the primary failure modes you design for when implementing one?”

- Testing Strategy: “What is your team’s philosophy on test coverage? Describe a situation where 100% unit test coverage was the wrong goal and explain why.”

Their answers should demonstrate an understanding of trade-offs, a skepticism of unnecessary complexity, and a focus on operational reality. For more, here is a complete technical due diligence checklist.

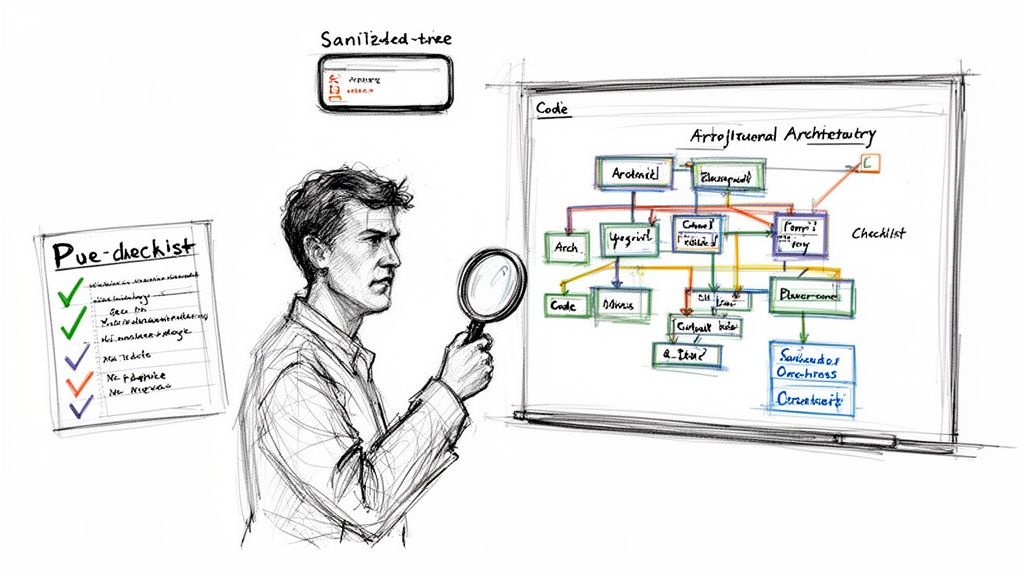

Reviewing Artifacts: Code and Architecture

Request sanitized artifacts from past projects that are similar in scale and complexity. A vendor’s reluctance to share these is a significant red flag.

1. Code Samples: Review a pull request or a small, self-contained module. You are not looking for perfection, but for evidence of a disciplined engineering culture.

What to look for:

- Clarity and Readability: Is the code self-documenting? Are variable names logical?

- Error Handling: Are exceptions handled deliberately, with useful context, or are they silently swallowed or left to bubble up unhandled?

- Testing: Are there meaningful unit and integration tests? Is it clear what they are testing?

- Commit History: Do commit messages provide context, or are they uninformative (e.g., “fixed bug”)?

2. Architectural Diagrams: A good architecture diagram should clearly articulate system boundaries, data flow, and technology choices.

What to analyze:

- Appropriate Complexity: Does the architecture fit the problem, or is it over-engineered?

- Failure Planning: Does the diagram account for failure with patterns for redundancy, retries, and circuit breakers?

- Security Considerations: How are authentication, authorization, and data encryption represented? Is security integrated or an afterthought?
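As a reference point for what “failure planning” looks like in code, here is a minimal Python sketch of two of the patterns named above: a retry with exponential backoff and a simple circuit breaker. The class and function names, thresholds, and the injectable `clock` and `sleep` hooks are illustrative assumptions, not a specific library’s API.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are rejected without trying."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures, then fails fast
    until `reset_timeout` seconds pass and one trial call is allowed."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("downstream marked unhealthy; failing fast")
            # Half-open: permit one trial call; a single failure re-opens the breaker.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def retry_with_backoff(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.1,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient failures, doubling the delay after each failed attempt."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying against an open breaker only adds load
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```

In use, each retry attempt would pass through the breaker, for example `retry_with_backoff(lambda: breaker.call(fetch_page))`, where `fetch_page` is a hypothetical stand-in for the real downstream call. Production implementations typically also add random jitter to the backoff so that many clients do not retry in lockstep.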

A vendor’s hesitation to share sanitized code or architecture diagrams is a strong signal. It often means they lack confidence in their own standards or that their best work was done by engineers who are no longer with the company.

The Live Problem-Solving Session

This is the final test. Present the vendor’s proposed tech lead with a simplified but real problem your team is facing. This is a collaborative problem-solving exercise, not an algorithm quiz.

For example: “We need to design a system to process and validate 10 million inbound data records daily from a third-party API with a 99.9% uptime requirement. The records must be enriched with internal data before being stored. Sketch out the high-level components and identify your top three technical risks.”

Observe their approach. Do they ask clarifying questions first? Do they consider scalability, cost, and maintenance? Or do they jump to a solution based on their preferred tech stack? This exercise reveals their thought process under pressure.
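A strong candidate usually grounds the design in rough numbers before naming components. The back-of-envelope arithmetic for the example prompt is sketched below in Python. It assumes records arrive evenly across the day and that the 99.9% target is measured over a 30-day month; the 4x processing headroom is a hypothetical figure, not part of the prompt.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_rate(records_per_day: int) -> float:
    """Average arrival rate in records/second, assuming an even spread."""
    return records_per_day / SECONDS_PER_DAY


def downtime_budget_minutes(uptime: float, period_days: float = 30) -> float:
    """Minutes of downtime an uptime target allows over the period."""
    return (1 - uptime) * period_days * 24 * 60


def catch_up_seconds(backlog: float, capacity_rps: float, arrival_rps: float) -> float:
    """Time to drain a backlog while new records keep arriving."""
    if capacity_rps <= arrival_rps:
        raise ValueError("capacity must exceed the arrival rate to drain a backlog")
    return backlog / (capacity_rps - arrival_rps)


rate = average_rate(10_000_000)          # ~115.7 records/second
budget = downtime_budget_minutes(0.999)  # ~43.2 minutes per 30 days
backlog = rate * budget * 60             # ~300,000 records queued by a full-budget outage
drain = catch_up_seconds(backlog, capacity_rps=4 * rate, arrival_rps=rate)
print(f"{rate:.1f} rec/s, {budget:.1f} min budget, {backlog:,.0f} backlog, "
      f"{drain / 60:.1f} min to drain")
```

The average rate is modest; the more revealing question is whether the candidate asks what happens to inbound records during an outage and whether the enrichment step can catch up once the upstream API recovers.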

Decoding Cost Models and Uncovering Hidden Fees

Vendor proposals often obscure total project costs. Understanding how prices are structured is the first step to controlling the budget.

There are three dominant cost structures, each shifting financial risk differently.

Dissecting the Three Core Pricing Models

1. Fixed Price: This model appears the safest, with one price for a defined scope. It is suitable for projects with clear, unchanging requirements. Its weakness is rigidity. Any deviation triggers a change order, and change orders are often a significant profit center for vendors. A $500K fixed-price project can grow to $750K through change orders.

2. Time and Materials (T&M): You pay for hours worked at a set rate (e.g., Senior Engineer at $150/hr). This offers maximum flexibility for complex projects with evolving requirements. However, the cost risk is 100% on the client. Without disciplined project management and clear velocity metrics, costs can escalate.

3. Value-Based: The fee is tied to a specific business outcome, such as “a 15% reduction in call center handle time.” This aligns incentives but can be difficult to implement. Defining and measuring “value” is challenging and requires a high degree of trust.

A vendor’s preferred cost model indicates their confidence. A firm insisting on T&M for a well-defined project may be unprepared. One pushing a fixed price for a vague R&D initiative may be planning to profit from change requests.
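The way the first two models shift risk can be made concrete with a small Python sketch. The $500K-to-$750K fixed-price example and the $150/hr rate come from the text above; the T&M hour figures are hypothetical inputs chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class FixedPrice:
    base: float
    change_orders: float = 0.0

    def total(self) -> float:
        # Scope is locked; any deviation is billed through change orders.
        return self.base + self.change_orders


@dataclass
class TimeAndMaterials:
    hourly_rate: float
    estimated_hours: float
    actual_hours: float

    def total(self) -> float:
        # The client pays for every hour worked, so overruns land on the client.
        return self.hourly_rate * self.actual_hours


def growth_pct(original: float, final: float) -> float:
    """Percentage increase from the signed estimate to the final bill."""
    return (final - original) / original * 100


fixed = FixedPrice(base=500_000, change_orders=250_000)
tm = TimeAndMaterials(hourly_rate=150, estimated_hours=3_000, actual_hours=4_000)

print(f"Fixed price: ${fixed.total():,.0f} (+{growth_pct(fixed.base, fixed.total()):.0f}%)")
est = tm.hourly_rate * tm.estimated_hours
print(f"T&M:         ${tm.total():,.0f} (+{growth_pct(est, tm.total()):.0f}%)")
```

Value-based pricing is left out because its fee depends on how the agreed business outcome is measured, which is the hard part the text describes.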

Exposing the Hidden Line Items

The initial quote is rarely the final cost. Demand a fully-loaded estimate that accounts for common omissions.

Your RFP should require vendors to explicitly price these items:

- Environment Setup and Teardown: Time to configure dev, staging, and UAT environments.

- Data Migration: The cost to extract, transform, and load legacy data. This is frequently underestimated.

- Third-Party Licensing: Costs for APIs, libraries, or other software dependencies.

- Project Management Overhead: Typically 15-20% of project cost, this should be itemized.

- Post-Launch Hypercare: The level, duration, and cost of support after go-live.

- Onboarding and Knowledge Transfer: Billable time for their team to learn your systems and for your team to learn the new solution.

Also, verify their expertise in cloud cost optimization strategies to avoid a system with a crippling monthly cloud bill.

A vendor’s resistance to providing this level of detail suggests they plan to introduce these costs later. For context, compare these costs to typical business consulting fees.
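One way to operationalize this requirement is to check each quote against the line items above and, when project management is not itemized, bracket the total using the 15-20% overhead range. The Python sketch below does that; the line-item keys, the sample quote, and the choice to compute overhead on the other line items are assumptions for illustration.

```python
REQUIRED_LINE_ITEMS = (
    "environment_setup",
    "data_migration",
    "third_party_licensing",
    "project_management",
    "hypercare",
    "onboarding_knowledge_transfer",
)


def missing_line_items(quote: dict[str, float]) -> list[str]:
    """Line items the RFP requires that the vendor did not price."""
    return [item for item in REQUIRED_LINE_ITEMS if item not in quote]


def fully_loaded_range(quote: dict[str, float],
                       pm_low: float = 0.15, pm_high: float = 0.20) -> tuple[float, float]:
    """Low/high total. If PM overhead is not itemized, estimate it as a share
    of the other line items (15-20% by default)."""
    subtotal = sum(quote.values())
    if "project_management" in quote:
        return subtotal, subtotal
    return subtotal * (1 + pm_low), subtotal * (1 + pm_high)


quote = {"development": 400_000, "environment_setup": 20_000, "data_migration": 80_000}
low, high = fully_loaded_range(quote)
print(f"Missing: {missing_line_items(quote)}")
print(f"Fully loaded (before missing items): ${low:,.0f} - ${high:,.0f}")
```

Note that the resulting range still excludes every unpriced item reported as missing, which is exactly the gap the RFP should force the vendor to close.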

Establishing Realistic Cost Benchmarks

Industry benchmarks provide a sanity check against inflated quotes. For legacy modernization projects, a common metric is cost per line of code (LOC). A typical COBOL-to-Java migration can range from $1.50 to $4.00 per LOC. A quote of $8.00/LOC requires a data-driven justification based on complexity or specialized tooling.
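The sanity check is simple arithmetic, sketched below in Python using the $1.50-$4.00 per-LOC range quoted above. The 500,000-line codebase and the $4M quote are hypothetical inputs.

```python
BENCHMARK_PER_LOC = (1.50, 4.00)  # typical COBOL-to-Java range cited above


def benchmark_range(loc: int,
                    per_loc: tuple[float, float] = BENCHMARK_PER_LOC) -> tuple[float, float]:
    """Expected total cost range for a codebase of `loc` lines."""
    return loc * per_loc[0], loc * per_loc[1]


def assess_quote(total: float, loc: int,
                 per_loc: tuple[float, float] = BENCHMARK_PER_LOC) -> tuple[float, str]:
    """Return the quoted cost per LOC and where it falls against the benchmark."""
    rate = total / loc
    if rate < per_loc[0]:
        return rate, "below range: confirm scope, testing, and data migration are included"
    if rate > per_loc[1]:
        return rate, "above range: ask for a complexity- or tooling-based justification"
    return rate, "within range"


low, high = benchmark_range(500_000)
rate, verdict = assess_quote(4_000_000, 500_000)
print(f"Benchmark: ${low:,.0f} - ${high:,.0f}")
print(f"Quote: ${rate:.2f}/LOC, {verdict}")
```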

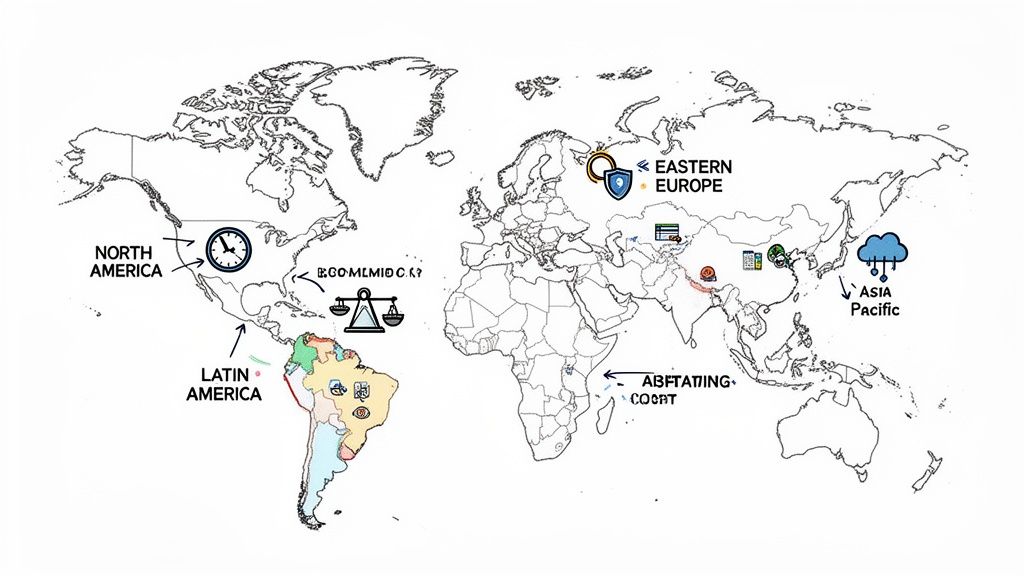

Evaluating Global Delivery Models and Regional Strengths

A partner’s location is a strategic lever that impacts cost, collaboration, and available expertise. Focusing solely on the lowest hourly rate often leads to project failure.

While North America holds 42.7% of the software development market, Asia Pacific is the fastest-growing hub at 27.8%. Enterprise software spending in the U.S. is $868.40 per employee, while in Europe it’s $157.90—a 5.5x difference. These economic disparities create distinct pockets of specialization. For more detail, see the software market dynamics on coherentmarketinsights.com.

Geography is often a proxy for capability. A partner’s location provides insight into their strengths and potential challenges.

Matching The Region To The Problem

A low-cost provider may look good on a spreadsheet, but if they lack the required domain expertise, initial savings will be consumed by rework and missed deadlines. It is more effective to map the region to the project’s risk profile.

For modernizing a core FinTech platform with complex compliance rules, Eastern Europe is a strong candidate due to its concentration of talent in mathematics, engineering, and financial systems. For an agile project requiring tight, real-time collaboration with a U.S. team, a partner in Latin America is often a better choice due to minimal time zone differences.

Choosing a delivery location isn’t just about managing time zones. It’s about aligning your project’s specific risk profile—be it technical complexity, regulatory hurdles, or communication intensity—with a region’s demonstrated strengths.

Regional Delivery Hub Comparison

This breakdown provides a balanced view of the pros, cons, and typical costs for each major delivery hub. Rates are approximate for senior talent and can vary based on the specific tech stack.

| Region | Primary Strengths | Common Challenges | Typical Rate Range (Senior Dev/hr) |
|---|---|---|---|
| Eastern Europe | Deep technical expertise, particularly in FinTech, big data, and security. Strong STEM education. | Geopolitical instability can pose risks. Higher rates compared to Asia and LATAM. | $55 - $95 |
| Latin America | Excellent time zone alignment with North America. High cultural affinity and strong English proficiency. | Talent pool can be less concentrated than in Eastern Europe. Infrastructure maturity varies by country. | $50 - $85 |
| Asia (India/Vietnam) | High scalability and cost-effectiveness. Large talent pool for process-driven projects. | Significant time zone differences (10-12 hours) create communication lags. Cultural differences can impact collaboration. | $30 - $55 |
| North America | No time zone or cultural barriers for U.S. clients. Suitable for projects requiring on-site presence or handling sensitive data. | Highest cost structure globally, often 2-3x more than other regions. | $120 - $200+ |
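To see how the rate ranges translate into budget, the Python sketch below prices a hypothetical 10,000-hour engagement in each region using the table’s senior-developer rates. The optional `overhead` multiplier is an assumption standing in for extra hours lost to rework or coordination; it is not a figure from the table. The open-ended North American rate ($200+) is treated as $200.

```python
# Senior developer hourly rate ranges (USD) from the table above.
RATES = {
    "Eastern Europe": (55, 95),
    "Latin America": (50, 85),
    "Asia (India/Vietnam)": (30, 55),
    "North America": (120, 200),  # table lists "$200+"; upper bound treated as 200
}


def cost_range(region: str, hours: float, overhead: float = 1.0) -> tuple[float, float]:
    """Total labor cost range; `overhead` > 1.0 models extra hours (rework,
    coordination lag) as a simple multiplier on effort."""
    low, high = RATES[region]
    effective_hours = hours * overhead
    return low * effective_hours, high * effective_hours


for region in RATES:
    low, high = cost_range(region, hours=10_000)
    print(f"{region:<22} ${low:>11,.0f} - ${high:>11,.0f}")

# Hypothetical: if a lower-rate team needs 40% more hours due to rework,
# its range overlaps with a higher-rate team's range at baseline effort.
print(cost_range("Asia (India/Vietnam)", hours=10_000, overhead=1.4))
```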

There is no single “best” region. The choice depends on the project’s specific needs. An enterprise software development firm in Poland might be ideal for a complex banking platform, while a team in Colombia could be a better fit for a fast-moving SaaS application. The goal is to make a strategic choice, not just a cheap one.

Frequently Asked Questions

What Is the Biggest Red Flag When Vetting a Software Vendor?

The single biggest red flag is a lack of transparency. This manifests as evasiveness and often points to a deeper issue with delivery capability.

Examples include vague answers about team composition (“our best people will be on it”), marketing-heavy case studies with no hard numbers, and blocking access to senior engineers.

A trustworthy partner is transparent. They will discuss projects that went wrong and the lessons learned. They will introduce you to the actual engineers who will work on your project. Evasive answers suggest they may be hiding a bait-and-switch on talent or weak engineering practices.

Another significant warning sign is a refusal to provide sanitized code samples or architectural diagrams. This often indicates either a lack of confidence in their engineering standards or that the talent responsible for their best work is no longer with the company.

How Should I Structure a Pilot Project to Test a Potential Vendor?

A pilot project should be a meaningful, self-contained slice of the real project, not a proof-of-concept. The goal is to simulate genuine pressure and observe the team’s performance.

Agree on clear, measurable success criteria beforehand. For example, “Deliver a functional user authentication module with 90% unit test coverage within four weeks.”
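Criteria like these are easiest to enforce when they are written down as explicit checks. The Python sketch below encodes the example above (a working authentication module, at least 90% unit test coverage, delivery within four weeks); the field names and the line-based coverage measure are assumptions, and the softer signals evaluated during the pilot, such as communication and problem-solving, still need human judgment.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class PilotCriteria:
    min_coverage: float = 0.90
    max_duration: timedelta = timedelta(weeks=4)


@dataclass
class PilotResult:
    start: date
    delivered: date
    covered_lines: int
    total_lines: int
    acceptance_tests_passed: bool  # e.g. the auth module works end to end


def evaluate_pilot(result: PilotResult,
                   criteria: Optional[PilotCriteria] = None) -> dict[str, bool]:
    """Check each agreed success criterion separately so failures are explicit."""
    criteria = criteria or PilotCriteria()
    coverage = result.covered_lines / result.total_lines if result.total_lines else 0.0
    return {
        "functional": result.acceptance_tests_passed,
        "coverage": coverage >= criteria.min_coverage,
        "on_time": result.delivered - result.start <= criteria.max_duration,
    }


checks = evaluate_pilot(PilotResult(
    start=date(2024, 1, 1), delivered=date(2024, 1, 29),
    covered_lines=880, total_lines=1_000, acceptance_tests_passed=True))
print(checks)
print("PASS" if all(checks.values()) else "FAIL")
```

Reporting each criterion separately, rather than a single pass/fail, makes it clear in the post-pilot review which commitment was missed.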

During the pilot, evaluate:

- Communication Cadence: How are blockers flagged? Is progress reporting clear and consistent?

- Problem-Solving: How do they handle technical snags or ambiguous requirements? Do they seek clarification proactively?

- Code Quality: Does the delivered code meet the standards discussed during the sales process?

The pilot is a test of their communication and project management capabilities, not just their coding ability.

When Should We Build In-House Instead of Hiring an Outside Firm?

The decision hinges on whether the software is a core strategic differentiator.

Build in-house when:

- The software provides a direct competitive advantage (e.g., a high-frequency trading algorithm).

- The required domain knowledge is unique to your business and difficult to transfer.

- The system requires constant, long-term iteration and is deeply integrated with internal teams.

Hire an enterprise software company when:

- You need specialized expertise you lack (e.g., mainframe modernization, MLOps).

- The project has a defined scope and a firm deadline, and you need to move faster than you can hire.

- The system is complex but not a core differentiator (e.g., an internal ERP customization).

Using a partner for well-defined, non-core projects frees up in-house talent to focus on work that provides a market advantage.

Making the right choice requires unbiased, comprehensive data. At Modernization Intel, we provide deep intelligence on 200+ implementation partners, so you can see beyond the sales pitch and make decisions based on real performance metrics.