Applying Domain-Driven Design to Legacy Systems: A Pragmatic Guide

Applying Domain-Driven Design (DDD) to a legacy system isn’t just another modernization tactic; it’s a strategic framework for untangling old code and aligning it with how your business actually works today. Most DDD guides assume a greenfield project. This one doesn’t.

The process is tactical: first, rediscover the true business domain buried in the old code through collaborative workshops. Then, isolate new development with Anti-Corruption Layers. Finally, chip away at the old system, piece by piece, using patterns like Strangler Fig. Skipping these steps is the fastest way to build new code that mirrors old misunderstandings, perpetuating the technical debt you set out to eliminate.

The Hidden Risks in Modernizing Monolithic Systems

Most large-scale legacy modernization projects fail to deliver on their promises. The real danger isn’t the tech stack you choose. The project-killer is inheriting the flawed assumptions and decades of undocumented patches baked into the original system.

Too many teams jump straight into coding. They aim for a “lift and shift” or a rapid rewrite, only to wake up 18 months and $2.3M later with a distributed monolith. It’s the same tangled mess, just spread across a dozen microservices that are now infinitely harder to debug.

The root of the problem is a widening gap between the codebase and the real, living business it’s supposed to support. The architecture that made sense a decade ago has drifted. Without a disciplined approach, your new system will just be a mirror of those old, expensive misunderstandings.

The Cost of Misaligned Modernization

This isn’t just a technical headache; it’s a direct hit to the bottom line. The classic failure is the “Big Bang” rewrite. Teams spend 2-3 years in a development tunnel, delivering zero business value until the high-risk launch day.

All the while, you’re still paying to keep the lights on for the old system, and the business itself keeps evolving. By the time you launch, your new platform is often already out of date.

A modernization project that doesn’t start with a deep understanding of the business domain is just an expensive way to perpetuate technical debt. The goal isn’t to replace old code with new code; it’s to replace an old model with an accurate one.

A Strategic Framework for Success

This is exactly where domain-driven design for a legacy system provides a strategic advantage. DDD forces you to pause development and engage domain experts to map the real-world domain first.

Only then can you make smart, incremental bets instead of gambling the entire budget on one high-stakes rewrite. You can layer this with other proven application modernization strategies to create a structured, defensible plan.

This guide provides a battle-tested path forward. We’re moving past the textbook definitions to give you actionable steps for finding domain boundaries, shielding new code from legacy cruft, and—most importantly—delivering tangible business value every step of the way.

Prioritizing Domain Discovery Over New Code

The single biggest mistake teams make when modernizing a legacy system is starting to write code too soon. The urge to build a new microservice or refactor a complex database schema is strong, but it skips the most critical step: understanding what the business actually does today, not what the system was designed to do 20 years ago.

A domain-driven approach to a legacy system forces you to start with collaborative domain discovery workshops. You engage domain experts, developers, and stakeholders in EventStorming or Domain Storytelling sessions to uncover the true business domain.

A modernization project that begins without deep domain discovery is destined to build a distributed monolith. You will spend millions to recreate old problems on a new platform, perpetuating the very technical debt you aimed to eliminate.

The first phase of the project has one job: unearth the true business domain and purify legacy assumptions. Skip this and risk building new code that mirrors old misunderstandings.

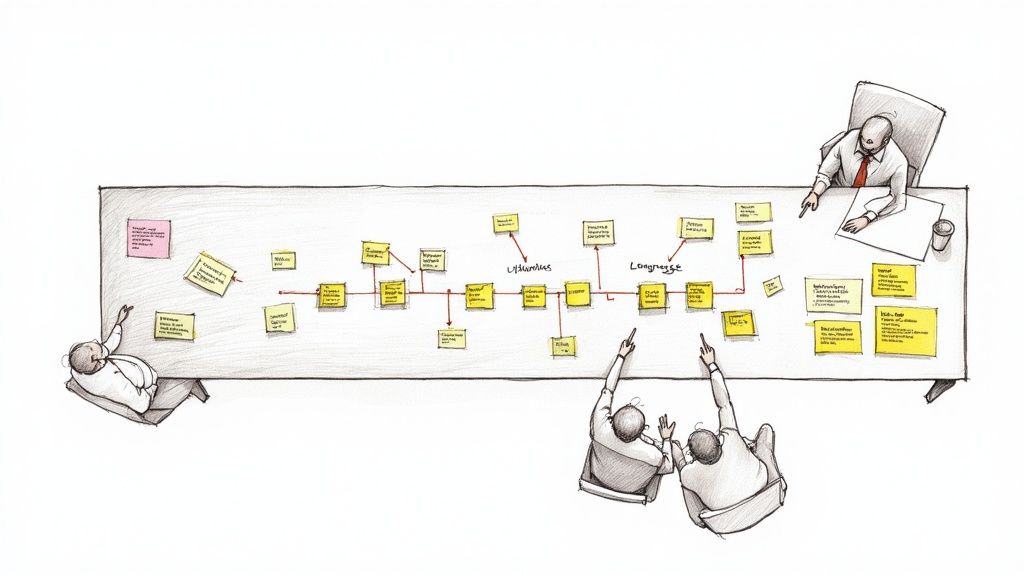

Running Collaborative Discovery Workshops

The fastest way to map the domain is through interactive workshops. Get developers, stakeholders, and—most importantly—the actual domain experts into the same room. Two techniques are particularly effective here: EventStorming and Domain Storytelling.

- EventStorming: A high-energy, sticky-note-driven workshop where you map business processes as a series of domain events like “Order Placed,” “Payment Processed,” and “Shipment Dispatched.” It’s a bottom-up approach that’s effective at exposing hidden complexities and edge cases that your legacy code completely obscures.

- Domain Storytelling: A more top-down approach where domain experts narrate a business process. As they talk, developers and analysts create a visual map of how different actors interact to get work done. It’s excellent for clarifying roles and responsibilities.

The point isn’t to create perfect diagrams. It’s to build a shared understanding and challenge the fossilized assumptions that have been turned into code. You’ll be shocked when a process documented as five steps has morphed into a twelve-step process involving three different spreadsheets and a manual override only one person in accounting knows.

Forging the Ubiquitous Language

A direct result of these workshops is the Ubiquitous Language—a single, shared vocabulary between developers and domain experts. This isn’t just a glossary of terms; it’s the linguistic foundation for the entire project.

When a sales expert says “customer,” and an accounting expert says “client,” do they mean the same thing? Your legacy system probably has one giant Customer table that mashes these two concepts together. The Ubiquitous Language forces precision.

- In the Business: Terms must be precise and have one meaning.

- In the Code: These exact terms must show up in your class names, methods, and schemas.

- In Conversation: The entire team agrees to use this language exclusively.

This shared vocabulary is the bedrock of DDD. It eliminates the error-prone translation that happens when business requirements get turned into technical specs. If the business calls it a “Policy Endorsement,” your code had better have a PolicyEndorsement class, not a generic PolicyUpdateRecord.
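To make the naming rule concrete, here is a minimal Python sketch of what the insurance example might look like in code. The fields (`policy_number`, `effective_date`, `premium_delta`, `reason`) and the `apply_to` behavior are hypothetical, chosen only to show the business's own terms surfacing directly in class and method names.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class Policy:
    """A policy as the underwriting experts describe it (hypothetical fields)."""
    policy_number: str
    annual_premium: Decimal


@dataclass(frozen=True)
class PolicyEndorsement:
    """An amendment to an in-force policy, named exactly as the business names it."""
    policy_number: str
    effective_date: date
    premium_delta: Decimal
    reason: str

    def apply_to(self, policy: Policy) -> Policy:
        # An endorsement only applies to the policy it was written against.
        if policy.policy_number != self.policy_number:
            raise ValueError("endorsement does not belong to this policy")
        # Return a new Policy instead of mutating; the premium changes by the delta.
        return Policy(policy.policy_number, policy.annual_premium + self.premium_delta)


policy = Policy("P-1001", Decimal("1200.00"))
endorsement = PolicyEndorsement("P-1001", date(2024, 7, 1), Decimal("150.00"), "Added named driver")
updated = endorsement.apply_to(policy)
print(updated.annual_premium)
```

A domain expert reading this code should recognize every noun and verb in it. That is the practical test of whether the Ubiquitous Language has actually made it into the codebase.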

Purifying Legacy Assumptions

Legacy systems are time capsules of old business models. They are packed with logic that is no longer relevant, or worse, is actively holding the business back. The discovery workshops act as a filter, helping you separate today’s business reality from yesterday’s baggage.

For instance, a 15-year-old e-commerce system might have incredibly complex logic for handling mail-in checks. This feature might now account for less than 0.1% of revenue but creates a massive maintenance headache.

Without this discovery phase, a team might spend months faithfully porting that obsolete feature into a new microservice. Discovery allows a data-driven decision to kill it, simplifying your new architecture and freeing up the team to work on features that generate revenue today.

Map Your Monolith Against Business Reality

The EventStorming sessions are done. The team finally sees the true shape of the business domain. The temptation to jump into designing new microservices is almost overwhelming. Don’t do it.

The next step is to map the current architecture against these rediscovered domains. Document the legacy “as-is” monolith, big ball of mud and all, using C4 models or context mapping. Then identify subdomains (core, supporting, generic) and bounded contexts to reveal where the code and the business are misaligned. This map becomes a powerful tool: executives can use it to quantify modernization ROI by prioritizing high-business-impact areas first.

Visualizing the “As-Is” Architecture

Documenting a legacy system requires more than a stale component diagram. The C4 model is a solid starting point for visualizing architecture at different levels of detail (System, Container, Component, Code).

Pair this with Context Mapping, a core DDD pattern for identifying the invisible fences and tribal boundaries inside the monolith. Hunt for clues like:

- Linguistic divides: Places where the word “Customer” means one thing in billing and something completely different in shipping.

- Data contention: Spots where different parts of the code are in a constant tug-of-war over the same database tables.

- Team boundaries: Natural seams often appear around the parts of the system that different teams own.
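The data-contention clue can be checked mechanically once you have an inventory of which code modules read or write which tables. The sketch below assumes such an inventory already exists as a plain dictionary (in practice you might build it from query logs or static analysis); the module and table names are hypothetical.

```python
from collections import defaultdict


def find_contended_tables(access_map: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return tables touched by more than one module, mapped to the sorted modules touching them."""
    touched_by: dict[str, set[str]] = defaultdict(set)
    for module, tables in access_map.items():
        for table in tables:
            touched_by[table].add(module)
    return {
        table: sorted(modules)
        for table, modules in sorted(touched_by.items())
        if len(modules) > 1
    }


# Hypothetical inventory: legacy module -> tables it reads or writes.
access_map = {
    "billing": {"CUSTOMER", "INVOICE"},
    "shipping": {"CUSTOMER", "SHIPMENT"},
    "marketing": {"CUSTOMER", "CAMPAIGN"},
    "reporting": {"INVOICE", "SHIPMENT"},
}

contended = find_contended_tables(access_map)
for table, modules in contended.items():
    print(f"{table}: {', '.join(modules)}")
```

A table like CUSTOMER that shows up under many modules is a strong hint that several bounded contexts are sharing, and fighting over, one overloaded model.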

Creating these maps moves you from a vague feeling of “our system is a mess” to a concrete artifact that pinpoints the sources of pain.

Classify Subdomains to Focus Your Fire

Not all parts of your business are created equal. Your modernization effort has to reflect this reality, or you’ll burn budget on the wrong things.

Once you’ve mapped the system, classify each part into one of three buckets:

- Core Domains: This is your competitive differentiator. For a fintech, it’s the proprietary credit scoring algorithm. For an e-commerce platform, it’s the recommendation engine. This is where you invest your best engineering talent.

- Supporting Subdomains: These are necessary for business function but offer no competitive edge, like an invoicing system or a company blog CMS. They need to work, but they don’t need to be revolutionary.

- Generic Subdomains: These are solved problems. You should almost never build these yourself in 2024. This includes authentication, sending emails, or processing payments.

Prioritizing core domains accelerates ROI, often showing tangible wins in 6-12 months, which justifies broader investment. Generic and supporting subdomains can stay on the legacy system longer or move to off-the-shelf solutions.

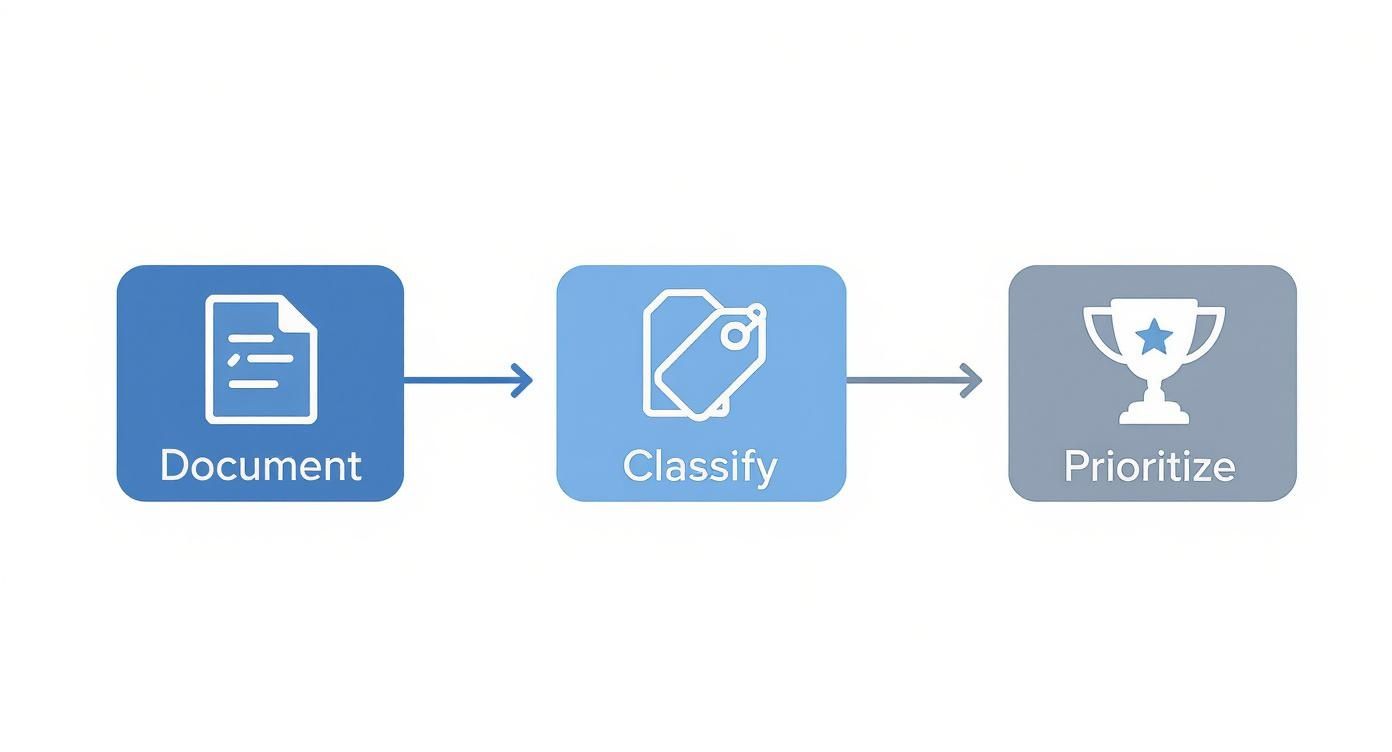

Create a Data-Driven Priority Matrix

With your subdomains classified, you can build a simple but powerful matrix to guide your investment. This framework helps you move past gut feelings and make defensible decisions about where to start. You’ll want to explore various software migration patterns to figure out the right approach for each piece.

This strategic mapping is becoming standard practice. One analysis of 36 studies found that microservices were the focus in a whopping 44% of them, showing just how dominant this pattern is for breaking down monoliths. You can dig into the full research on these DDD implementation trends.

Here’s a practical scoring framework to prioritize modernization targets.

Modernization Priority Matrix

This scoring system moves the conversation from “what we feel is important” to “what the data shows is important.” By multiplying Business Impact by Technical Complexity, you get a clear, quantifiable score to guide your roadmap.

| Subdomain | Type (Core, Supporting, Generic) | Business Impact (1-10) | Technical Complexity (1-10) | Modernization Priority Score |
|---|---|---|---|---|
| Credit Scoring Engine | Core | 10 | 9 | 90 (High) |
| Customer Invoicing | Supporting | 6 | 4 | 24 (Medium) |
| Internal Blog CMS | Supporting | 2 | 3 | 6 (Low) |
| User Authentication | Generic | 8 | 2 | 16 (Outsource/Buy) |

The results immediately clarify where to focus. High-impact, high-complexity Core domains are your primary targets. Low-scoring or Generic subdomains are candidates for outsourcing or replacement with an off-the-shelf tool. This map gives you a clear, data-backed rationale to present to executives. It’s not just a plan; it’s a business case.
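The scoring is simple enough to keep in a script next to the roadmap, which makes it easy to rescore as estimates change. This is a minimal sketch: the score is Business Impact multiplied by Technical Complexity, as described above, but the High/Medium/Low cut-offs (at least 50, at least 20) are assumptions picked so the sample rows land in the same bands as the table. Generic subdomains are flagged for buy-or-outsource regardless of score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subdomain:
    name: str
    kind: str             # "Core", "Supporting", or "Generic"
    business_impact: int  # 1-10
    complexity: int       # 1-10

    def __post_init__(self) -> None:
        for value in (self.business_impact, self.complexity):
            if not 1 <= value <= 10:
                raise ValueError("ratings must be between 1 and 10")

    @property
    def score(self) -> int:
        # Priority score = Business Impact x Technical Complexity.
        return self.business_impact * self.complexity

    @property
    def band(self) -> str:
        # Generic subdomains are solved problems: recommend buying, whatever the score.
        if self.kind == "Generic":
            return "Outsource/Buy"
        # Assumed thresholds; tune them to your own portfolio.
        if self.score >= 50:
            return "High"
        if self.score >= 20:
            return "Medium"
        return "Low"


portfolio = [
    Subdomain("Credit Scoring Engine", "Core", 10, 9),
    Subdomain("Customer Invoicing", "Supporting", 6, 4),
    Subdomain("Internal Blog CMS", "Supporting", 2, 3),
    Subdomain("User Authentication", "Generic", 8, 2),
]

# Print every subdomain, highest score first.
for s in sorted(portfolio, key=lambda s: s.score, reverse=True):
    print(f"{s.name:<24} {s.score:>3}  {s.band}")
```

Keeping the thresholds explicit in code makes the prioritization rule itself reviewable, which helps when executives ask why one area was funded before another.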

Building Defenses with Anti-Corruption Layers

You’ve mapped the monolith. Now for execution. How do you build clean, new services without the legacy system’s “big ball of mud” bleeding all over them?

Your primary defense is an Anti-Corruption Layer (ACL): a strategic boundary, built as a facade and adapter layer, that translates data, APIs, and events between your new domain model and the often-bizarre structures of the legacy system, isolating the new model from legacy contamination.

This layer is what isolates your new bounded contexts. It stops the legacy system’s anemic data models—like a Customer table with 150 nullable columns—from polluting the rich, behavior-focused models you’re building with DDD. Without an ACL, your new code will inevitably compromise to fit the old world, recreating the same problems.

Implementing an Anti-Corruption Layer

The ACL is a dedicated translation service, made up of a few key parts:

- Facade: A clean, simplified interface for your new bounded context. It hides the gnarly details of talking to the legacy system.

- Adapter: Handles the raw communication—calling an old SOAP endpoint, querying a legacy database, or parsing a flat file.

- Translator: The heart of the ACL. It maps the old world to the new. A legacy `status_code` of “PN” becomes a clean, explicit `PaymentStatus.Pending` enum value in your new model. Making the ACL bidirectional, so that it synchronizes changes in both directions, allows for gradual data migration.
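Here is a minimal Python sketch of how the three parts might fit together. It rests on stated assumptions: the legacy side is simulated by an in-memory lookup standing in for a SOAP call or database query, the legacy codes other than “PN” (“PD”, “RJ”) and all class and field names are hypothetical, and Python’s upper-case enum naming turns `PaymentStatus.Pending` into `PaymentStatus.PENDING`. The translator is the only place that knows legacy codes exist.

```python
from dataclasses import dataclass
from enum import Enum


class PaymentStatus(Enum):
    PENDING = "pending"
    PAID = "paid"
    REJECTED = "rejected"


@dataclass(frozen=True)
class Payment:
    """Clean model owned by the new bounded context."""
    payment_id: str
    status: PaymentStatus
    amount_cents: int


class LegacyPaymentAdapter:
    """Adapter: raw communication with the legacy system (simulated with a dict)."""

    def __init__(self, rows: dict[str, dict[str, str]]) -> None:
        self._rows = rows

    def fetch_row(self, payment_id: str) -> dict[str, str]:
        # A real adapter might call a SOAP endpoint or query the legacy database here.
        return self._rows[payment_id]


class PaymentTranslator:
    """Translator: maps legacy codes and formats onto the new domain model."""

    _STATUS_CODES = {
        "PN": PaymentStatus.PENDING,
        "PD": PaymentStatus.PAID,      # hypothetical legacy code
        "RJ": PaymentStatus.REJECTED,  # hypothetical legacy code
    }

    def to_domain(self, row: dict[str, str]) -> Payment:
        code = row["status_code"].strip()  # legacy columns may be space-padded
        if code not in self._STATUS_CODES:
            # Fail loudly instead of letting an unknown legacy value leak into the new model.
            raise ValueError(f"unmapped legacy status_code: {code!r}")
        return Payment(
            payment_id=row["pay_id"],
            status=self._STATUS_CODES[code],
            amount_cents=int(row["amt_cents"]),
        )


class PaymentFacade:
    """Facade: the only interface the new bounded context sees."""

    def __init__(self, adapter: LegacyPaymentAdapter, translator: PaymentTranslator) -> None:
        self._adapter = adapter
        self._translator = translator

    def get_payment(self, payment_id: str) -> Payment:
        return self._translator.to_domain(self._adapter.fetch_row(payment_id))


# Hypothetical legacy row, including fixed-width padding on the status code.
legacy_rows = {"1001": {"pay_id": "1001", "status_code": "PN ", "amt_cents": "4999"}}
facade = PaymentFacade(LegacyPaymentAdapter(legacy_rows), PaymentTranslator())
payment = facade.get_payment("1001")
print(payment.status)
```

Rejecting unmapped codes is a deliberate choice: a loud failure during migration is cheaper than a silently wrong status in the new model.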

Before writing code for an ACL, you need a plan. Document, classify, and prioritize which parts of the legacy system to tackle first.

This structured approach ensures your engineering time is spent on the highest-impact areas first.

The Strangler Fig Pattern in Action

If the ACL is your shield, the Strangler Fig is your battle strategy. Adopt the Strangler Fig pattern to replace the legacy system incrementally, without downtime. Coined by Martin Fowler, the pattern involves building new bounded contexts or microservices around the edges of the legacy system and routing traffic to them through proxies or API gateways. You intercept requests and redirect new features to modern code while the legacy system keeps running.

An Anti-Corruption Layer without the Strangler Fig pattern is a well-defended island with no bridge. The Strangler Fig provides the incremental, risk-managed path to migrate users and functionality from the old world to the new, using the ACL as the checkpoint.

Initially, a gateway routes 100% of requests to the monolith. Then, your team builds a new “User Profile” service. You configure the gateway to route all /api/v2/users requests to your new service, while every other request continues to flow to the legacy system, uninterrupted.
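A real deployment would express this rule in the gateway’s own configuration. As a neutral illustration, the hypothetical Python function below captures just the routing decision: the longest matching path prefix wins, and anything unmatched falls through to the monolith. The upstream names are placeholders.

```python
LEGACY_UPSTREAM = "legacy-monolith"

# Hypothetical routing table: path prefix -> upstream service.
# It grows one entry at a time as slices of the monolith are strangled.
ROUTES = {
    "/api/v2/users": "user-profile-service",
}


def _matches(path: str, prefix: str) -> bool:
    # Match whole path segments so "/api/v2/users" does not capture "/api/v2/usersettings".
    return path == prefix or path.startswith(prefix.rstrip("/") + "/")


def choose_upstream(path: str, routes: dict[str, str] = ROUTES) -> str:
    candidates = [prefix for prefix in routes if _matches(path, prefix)]
    if not candidates:
        # Default: everything not yet migrated keeps flowing to the legacy system.
        return LEGACY_UPSTREAM
    # The most specific (longest) prefix wins.
    return routes[max(candidates, key=len)]


for p in ["/api/v2/users/42", "/api/v2/usersettings", "/api/v1/orders/7"]:
    print(p, "->", choose_upstream(p))
```

Defaulting to the legacy upstream is what makes the pattern safe: forgetting a route sends traffic to the system that already works.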

De-Risking the Rollout

This gradual routing sharply reduces risk and delivers value from day one. You can control traffic flow with surgical precision.

- Feature Flags: Route traffic based on user segments. Enable the new feature for internal users first, then 5% of external customers. As confidence grows, ramp up the percentage.

- Domain-Based Routing: Route traffic based on user action. For an e-commerce platform, redirect requests for a new “Loyalty Program” domain to the new service, while the critical “Checkout” process stays on the battle-tested legacy system.
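Both routing styles can be combined in a single decision. The sketch below is an illustration under assumptions, not any particular feature-flag product’s API. Internal users always get the new path. External users are bucketed deterministically by hashing their ID, so a given customer keeps a consistent experience as the percentage ramps up. Only domains listed as migrated are ever eligible. The domain names and the `@example.com` internal-user rule are hypothetical.

```python
import hashlib

# Hypothetical set of domains that have a new implementation available.
MIGRATED_DOMAINS = {"loyalty"}


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100) using SHA-256."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_new_service(domain: str, user_id: str, email: str, rollout_percent: int) -> bool:
    # Domain-based routing: unmigrated domains (e.g. "checkout") always stay on legacy.
    if domain not in MIGRATED_DOMAINS:
        return False
    # Internal users see the new implementation first.
    if email.endswith("@example.com"):
        return True
    # Percentage rollout: a user is included if their stable bucket is below the threshold.
    return rollout_bucket(user_id) < rollout_percent


print(use_new_service("checkout", "cust-1", "a@shop.test", 100))
print(use_new_service("loyalty", "emp-7", "dev@example.com", 0))
```

Because buckets are stable, raising `rollout_percent` from 5 to 50 only adds users; nobody who already had the new experience gets bounced back to the old one, and setting it to 0 is an instant rollback for external traffic.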

This method transforms a multi-year migration into a series of small, manageable, and reversible steps. Route by feature flags or domain to minimize risk and deliver value continuously.

Executing a Long-Term Modernization Strategy

Applying DDD to a legacy monolith is a multi-year marathon. Lasting success comes from strategic and organizational shifts.

Your first move must deliver tangible business value, fast. This means being ruthless about prioritizing your core domains—the parts of your business that provide a competitive edge.

Focus initial efforts on competitive differentiators (e.g., pricing engines in fintech, order fulfillment in e-commerce). This can show a clear, measurable ROI within 6-12 months, justifying the larger investment for the full journey. Supporting or generic subdomains can wait or be replaced by an off-the-shelf solution.

Aligning Teams with Bounded Contexts

Technical boundaries on a whiteboard are not enough. The most successful modernizations align teams vertically around bounded contexts: reorganize into cross-functional teams that each own an end-to-end domain, which Team Topologies calls stream-aligned teams.

In an insurance company, a “Policy” team should own everything related to the policy bounded context. This structure kills the endless handoffs that plague traditional, layered teams (UI team, API team, DB team). Ignore this and watch your new architecture erode back into a monolith.

Conway’s Law is undefeated: your software architecture will inevitably mirror your communication structure.

When a team owns a domain, they become the undisputed experts. This accelerates delivery and ensures the ubiquitous language stays consistent as new features are built.

Strategic Data Ownership and Migration

This is non-negotiable: a new bounded context must own its data. Letting new services query the legacy database directly creates tight coupling that defeats the purpose of modernization. Synchronize data ownership and migration strategically.

The playbook that works:

- Declare New Source of Truth: The new service’s data store is the authority for its domain.

- Backfill and Synchronize: Backfill the new data store from the legacy database via events or batch jobs, and keep it synchronized until cutover. Use event sourcing where replayability adds value.

- Cutover Reads, Then Writes: First, shift read traffic to the new data store. Once stable, cut over writes.

- Decommission: After a validation period, you can archive that slice of data from the old database.
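The read-then-write cutover can be modeled as an explicit migration phase that a repository consults on every call. The minimal sketch below uses in-memory dicts for both stores and a hypothetical `sync_from_legacy` method standing in for the event stream or batch job; all names are illustrative. Until writes are cut over, the legacy store is the only write target and changes flow one way into the new store, which is how this approach sidesteps dual writes.

```python
from enum import Enum, auto
from typing import Optional


class Phase(Enum):
    BACKFILL = auto()         # legacy is the source of truth; new store is being filled
    READS_CUT_OVER = auto()   # reads served from the new store; writes still go to legacy
    WRITES_CUT_OVER = auto()  # new store is the source of truth for reads and writes


class CustomerRepository:
    def __init__(self) -> None:
        self.phase = Phase.BACKFILL
        self.legacy: dict[str, str] = {}
        self.new: dict[str, str] = {}
        self._change_log: list[tuple[str, str]] = []  # simulated change-data-capture feed

    def save(self, key: str, value: str) -> None:
        if self.phase is Phase.WRITES_CUT_OVER:
            self.new[key] = value
        else:
            # Single write target: the legacy store. The change is captured for later sync.
            self.legacy[key] = value
            self._change_log.append((key, value))

    def get(self, key: str) -> Optional[str]:
        store = self.legacy if self.phase is Phase.BACKFILL else self.new
        return store.get(key)

    def backfill(self) -> None:
        # One-off batch copy of existing legacy data; it already includes logged changes.
        self.new.update(self.legacy)
        self._change_log.clear()

    def sync_from_legacy(self) -> int:
        # Apply captured legacy changes to the new store, oldest first.
        applied = len(self._change_log)
        for key, value in self._change_log:
            self.new[key] = value
        self._change_log.clear()
        return applied

    def advance(self) -> None:
        # Only move forward once the new store has caught up with legacy.
        if self._change_log:
            raise RuntimeError("sync pending changes before advancing")
        if self.phase is Phase.BACKFILL:
            self.phase = Phase.READS_CUT_OVER
        elif self.phase is Phase.READS_CUT_OVER:
            self.phase = Phase.WRITES_CUT_OVER


repo = CustomerRepository()
repo.save("c1", "Ada")     # BACKFILL: written to legacy only
repo.backfill()
repo.advance()             # -> READS_CUT_OVER
repo.save("c1", "Ada L.")  # still written to legacy, captured for sync
repo.sync_from_legacy()
print(repo.get("c1"))      # now read from the new store
repo.advance()             # -> WRITES_CUT_OVER
```

One trade-off is visible in the middle phase: a read can briefly return stale data until the next sync runs, so that window should be short and observable in production.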

This approach avoids dual-write pitfalls and enables eventual decommissioning of legacy databases. You can get a deeper look at these methods in our guide on incremental legacy modernization strategies.

Planning for the Hybrid Long Haul

Plan for hybrid operation long-term. A complete replacement often takes 3-5+ years.

This reality means you need robust observability, feature toggles, and well-tested rollback paths. Successful modernizations treat strangling as a marathon, delivering incremental business capabilities while de-risking the journey.

This isn’t just a niche technical problem; it’s a massive market trend. The Domain-Driven Design (DDD) market has a projected CAGR of 23.40%, driven largely by firms tackling their legacy systems. You can dig into more insights about this growing market on HTF Market Intelligence. A successful domain-driven design legacy system modernization delivers continuous business value while carefully de-risking a long and complex journey.

Proving It Works: How to Measure DDD’s ROI and Justify the Investment

How do you prove this multi-year DDD effort is paying off? Your CFO won’t sign a check for year two based on vague promises of “cleaner code.” To justify the long-term investment that a domain-driven design legacy system transformation requires, you need tangible metrics that tie technical wins to business value.

From Code Metrics to P&L Impact

Your best weapon is tracking modularity. Use a concrete metric like a Modularity Maturity Index (MMI). Monitor progress by tracking cohesion, coupling, and consistency quarterly. This provides executives measurable evidence of reduced accidental complexity and lower maintenance costs over 2-3 years.

That MMI chart is your proof. It shows a measurable reduction in the complexity that has been slowing your team.
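This is not the MMI itself, which covers several criteria and is more than a few lines of code. A cheap automated signal you can track alongside it is the share of dependencies that cross a bounded-context boundary. The sketch below assumes you can export module-level dependencies as (from, to) pairs and takes context membership from the first segment of the dotted module name; the module names and both quarters’ data are hypothetical.

```python
def cross_context_ratio(dependencies: list[tuple[str, str]]) -> float:
    """Fraction of module dependencies whose endpoints live in different bounded contexts."""
    if not dependencies:
        return 0.0
    crossing = sum(
        1 for src, dst in dependencies
        if src.split(".")[0] != dst.split(".")[0]
    )
    return crossing / len(dependencies)


# Hypothetical dependency exports from two quarters.
q1 = [
    ("billing.invoice", "shipping.parcel"),
    ("billing.invoice", "billing.tax"),
    ("shipping.parcel", "billing.tax"),
    ("shipping.route", "shipping.parcel"),
]
q2 = [
    ("billing.invoice", "billing.tax"),
    ("billing.invoice", "shipping.api"),
    ("shipping.route", "shipping.parcel"),
    ("shipping.parcel", "shipping.api"),
]
print(f"Q1: {cross_context_ratio(q1):.0%}  Q2: {cross_context_ratio(q2):.0%}")
```

A falling ratio quarter over quarter is an easy-to-explain trend line for the same story the MMI tells: the contexts are becoming more independent.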

Then, you translate that chart into business outcomes:

- Faster Feature Delivery: Show how decoupling a specific bounded context directly cut the lead time for a new feature from six weeks to two.

- Lower Maintenance Costs: High cohesion means fewer complex bugs. Track the reduction in P1 incidents or hours spent on operational support for a newly isolated context. That’s a direct cut in opex.

This data changes the conversation. You’re no longer talking about abstract technical goals. You’re presenting an evidence-backed case that the investment is paying off over a 2-3 year horizon. Along the way, favor explicit architectures, such as hexagonal or clean architecture, over layered spaghetti.

DDD is more than a technical playbook; it’s about creating a shared language and strategic alignment between tech and the business. That alignment stops you from making uninformed bets.

Ultimately, this strategic clarity justifies sustained investment. Global IT spend was projected to top $4.66 trillion in 2023. DDD is how you de-risk your slice of that spend. By showing precisely how architectural changes align with core business goals, you build the confidence needed to secure funding for the entire journey. You can learn more about how DDD gets IT and business leaders on the same page here.

Common Questions About DDD for Legacy Systems

When you bring up Domain-Driven Design for a legacy modernization project, you’re going to get questions. Here are the straight answers to the questions I hear most often.

How Long Does a DDD Modernization Project Typically Take?

This is not a quick win. For a legacy monolith of any real complexity, a full modernization using DDD principles is realistically a 3-5 year journey.

But the goal isn’t a far-off “big bang” launch. Success is measured by shipping new business value, continuously. You should be able to show tangible improvements from modernizing your most critical domains within the first 6-18 months. The project is working if it’s consistently cutting operational risk and letting you ship features faster, even while chunks of the old system are still running.

What Is the Biggest Mistake Teams Make?

The single most common—and expensive—mistake is rushing the domain discovery phase. Teams feel immense pressure to start writing code, so they try to reverse-engineer the domain model by staring at the legacy database schema.

This is a fatal error. It guarantees you will bake outdated business assumptions and broken logic right into your new system. The legacy code is a fossilized record of old requirements; treating it as the source of truth means you’re just pouring old concrete into a new foundation.

You must get domain experts in a room for collaborative modeling sessions like EventStorming. It’s non-negotiable. Skipping this is planning to fail.

Can You Use DDD Without Moving to Microservices?

Absolutely. While DDD and microservices pair well in legacy modernization, the principles themselves are deployment-agnostic. DDD is about taming complexity within the business domain.

You can apply DDD to break a monolith into well-defined, cohesive modules—your Bounded Contexts—that are still deployed as a single application. This “modular monolith” approach can slash complexity, make the system easier to maintain, and act as a strategic stepping stone toward a full microservices architecture if and when it makes business sense.
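In a modular monolith, bounded-context boundaries are enforced by convention and tooling rather than by the network. As one possible approach, sketched here with Python’s standard `ast` module, a build step can reject imports that reach into another context’s internals. The convention that each context exposes only a `<context>.api` module, and the context names, are assumptions for this sketch.

```python
import ast


def boundary_violations(module_name: str, source: str, contexts: set[str]) -> list[str]:
    """List imports in `source` that reach into another context's non-public modules.

    Assumed convention: the first dotted segment of a module name is its bounded
    context, and `<context>.api` is the only part other contexts may import.
    """
    own_context = module_name.split(".")[0]
    targets: list[str] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            targets.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Treat "from billing import api" as a reference to "billing.api".
            # Relative imports (level > 0) stay inside the current package and are skipped.
            targets.extend(f"{node.module}.{alias.name}" for alias in node.names)
    violations = []
    for target in targets:
        context, *rest = target.split(".")
        if context == own_context or context not in contexts:
            continue  # same-context and third-party/stdlib imports are not checked
        if rest[:1] != ["api"]:
            violations.append(target)
    return sorted(violations)


CONTEXTS = {"billing", "shipping", "catalog"}

shipping_source = """
import os
from billing import api
from billing.api import InvoiceSummary
from billing.internal.tax import vat_rate
import catalog.repository
from .parcel import Parcel
"""

print(boundary_violations("shipping.dispatch", shipping_source, CONTEXTS))
```

Run in CI, a check like this keeps the modules honest today and makes a later extraction into separate services far less painful, because the cross-context calls already go through a narrow API.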

Making the right call on a modernization partner requires unbiased, data-driven intelligence. Modernization Intel provides deep research on 200+ vendors, offering transparent cost data and honest failure analysis to help you build a defensible vendor shortlist. Learn more about building a vendor shortlist.
