10 Microservices Migration Case Studies That Define the Industry

Migrating from a monolithic architecture to microservices is a high-stakes decision. Over 50% of initial microservices migration projects fail to meet their objectives, often due to poor planning, underestimated complexity, or a fundamental misunderstanding of the operational overhead involved. This listicle moves beyond generic success stories to provide a detailed, tactical analysis of real-world microservices migration case studies.

We will dissect the strategies of pioneers like Netflix and Amazon, but also examine the critical lessons from failures and rollbacks. Each case study breaks down the specific business drivers, the architectural before-and-after, and the measurable outcomes. We analyze the “how”—the specific patterns used (like Strangler Fig), the team structures that enabled success (like Spotify’s squads), and the failure modes that emerged. You will find concrete data on timelines, performance metrics, and the strategic trade-offs involved.

This is not a theoretical exercise. The goal is to equip technical leaders—CTOs, VPs of Engineering, and engineering managers—with a strategic framework grounded in evidence. By exploring both successes and instructive failures, you will gain actionable insights into planning your own migration. This resource provides the behind-the-scenes details needed to evaluate whether a microservices architecture is the right solution for your specific context, and if so, how to execute the transition while mitigating the substantial risks.

1. Netflix: The Canonical Case Study in Resilience

Facing downtime risks from a monolithic DVD rental system, Netflix found its catalyst in a 2008 database corruption event that halted business for three days. Starting in 2009, it migrated incrementally, moving non-critical services first over the initial two to three years of a transition that ultimately took nearly seven years. The goal was not cost savings but extreme availability for the pivot to streaming.

The migration wasn’t a “big bang” rewrite but a gradual strangling of the monolith. This enabled handling billions of daily requests, fault isolation during outages, and independent scaling—delivering 10x faster deployments and establishing chaos engineering practices (e.g., Chaos Monkey) that remain industry-standard for resilient distributed systems.

Strategic Analysis & Breakdown

- Problem: The core issue was not performance but availability. A single database failure brought the entire business down.

- Approach: Instead of a simple “lift and shift,” they re-architected entirely for the cloud, treating AWS as a dynamic, fault-tolerant platform.

- Key Tactic - Chaos Engineering: To ensure resilience, Netflix invented “Chaos Monkey,” a tool that randomly terminates production instances. This forced engineers to design services that could withstand failure from day one.
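
The mechanism behind Chaos Monkey is simple enough to sketch. The Python below is a minimal illustration, not Netflix's code: during a run, it picks one random instance from each service group that has opted in and hands it to a `terminate` callback. `Instance`, the group names, the opt-in set, and `terminate` are hypothetical stand-ins for a real cloud provider API.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class Instance:
    instance_id: str
    group: str  # hypothetical service/cluster name


def chaos_round(
    instances: list[Instance],
    opted_in_groups: set[str],
    terminate: Callable[[Instance], None],
    rng: random.Random,
) -> list[Instance]:
    """Terminate at most one randomly chosen instance per opted-in group."""
    by_group: dict[str, list[Instance]] = {}
    for inst in instances:
        if inst.group in opted_in_groups:
            by_group.setdefault(inst.group, []).append(inst)

    victims = []
    for group in sorted(by_group):  # sorted so a seeded rng gives reproducible runs
        victim = rng.choice(by_group[group])
        terminate(victim)  # a real tool would call the cloud provider's API here
        victims.append(victim)
    return victims


if __name__ == "__main__":
    fleet = [
        Instance("i-1", "recommendations"),
        Instance("i-2", "recommendations"),
        Instance("i-3", "billing"),
        Instance("i-4", "playback"),
    ]
    chaos_round(fleet, {"recommendations", "playback"},
                lambda i: print("terminating", i.instance_id), random.Random(42))
```

Restricting runs to an opt-in set (an assumption of this sketch) lets teams enroll a service once they believe it survives instance loss, after which that belief is tested continuously rather than assumed.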

Actionable Takeaways & Replicable Methods

- Migrate Incrementally: Tackle low-risk, non-customer-facing services first. The full migration took Netflix nearly seven years; patience is a strategic asset.

- Embrace “You Build It, You Run It”: Netflix decentralized ownership, making development teams responsible for the entire lifecycle of their services, from coding to deployment and operations.

- Invest in Tooling: A successful microservices architecture relies on a robust ecosystem of supporting tools. Netflix built its own platform-as-a-service (PaaS) and open-sourced components like Hystrix (fault tolerance) and Eureka (service discovery).
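
Hystrix's central idea was the circuit breaker: after repeated failures, stop calling a struggling dependency for a while and return a fallback instead, so one slow service cannot drag down its callers. The sketch below illustrates that pattern in plain, single-threaded Python; it is not the Hystrix API (Hystrix is a Java library), and the class name, thresholds, and timeout are illustrative assumptions.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Closed -> open after `failure_threshold` consecutive failures; once
    `reset_timeout_s` has passed, the next call is let through as a trial
    (half-open). A successful call closes the breaker again."""

    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock  # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout_s:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        state = self.state
        if state == "open":
            return fallback()  # fail fast: do not touch the struggling dependency
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            return fallback()
        self.failures = 0  # any success closes the breaker
        self.opened_at = None
        return result


if __name__ == "__main__":
    def fetch_recommendations() -> list[str]:
        raise ConnectionError("recommendations service unavailable")  # simulated outage

    breaker = CircuitBreaker(failure_threshold=2, reset_timeout_s=10.0)
    for _ in range(3):
        titles = breaker.call(fetch_recommendations, fallback=lambda: ["popular titles"])
        print(titles, breaker.state)
```

The fallback is where product thinking enters: a generic “popular titles” row is a degraded experience, but far better than an error page.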

If your organization is grappling with the limitations of a monolithic system, there is much to learn from Netflix’s approach to microservices.

2. Amazon: SOA Driven by Organizational Structure

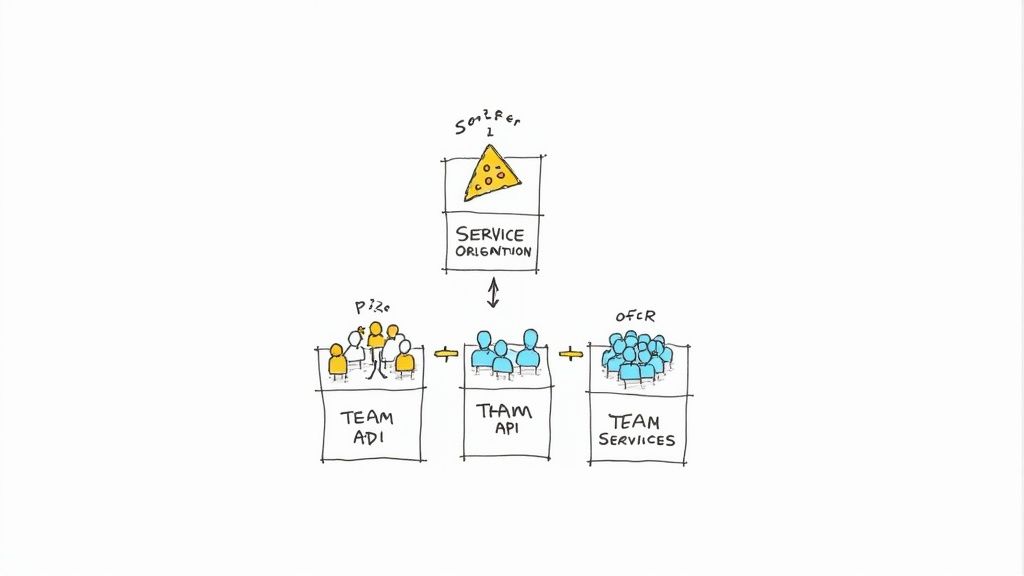

As e-commerce exploded, Amazon’s monolith hindered velocity. In 2002, Jeff Bezos issued a mandate forcing teams to refactor into loosely coupled services with strict APIs. This led to the “two-pizza” team model, where small, autonomous units owned their services end-to-end.

The outcome was a system that could handle peak loads like Prime Day without single points of failure, while fostering ownership that accelerated innovation across thousands of services. This internal service-oriented architecture (SOA) was the foundation for what would become Amazon Web Services (AWS). This microservices migration case study is unique because it was driven by organizational design, not just technology.

Strategic Analysis & Breakdown

- Problem: The primary issue was coordination overhead. A tightly coupled monolithic codebase meant that even small changes required complex coordination between large teams, slowing innovation to a crawl.

- Approach: Instead of just refactoring code, Amazon refactored its organization. The “two-pizza team” model enforced strict ownership and decentralization.

- Key Tactic - The Bezos Mandate: The top-down directive was unambiguous: all teams will communicate via service interfaces, without exception. This eliminated back-channels and direct database access between services, enforcing true architectural separation.

Actionable Takeaways & Replicable Methods

- Align Architecture with Org Structure: Conway’s Law states that systems mirror the communication structures of the organizations that build them. Amazon deliberately inverted this: they structured their organization to achieve the architecture they desired.

- Establish Clear Ownership Boundaries: The “You Build It, You Run It” mantra was central to Amazon’s success. Each two-pizza team was fully responsible for its service’s lifecycle.

- Mandate and Enforce API Contracts: The success of a distributed system hinges on well-defined, stable APIs. Amazon’s strict mandate ensured that services were designed for consumption from the outset.
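
One way to make “designed for consumption” enforceable is a consumer-driven contract check run in the provider's build: each consumer declares the fields it depends on, and the provider cannot ship a response that breaks them. The Python sketch below is a hand-rolled illustration, not Amazon's tooling; `ORDER_CONTRACT` and its fields are hypothetical, and in practice teams typically reach for OpenAPI, JSON Schema, or a dedicated contract-testing tool.

```python
from typing import Any

# Hypothetical contract published by a consumer team: the fields it relies on
# and the Python types their JSON values must deserialize to.
ORDER_CONTRACT: dict[str, type] = {
    "order_id": str,
    "status": str,
    "total_cents": int,
}


def contract_violations(response: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return human-readable violations; an empty list means the response conforms.

    Extra fields are allowed, so the provider can evolve its API additively."""
    problems = []
    for field_name, expected in contract.items():
        if field_name not in response:
            problems.append(f"missing field: {field_name}")
            continue
        value = response[field_name]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        wrong_type = not isinstance(value, expected) or (
            expected is int and isinstance(value, bool)
        )
        if wrong_type:
            problems.append(
                f"{field_name}: expected {expected.__name__}, got {type(value).__name__}"
            )
    return problems


if __name__ == "__main__":
    ok = {"order_id": "A-1", "status": "SHIPPED", "total_cents": 1999, "carrier": "UPS"}
    broken = {"order_id": "A-2", "total_cents": "19.99"}
    print(contract_violations(ok, ORDER_CONTRACT))  # []
    print(contract_violations(broken, ORDER_CONTRACT))
```

Failing the provider's build on a non-empty result turns an implicit promise into a checked one, which is the practical meaning of a stable API.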

3. Uber: Global Scaling Through Domain-Driven Microservices

Rapid growth strained Uber’s monolithic backend. To handle global scale, Uber decomposed its architecture by business domains (e.g., rides, payments), adopting SOA principles for independent scaling across regions. This was a strategic necessity to manage millions of concurrent trips with low latency.

The migration delivered sub-second latency at that scale, reduced deployment risk, and made it easier to evolve the tech stack service by service. However, early over-decomposition into overly fine-grained services created operational complexity and taught Uber the value of starting with coarse-grained domains.

Strategic Analysis & Breakdown

- Problem: The primary issue was a scaling bottleneck. A single codebase managed all global operations, meaning a bug fix for one city required a full redeployment that risked disrupting service for everyone.

- Approach: Uber adopted a Domain-Driven Design (DDD) approach, breaking the monolith apart along business capability lines. This allowed them to build specialized services optimized for specific tasks, using different technologies (Go for high-performance services, Python for data science) where appropriate.

- Key Tactic - Building a Paved Road: Recognizing the complexity of managing thousands of services, Uber invested heavily in creating a standardized platform-as-a-service (PaaS) to provide developers with common libraries for logging, monitoring, and deployment.
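
A paved road often starts as a shared library that every service imports, so logs and metrics come out in one consistent shape without each team reinventing them. Below is a minimal Python sketch of that idea, not Uber's actual libraries: the `instrumented` decorator, the `paved_road` logger name, and the `estimate_fare` handler with its pricing formula are hypothetical.

```python
import functools
import json
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("paved_road")


def instrumented(service: str, endpoint: str) -> Callable:
    """Hypothetical shared-library decorator: every handler that uses it emits
    one structured log line with the same fields, which is what lets a platform
    team build uniform dashboards and alerts across all services."""

    def decorator(handler: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(handler)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            status = "ok"
            try:
                return handler(*args, **kwargs)
            except Exception:
                status = "error"
                raise  # the library records the failure but never swallows it
            finally:
                logger.info(json.dumps({
                    "service": service,
                    "endpoint": endpoint,
                    "status": status,
                    "latency_ms": round((time.perf_counter() - start) * 1000, 3),
                }))
        return wrapper
    return decorator


@instrumented(service="trips", endpoint="estimate_fare")
def estimate_fare(distance_km: float) -> float:
    return 2.5 + 1.25 * distance_km  # placeholder pricing logic, not Uber's
```

With `logging.basicConfig(level=logging.INFO)` configured, `estimate_fare(10.0)` returns `15.0` and logs one JSON line containing `service`, `endpoint`, `status`, and `latency_ms`. The design choice is that product teams get observability by adding one line, rather than by following a wiki page.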

Actionable Takeaways & Replicable Methods

- Decompose by Business Domain: Start with coarse-grained services that map directly to business functions (e.g., Invoicing, User Profiles). Avoid creating services that are too small too early.

- Invest in a Platform Team Early: A dedicated platform team that provides standardized tooling for observability, deployment, and communication is not a luxury; it’s a prerequisite for scaling successfully.

- Standardize Communication Protocols: Uber standardized on technologies like Apache Thrift for RPC and later gRPC. Having a clear, consistent protocol for service-to-service communication is essential to prevent integration chaos.

4. Spotify: Autonomy-Focused Migration

To support explosive user growth without slowing down feature development, Spotify broke its monolith into features owned by cross-functional “squads.” Services communicated via events and APIs, enabling a culture of high-velocity, independent releases that resulted in hundreds of daily deployments.

This approach yielded faster feature rollout for capabilities like personalized playlists, better fault isolation between components, and a cultural alignment where teams own end-to-end responsibility. This minimized coordination overhead and allowed the engineering organization to scale without collapsing under its own weight.

Strategic Analysis & Breakdown

- Problem: The primary bottleneck was not technology but coordination. As the engineering organization grew, a centralized, monolithic codebase created complex dependencies, slowing down feature development.

- Approach: They broke the codebase apart to match the desired organizational structure of autonomous squads. The architecture was designed to serve the teams, not the other way around.

- Key Tactic - The “Squad” Model: Spotify’s architecture is a direct reflection of its culture. Squads own features, “Tribes” are collections of squads in related areas, and “Chapters” group specialists (e.g., backend engineers) across squads.

Actionable Takeaways & Replicable Methods

- Align Architecture with Team Structure: Before you decompose a monolith, map out your desired team structure. This principle is central to using Domain-Driven Design to guide legacy system modernization.

- Invest in a Strong Platform Team: Empowering squads requires giving them a “paved road.” Spotify invested heavily in a platform team that provides shared infrastructure and deployment pipelines.

- Establish Clear Service Contracts: With hundreds of services, clear and stable APIs (REST, gRPC) and event-driven patterns are non-negotiable to avoid a “distributed monolith” anti-pattern.
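
The decoupling that event-driven patterns buy is easiest to see in miniature. This Python sketch uses an in-memory `EventBus` as a stand-in for a real message broker; the class, the `track_played` topic, and the subscribers are hypothetical illustrations, not Spotify's infrastructure.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """In-memory stand-in for a message broker. Producers publish to a topic
    without knowing who consumes it; each squad subscribes independently."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._subscribers.get(topic, [])  # .get avoids creating empty topics
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    playlist_updates: list[str] = []
    # Hypothetical squads: one feeds personalized playlists, one tracks royalties.
    bus.subscribe("track_played", lambda e: playlist_updates.append(e["track_id"]))
    bus.subscribe("track_played", lambda e: print("royalty event for", e["track_id"]))
    bus.publish("track_played", {"user_id": "u1", "track_id": "t42"})
```

The playback code never imports the playlist or royalty code, so either squad can ship, or add a new consumer, without coordinating a release. A real broker adds what this sketch omits: durability, and delivery that does not block the publisher when a subscriber is slow or offline.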

5. Walmart: E-commerce Revival for Black Friday Peaks

Walmart’s legacy monolith couldn’t handle traffic surges during Black Friday, risking revenue and brand reputation. The company adopted OpenStack and Node.js microservices, routing traffic via proxies for a gradual “strangling” of the legacy system.

The new architecture survived 10x traffic increases without crashes, cut deployment times from weeks to hours, and boosted online sales significantly by enabling real-time inventory and pricing adjustments. This microservices migration case study shows how a legacy retailer can re-architect to compete with digital-native giants.

Strategic Analysis & Breakdown

- Problem: The monolithic architecture created a single point of failure that was especially vulnerable during Black Friday. A failure in one subsystem could cascade, bringing down the entire e-commerce platform.

- Approach: Walmart embraced a domain-driven design (DDD) philosophy, aligning microservices with specific business capabilities. They heavily utilized the Strangler Fig pattern, routing traffic through proxies to incrementally peel off functionality.

- Key Tactic - Platform as a Product: Instead of letting each team build its own infrastructure, Walmart’s engineering leadership invested in creating an internal, standardized platform that accelerated the migration and ensured consistency across hundreds of new services.

Actionable Takeaways & Replicable Methods

- Align Services to Business Domains: Don’t just break up code; model your services around tangible business functions (e.g., cart, checkout, user profile).

- Build a Paved Road for Developers: A centralized platform team that provides a “paved road” of standardized tools and deployment pipelines is a massive force multiplier.

- Use Proxies for a Gradual Rollout: By placing a proxy layer in front of legacy systems, Walmart could safely route a small percentage of traffic to new microservices, testing in production with a minimal blast radius.
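
The proxy's routing decision can be as simple as hashing a stable key into a percentage bucket. This Python sketch shows generic percentage-based canary routing, not Walmart's actual proxy configuration; `choose_backend` and the backend names are hypothetical.

```python
import hashlib

LEGACY = "legacy-monolith"
NEW = "checkout-service"  # hypothetical new microservice


def choose_backend(user_id: str, rollout_percent: int) -> str:
    """Send a stable slice of users to the new service.

    Hashing the user ID (instead of picking randomly per request) keeps each
    user on the same backend across requests, which avoids flip-flopping
    sessions and makes errors attributable to one side."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return NEW if bucket < rollout_percent else LEGACY
```

Raising `rollout_percent` from 1 to 5 to 25 widens the blast radius gradually, and because each user's bucket is fixed, users already routed to the new service stay there as the percentage grows. Setting it back to 0 is the rollback.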

6. Best Buy: The Strangler Pattern for Zero-Downtime Retail Modernization

Best Buy’s monolithic e-commerce site risked major disruptions during updates, a significant liability for a customer-facing retail platform. They incrementally replaced components with microservices hidden behind facades, validating performance and functionality in production before switching over traffic.

This Strangler Fig pattern implementation achieved zero-downtime transitions, improved scalability for promotional events, and made it easier to integrate new features like mobile checkout. The case shows that the strangler pattern is a highly effective, risk-mitigating strategy for migrating critical, customer-facing systems.

Strategic Analysis & Breakdown

- Problem: The primary risk was business disruption. A “big bang” migration of their core e-commerce platform was too dangerous. They needed a method that allowed for safe, incremental modernization without downtime.

- Approach: They adopted the Strangler Fig pattern in its purest form. A proxy layer (the facade) was placed in front of the monolith. New microservices were built to replace specific functionalities, and the proxy was configured to route requests for those functions to the new service while everything else continued to go to the monolith.

- Key Tactic - Parallel Runs and Validation: Before switching traffic, Best Buy would run the new microservice in parallel with the old monolithic function, comparing outputs and performance to ensure parity. This validation step was crucial for building confidence and catching bugs before they impacted customers.
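
At its core, the facade is a routing table. The Python sketch below shows the decision a strangler proxy makes for each request; in production this usually lives in API gateway or reverse-proxy configuration rather than application code, and the paths and internal hostnames here are hypothetical.

```python
# Hypothetical routing table for a strangler facade: path prefixes that have
# been migrated map to new services; everything else still goes to the monolith.
MIGRATED_ROUTES: dict[str, str] = {
    "/api/store-locator": "http://store-locator.internal",
    "/api/reviews": "http://reviews.internal",
}
MONOLITH = "http://monolith.internal"


def route(path: str) -> str:
    """Return the upstream base URL for a request path (longest prefix wins)."""
    matches = [
        prefix for prefix in MIGRATED_ROUTES
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return MONOLITH
    return MIGRATED_ROUTES[max(matches, key=len)]
```

Migrating another feature becomes a one-line addition to `MIGRATED_ROUTES`, and rolling it back is deleting that line. Matching on `prefix + "/"` keeps a path like `/api/reviewsX` from being captured by `/api/reviews`.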

Actionable Takeaways & Replicable Methods

- Identify Clear Seams: The Strangler Fig pattern works best when you can identify discrete, well-defined functionalities within the monolith to “strangle” first. Start with a feature that has minimal dependencies.

- Use a Proxy Facade: An API gateway or a simple reverse proxy is essential for routing traffic between the old and new systems. This layer provides the control needed to manage the gradual migration.

- Validate in Production: Don’t just rely on staging tests. Use techniques like traffic shadowing or parallel runs to validate that your new service behaves correctly under real production load before making the final cutover.
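
A parallel run reduces to three steps: serve the user from the legacy path, send the same request to the new service in the shadow, and record any difference. The Python sketch below assumes synchronous calls and responses that can be compared with `==`; real shadowing is usually asynchronous, done at the proxy, and needs to normalize volatile fields such as timestamps. `parallel_run` and `ParallelRunReport` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ParallelRunReport:
    total: int = 0
    mismatches: list[tuple[Any, Any, Any]] = field(default_factory=list)
    candidate_errors: int = 0

    @property
    def mismatch_rate(self) -> float:
        return len(self.mismatches) / self.total if self.total else 0.0


def parallel_run(
    requests: list[Any],
    legacy: Callable[[Any], Any],
    candidate: Callable[[Any], Any],
    report: ParallelRunReport,
) -> list[Any]:
    """Serve every request from the legacy path, shadow it to the candidate,
    and record any divergence. Users only ever see the legacy result."""
    responses = []
    for req in requests:
        expected = legacy(req)
        responses.append(expected)
        report.total += 1
        try:
            actual = candidate(req)
        except Exception:
            report.candidate_errors += 1  # a crash in the shadow must not affect users
            continue
        if actual != expected:
            report.mismatches.append((req, expected, actual))
    return responses
```

A cutover criterion can then be stated in numbers, for example zero candidate errors and a mismatch rate below an agreed threshold over a full business cycle, rather than left to a gut feeling.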

7. Samsung: Database Decomposition for 1.1 Billion Users

A centralized Oracle monolith limited Samsung’s ability to adopt microservices at scale for its 1.1 billion users. The tight coupling at the database layer was a major bottleneck. The solution was to migrate to Amazon Aurora PostgreSQL-compatible instances, breaking data ownership out per service.

This database decomposition slashed costs, enabled independent scaling for the Samsung Account service (handling 80K requests/sec), and supported multi-region resilience. The move away from Oracle also avoided vendor lock-in pitfalls, a key strategic goal.

Strategic Analysis & Breakdown

- Problem: Data coupling. Even with application logic in separate services, a shared monolithic database created a single point of failure and a performance bottleneck, preventing true service independence.

- Approach: They adopted a database-per-service pattern. Each microservice became the sole owner of its own data schema and storage. Communication between services was forced through APIs, never direct database calls.

- Key Tactic - Phased Data Migration: They didn’t migrate all 1.1B users’ data at once. They used a phased approach, synchronizing data between the old Oracle DB and the new Aurora instances, validating consistency before cutting over each domain.
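
Consistency validation before a per-domain cutover can start as a keyed comparison of row fingerprints. In this Python sketch, in-memory dictionaries stand in for query results from the old and new databases, and all names are hypothetical; this is not Samsung's tooling, and at real scale you would compare chunked or aggregated checksums instead of holding every row in memory.

```python
import hashlib
import json
from typing import Any

Row = dict[str, Any]


def row_fingerprint(row: Row) -> str:
    """Stable hash of a row's contents, independent of column order."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def diff_tables(source: dict[str, Row], target: dict[str, Row]) -> dict[str, list[str]]:
    """Compare two snapshots keyed by primary key and classify discrepancies."""
    src_keys, tgt_keys = set(source), set(target)
    changed = sorted(
        k for k in src_keys & tgt_keys
        if row_fingerprint(source[k]) != row_fingerprint(target[k])
    )
    return {
        "missing_in_target": sorted(src_keys - tgt_keys),
        "unexpected_in_target": sorted(tgt_keys - src_keys),
        "changed": changed,
    }


def ready_to_cut_over(source: dict[str, Row], target: dict[str, Row]) -> bool:
    """A domain is ready only when every discrepancy list is empty."""
    return not any(diff_tables(source, target).values())
```

Classifying discrepancies, rather than returning a single pass/fail flag, tells you whether the sync process is dropping rows, duplicating them, or transforming them incorrectly, and each of those has a different fix.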

Actionable Takeaways & Replicable Methods

- Break the Shared Database: This is often the hardest but most critical step. Each microservice must own its data. Any form of shared database schema between services is a distributed monolith anti-pattern.

- Use APIs for Data Access: If one service needs data from another, it must go through a well-defined API. Direct database access between services is forbidden.

- Plan for Data Synchronization: During the transition, you will need a robust strategy for keeping data in the old and new databases in sync until the migration for a given domain is complete.

8. HeartFlow: Recovery from a Catastrophic Migration Failure

This case study is a crucial lesson in what not to do. A rushed microservices switch for HeartFlow’s identity management system locked all users out on launch day. The technical failure was compounded by a failure in communication and planning, leading to a catastrophic loss of trust from customers and internal stakeholders.

Recovery involved implementing parallel runs of the old and new systems, fostering a cultural shift toward collaboration between Dev and Ops, and adopting phased rollouts with feature flags. The turnaround restored trust and ultimately achieved the promised scalability for their healthcare portals, highlighting that perception management and team dynamics are as critical as technical fixes.

Strategic Analysis & Breakdown

- Problem: A “big bang” cutover with insufficient testing and no rollback plan. The team was overconfident and underestimated the complexity of migrating a critical system like identity management.

- Approach to Recovery: They immediately rolled back. The recovery plan focused on de-risking the next attempt through technical safeguards (parallel runs, canary releases) and process changes (better cross-team communication, stakeholder updates).

- Key Tactic - Rebuilding Trust: The engineering leader took public ownership of the failure, provided transparent post-mortems, and over-communicated the recovery plan to business stakeholders. This was essential to regain the political capital needed for a second attempt.

Actionable Takeaways & Replicable Methods

- Never Migrate Identity First: Identity and access management is one of the most complex and critical parts of any system. It is a poor candidate for a first microservice.

- Always Have a Rollback Plan: Assume the deployment will fail. Your rollback plan should be tested and automated as much as possible.

- Manage Stakeholder Expectations: A microservices migration is a high-risk project. Be transparent about the risks and have a communication plan ready for when things go wrong.
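
“Tested and automated” can mean a gate that compares a canary's error rate with the current version's after each evaluation window and triggers the rollback without waiting for a human. The Python sketch below uses illustrative thresholds, not HeartFlow's; `Window` and `canary_decision` are hypothetical, and wiring the `"rollback"` result to a real deployment system is left out.

```python
from dataclasses import dataclass


@dataclass
class Window:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Window, canary: Window, *,
                    min_requests: int = 500, max_ratio: float = 1.5,
                    noise_floor: float = 0.001, abs_ceiling: float = 0.02) -> str:
    """Return "rollback", "wait", or "promote" for one evaluation window."""
    # A catastrophic failure (e.g. every login rejected) triggers rollback
    # immediately, even before the canary has seen much traffic.
    if canary.error_rate > abs_ceiling:
        return "rollback"
    if canary.requests < min_requests:
        return "wait"  # not enough traffic yet to judge a subtle regression
    # Tolerate some noise relative to the baseline, with a floor so a
    # near-zero baseline does not make a single error fatal.
    allowed = max(max_ratio * baseline.error_rate, noise_floor)
    return "rollback" if canary.error_rate > allowed else "promote"
```

For an identity system like the one described here, a launch-day lockout would show up as a near-100% error rate in the first window and trip the absolute ceiling before most users ever reached the new path.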

9. Prime Video: Partial Rollback to a Monolith for Cost Efficiency

In a widely discussed move, Prime Video’s monitoring team consolidated several distributed microservices into a single, modular monolithic application. The microservices-based solution, which used AWS Step Functions and Lambda, incurred high overhead for their specific workload, leading to excessive infrastructure costs.

The consolidation reduced infrastructure costs by over 90% while retaining the scalability needed. This case demonstrates that microservices are not a universal solution and that periodic re-evaluation is necessary. Consolidating tightly coupled components also avoids the “distributed monolith” anti-pattern, where services are deployed separately but are so interdependent that they deliver no real benefit, only operational overhead.

Strategic Analysis & Breakdown

- Problem: High operational cost. The “chattiness” between their distributed components and the overhead of the serverless orchestration layer were not a good fit for their high-throughput monitoring workload, resulting in a cost structure that did not scale efficiently.

- Approach: They identified the specific components causing the cost issue and refactored them back into a single, well-structured service. This was not a rejection of microservices entirely, but a pragmatic decision for a specific use case.

- Key Tactic - Cost Analysis: The team performed a detailed cost analysis that directly attributed infrastructure spend to specific architectural choices. This data-driven approach allowed them to make a compelling case for the rollback.
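
The core of such an analysis is a cost model that separates spend that scales with every hop between components from spend that scales with provisioned compute. The Python sketch below is a hypothetical model, not Prime Video's analysis; the prices in the example are placeholder parameters, not AWS list prices.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    items_per_month: int  # e.g. media segments analysed
    steps_per_item: int   # pipeline stages each item passes through


def distributed_cost(w: Workload, price_per_transition: float,
                     price_per_handoff: float) -> float:
    """Spend that scales with every hop: an orchestration transition plus
    intermediate storage or transfer each time an item moves between stages."""
    hops = w.items_per_month * w.steps_per_item
    return hops * (price_per_transition + price_per_handoff)


def consolidated_cost(instance_count: int, price_per_instance_month: float) -> float:
    """In-process pipeline: spend scales with provisioned compute, not hops."""
    return instance_count * price_per_instance_month


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    workload = Workload(items_per_month=100_000_000, steps_per_item=5)
    print(f"distributed:  ${distributed_cost(workload, 0.000025, 0.000005):,.0f}/month")
    print(f"consolidated: ${consolidated_cost(10, 500.0):,.0f}/month")
```

The point is the shape, not the numbers: the first function grows linearly with both volume and the number of pipeline stages, while the second grows only when you add capacity. Plugging in real billing data shows which term dominates for a given workload.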

Actionable Takeaways & Replicable Methods

- Microservices are Not Always the Answer: For some workloads, particularly those that are not complex and require high data throughput between components, a well-structured monolith can be more efficient.

- Continuously Evaluate Your Architecture: Architectural decisions are not permanent. Regularly review performance, operational complexity, and cost to ensure your architecture still serves the business needs.

- Cost is a Key Architectural Driver: Don’t treat cost as an afterthought. It should be a primary consideration when designing and evaluating a distributed system.

10. Pipedrive: AWS-Hosted Multi-Microservice Expansion

Pipedrive’s distributed monolith was slowing down growth and innovation for its CRM platform. The company undertook a massive migration of over 500 services to AWS, implementing custom data frameworks and tooling to manage the complexity.

The migration significantly improved API response times, cut per-customer infrastructure costs, and enabled Graviton-based processor optimizations. This allowed the company to sustain 20% annual growth without being constrained by operational bottlenecks, proving that a well-executed cloud-native migration can directly fuel business expansion.

Strategic Analysis & Breakdown

- Problem: A “distributed monolith”. They had many services, but they were tightly coupled with shared libraries and release cycles, negating the benefits of a microservices architecture.

- Approach: A full commitment to a cloud-native architecture on AWS, leveraging managed services where possible. They invested in building a platform team to create custom frameworks for data access and service communication, standardizing how their 500+ services operated.

- Key Tactic - Custom Data Framework: To manage data consistency across hundreds of services, Pipedrive built a custom data access layer. This abstracted away the complexity of their distributed database setup, making it easier for developers to build new services correctly.
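
One common shape for such a layer, assumed here rather than taken from Pipedrive, routes each tenant to the database shard that holds its data, so service code asks for “this company's deals” and never sees the physical topology. In the Python sketch below, `TenantDataAccess`, the `deals` table, and the shard names are hypothetical; `Database` is a minimal protocol standing in for a real driver.

```python
from typing import Any, Protocol


class Database(Protocol):
    def query(self, sql: str, params: tuple[Any, ...]) -> list[dict[str, Any]]: ...


class TenantDataAccess:
    """Hypothetical data access layer: callers pass a company ID and never
    need to know which physical database cluster holds that company's rows."""

    def __init__(self, shards: dict[str, Database], shard_of_company: dict[int, str]):
        self._shards = shards
        self._shard_of_company = shard_of_company

    def _db_for(self, company_id: int) -> Database:
        try:
            return self._shards[self._shard_of_company[company_id]]
        except KeyError:
            raise LookupError(f"no shard registered for company {company_id}") from None

    def deals_for(self, company_id: int) -> list[dict[str, Any]]:
        # Every query is scoped by company_id, so one tenant never reads another's rows.
        return self._db_for(company_id).query(
            "SELECT id, title FROM deals WHERE company_id = %s", (company_id,)
        )
```

Because services depend only on `TenantDataAccess`, the platform team can move a company to a different shard by updating the mapping, without any service changing its code.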

Actionable Takeaways & Replicable Methods

- Beware the Distributed Monolith: Simply having many small services doesn’t mean you have a microservices architecture. If they are tightly coupled and cannot be deployed independently, you have the downsides of both architectures.

- Standardize Tooling at Scale: When managing hundreds of services, standardization is key. A platform team providing common libraries, deployment pipelines, and observability tools is essential.

- Leverage Cloud-Native Services: A key part of Pipedrive’s success was fully embracing the AWS ecosystem (e.g., managed databases, Graviton processors) rather than just using the cloud as a simple data center.

Final Thoughts

The path from a monolithic architecture to microservices is less a straight line and more a series of strategic trade-offs, a reality underscored by every microservices migration case study we’ve examined. From Netflix’s foundational shift to handle massive streaming demands to Amazon’s “two-pizza team” philosophy that unlocked unprecedented velocity, a clear pattern emerges: success is not about adopting microservices, but about solving specific, high-stakes business problems. These are not academic exercises; they are calculated responses to tangible threats like system downtime, stunted growth, and the inability to out-innovate competitors.

The Unifying Themes Across Every Case Study

Distilling the lessons from giants like Spotify, Uber, and even the cautionary tales from Prime Video’s partial rollback, we can identify several core principles that transcend industry and scale. These aren’t just best practices; they are the strategic pillars that separate a successful migration from a costly, multi-year distraction.

- Business Drivers First, Technology Second: The most successful migrations began with a clear “why.” Walmart couldn’t survive Black Friday traffic. Spotify needed to accelerate feature delivery to compete. The technology (microservices) was merely the most logical tool to solve a pressing business constraint, not an end in itself.

- Incrementalism Mitigates Catastrophe: The “big bang” migration is a recipe for failure. Best Buy’s textbook execution of the Strangler Fig Pattern and Netflix’s multi-year, non-critical-service-first approach demonstrate the power of gradual, controlled transitions. This methodology de-risks the process, allowing teams to learn, adapt, and build confidence without jeopardizing the entire system.

- Organizational Structure is Architecture: Amazon and Spotify are prime examples of Conway’s Law in action. Their shift to autonomous, domain-oriented teams (“squads” or “two-pizza teams”) was not a byproduct of their architectural change; it was a prerequisite. A monolithic team structure cannot effectively build or maintain a distributed system. The architecture must mirror the desired organizational autonomy and communication patterns.

- Data Decomposition is the Hardest Part: While refactoring application logic is complex, decoupling the monolithic database is often the central challenge. Samsung’s deliberate move to a database-per-service model highlights this critical step. Without a clear data ownership strategy per service, you risk creating a “distributed monolith,” where services are technically separate but remain shackled by a shared, single-point-of-failure database.

Strategic Insight: A microservices migration is fundamentally a de-risking operation. You are trading the known, concentrated risk of a monolith for the distributed, manageable risks of independent services. The goal is not to eliminate risk but to isolate its blast radius.

Your Next Steps: From Case Study to Strategy

Reflecting on these examples is the first step. The next is translating these insights into a pragmatic action plan for your own organization. Before writing a single line of new code, engineering leaders must address three critical questions:

- What is our single biggest architectural bottleneck? Is it deployment velocity, team contention, scaling limitations, or something else? Be specific and quantify the pain. This defines your primary objective.

- Which domain is the safest and most valuable to decouple first? Identify a “seam” in your monolith that is relatively isolated and whose migration would provide a meaningful, measurable win. This will be your pilot project.

- Is our team culturally and technically ready? Do we have the skills in API design, containerization, and distributed monitoring? More importantly, are we prepared to shift to a culture of decentralized ownership and accountability?

Ultimately, a microservices migration case study serves as a map, showing both the treasure and the dragons. The journey from monolith to microservices is not guaranteed, nor is it universally the correct path, as Prime Video’s experience proves. It is a strategic investment in organizational agility and technical resilience, demanding careful planning, executive buy-in, and an unwavering focus on the business outcomes that justify the immense effort involved.

Navigating the complexities of vendor selection and migration strategy is a significant challenge. Modernization Intel provides data-driven research and vendor shortlists to help you de-risk your technology decisions. Explore our in-depth analysis to build a migration plan based on proven outcomes, not marketing hype.
